Tools and Technologies used in this article :

Build Hadoop bin distribution for Windows

- Download and install Microsoft Windows SDK v7.1.
- Download and install the Unix command-line tool Cygwin.
- Download and install Maven 3.1.1.
- Download Protocol Buffers 2.5.0 and extract it to a folder (say c:\protobuf).

-

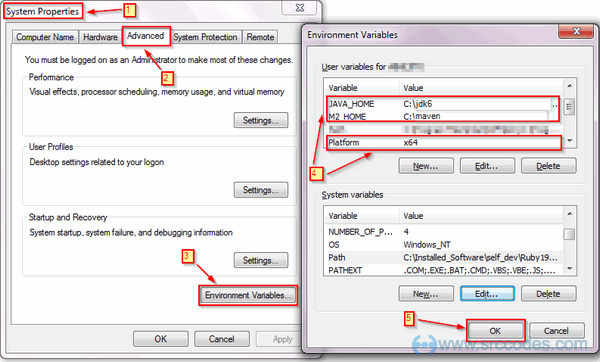

Add Environment Variables JAVA_HOME, M2_HOME and Platform if not added already.

Add Environment Variables: Note : Variable name Platform is case sensitive. And value will be either x64 or Win32 for building on a 64-bit or 32-bit system.

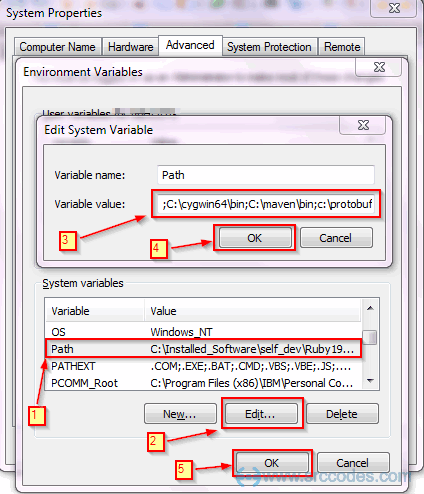

Note : Variable name Platform is case sensitive. And value will be either x64 or Win32 for building on a 64-bit or 32-bit system.Edit Path Variable to add bin directory of Cygwin (say C:\cygwin64\bin), bin directory of Maven (say C:\maven\bin) and installation path of Protocol Buffers (say c:\protobuf).

Edit Path Variable:
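Before starting the build, it can help to confirm that the variables described above are visible to new processes. The following is a minimal Python sketch and not part of the original procedure; the helper name `check_build_environment` is hypothetical. It only checks that the three variables are set and that Platform holds x64 or Win32. Python upper-cases environment variable names on Windows, so the sketch cannot verify how the name Platform is spelled.

```python
import os

REQUIRED = ("JAVA_HOME", "M2_HOME", "Platform")
VALID_PLATFORMS = ("x64", "Win32")


def check_build_environment(env):
    """Return a list of problems found in the mapping `env` (empty if none)."""
    # Compare names case-insensitively, because os.environ upper-cases
    # variable names on Windows.
    upper = {name.upper(): value for name, value in env.items()}
    problems = []
    for name in REQUIRED:
        if not upper.get(name.upper()):
            problems.append(f"{name} is not set")
    platform = upper.get("PLATFORM")
    if platform and platform not in VALID_PLATFORMS:
        problems.append(f"Platform should be x64 or Win32, found {platform!r}")
    return problems


if __name__ == "__main__":
    for problem in check_build_environment(os.environ):
        print(problem)
```

Run it from a newly opened command prompt, since prompts that were already open do not pick up changed environment variables.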

Download hadoop-2.2.0-src.tar.gz and extract it to a folder with a short path (say c:\hdfs) to avoid runtime problems caused by the maximum path length limitation in Windows.
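To see how much room a chosen folder leaves, the longest path under it can be measured. The sketch below is illustrative only: the function names are hypothetical, and it assumes the classic Windows limit of 260 characters (MAX_PATH, which includes the terminating null character). The build creates further nested output folders, so the real margin after building will be smaller than the value reported for a freshly extracted tree.

```python
import os

MAX_PATH = 260  # classic Windows limit, including the terminating null character


def longest_path(root):
    """Return the longest absolute path found under `root`."""
    longest = os.path.abspath(root)
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            candidate = os.path.abspath(os.path.join(dirpath, name))
            if len(candidate) > len(longest):
                longest = candidate
    return longest


def headroom(root):
    """Characters left before the longest path under `root` reaches the limit."""
    return MAX_PATH - 1 - len(longest_path(root))
```

For example, `headroom(r"c:\hdfs")` reports how many characters remain for the extracted source tree.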

Select Start --> All Programs --> Microsoft Windows SDK v7.1 and open the Windows SDK 7.1 Command Prompt. Change directory to the Hadoop source code folder (c:\hdfs). Execute mvn package with the options -Pdist,native-win -DskipTests -Dtar to create the Windows binary tar distribution.

Windows SDK 7.1 Command Prompt

    Setting SDK environment relative to C:\Program Files\Microsoft SDKs\Windows\v7.1\.
    Targeting Windows 7 x64 Debug

    C:\Program Files\Microsoft SDKs\Windows\v7.1>cd c:\hdfs

    C:\hdfs>mvn package -Pdist,native-win -DskipTests -Dtar
    [INFO] Scanning for projects...
    [INFO] ------------------------------------------------------------------------
    [INFO] Reactor Build Order:
    [INFO]
    [INFO] Apache Hadoop Main
    [INFO] Apache Hadoop Project POM
    [INFO] Apache Hadoop Annotations
    [INFO] Apache Hadoop Assemblies
    [INFO] Apache Hadoop Project Dist POM
    [INFO] Apache Hadoop Maven Plugins
    [INFO] Apache Hadoop Auth
    [INFO] Apache Hadoop Auth Examples
    [INFO] Apache Hadoop Common
    [INFO] Apache Hadoop NFS
    [INFO] Apache Hadoop Common Project
    [INFO] Apache Hadoop HDFS
    [INFO] Apache Hadoop HttpFS
    [INFO] Apache Hadoop HDFS BookKeeper Journal
    [INFO] Apache Hadoop HDFS-NFS
    [INFO] Apache Hadoop HDFS Project
    [INFO] hadoop-yarn
    [INFO] hadoop-yarn-api
    [INFO] hadoop-yarn-common
    [INFO] hadoop-yarn-server
    [INFO] hadoop-yarn-server-common
    [INFO] hadoop-yarn-server-nodemanager
    [INFO] hadoop-yarn-server-web-proxy
    [INFO] hadoop-yarn-server-resourcemanager
    [INFO] hadoop-yarn-server-tests
    [INFO] hadoop-yarn-client
    [INFO] hadoop-yarn-applications
    [INFO] hadoop-yarn-applications-distributedshell
    [INFO] hadoop-mapreduce-client
    [INFO] hadoop-mapreduce-client-core
    [INFO] hadoop-yarn-applications-unmanaged-am-launcher
    [INFO] hadoop-yarn-site
    [INFO] hadoop-yarn-project
    [INFO] hadoop-mapreduce-client-common
    [INFO] hadoop-mapreduce-client-shuffle
    [INFO] hadoop-mapreduce-client-app
    [INFO] hadoop-mapreduce-client-hs
    [INFO] hadoop-mapreduce-client-jobclient
    [INFO] hadoop-mapreduce-client-hs-plugins
    [INFO] Apache Hadoop MapReduce Examples
    [INFO] hadoop-mapreduce
    [INFO] Apache Hadoop MapReduce Streaming
    [INFO] Apache Hadoop Distributed Copy
    [INFO] Apache Hadoop Archives
    [INFO] Apache Hadoop Rumen
    [INFO] Apache Hadoop Gridmix
    [INFO] Apache Hadoop Data Join
    [INFO] Apache Hadoop Extras
    [INFO] Apache Hadoop Pipes
    [INFO] Apache Hadoop Tools Dist
    [INFO] Apache Hadoop Tools
    [INFO] Apache Hadoop Distribution
    [INFO] Apache Hadoop Client
    [INFO] Apache Hadoop Mini-
    [INFO]
    [INFO] ------------------------------------------------------------------------
    [INFO] Building Apache Hadoop Main 2.2.0
    [INFO] ------------------------------------------------------------------------
    [INFO]
    [INFO] --- maven-enforcer-plugin:1.3.1:enforce (default) @ hadoop-main ---
    [INFO]
    [INFO] --- maven-site-plugin:3.0:attach-descriptor (attach-descriptor) @ hadoop-main ---

Note: I have pasted only the first few lines of the huge log generated by Maven. This build step requires an Internet connection, as Maven will download all the required dependencies.
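If the build needs to be repeated, the same invocation can be scripted. This is only a sketch under stated assumptions: the function names are hypothetical, the script is assumed to be started from the Windows SDK 7.1 Command Prompt so that the SDK environment is inherited, and `shutil.which` is used because the Maven launcher on Windows is a batch script rather than an .exe file.

```python
import shutil
import subprocess


def build_command(maven="mvn"):
    """Assemble the Maven invocation used for the Windows native distribution."""
    return [maven, "package", "-Pdist,native-win", "-DskipTests", "-Dtar"]


def run_build(source_dir):
    """Run the build in `source_dir`; raises CalledProcessError on failure."""
    # shutil.which honours PATHEXT on Windows, so it finds the Maven batch script.
    maven = shutil.which("mvn")
    if maven is None:
        raise FileNotFoundError("mvn was not found on Path")
    subprocess.run(build_command(maven), cwd=source_dir, check=True)
```

Calling `run_build(r"c:\hdfs")` corresponds to the two commands typed in the prompt above.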

If everything goes well in the previous step, the native distribution hadoop-2.2.0.tar.gz will be created inside the C:\hdfs\hadoop-dist\target\hadoop-2.2.0 directory.

Install Hadoop

Extract hadoop-2.2.0.tar.gz to a folder (say c:\hadoop).
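Any archive tool can do the extraction. For readers who prefer to script it, here is a minimal sketch using Python's standard `tarfile` module; the function name `extract_distribution` is hypothetical. If the archive contains a top-level folder, the files land inside that folder under the destination and may need to be moved up one level.

```python
import tarfile


def extract_distribution(archive, destination):
    """Extract a .tar.gz archive into `destination` and return the member names."""
    with tarfile.open(archive, "r:gz") as tar:
        names = tar.getnames()
        tar.extractall(destination)
    return names
```

The returned list makes it easy to check which top-level folder the archive uses before setting HADOOP_HOME.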

-

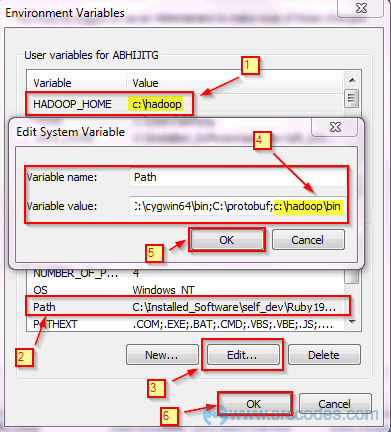

Add Environment Variable HADOOP_HOME and edit Path Variable to add bin directory of HADOOP_HOME (sayC:\hadoop\bin).

Add Environment Variables:

Configure Hadoop

Make the following changes to configure Hadoop.

File: C:\hadoop\etc\hadoop\core-site.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <!--
      Licensed under the Apache License, Version 2.0 (the "License");
      you may not use this file except in compliance with the License.
      You may obtain a copy of the License at

      Unless required by applicable law or agreed to in writing, software
      distributed under the License is distributed on an "AS IS" BASIS,
      WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
      See the License for the specific language governing permissions and
      limitations under the License. See accompanying LICENSE file.
    -->

    <!-- Put site-specific property overrides in this file. -->

    <configuration>
      <property>
        <name>fs.defaultFS</name>
      </property>
    </configuration>

- fs.defaultFS: The name of the default file system. A URI whose scheme and authority determine the FileSystem implementation. The URI's scheme determines the config property (fs.SCHEME.impl) naming the FileSystem implementation class. The URI's authority is used to determine the host, port, etc. for a filesystem.
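The way a file system URI breaks down into scheme and authority can be illustrated with Python's standard URL parser. This is a sketch, not Hadoop's own lookup code; the function name is hypothetical, and the URI `hdfs://localhost:9000` used in the example is an assumed value that does not come from the listing above.

```python
from urllib.parse import urlsplit


def describe_default_fs(uri):
    """Split a file system URI into the parts the description refers to."""
    parts = urlsplit(uri)
    return {
        # The scheme selects the fs.SCHEME.impl property.
        "impl_property": f"fs.{parts.scheme}.impl",
        # The authority carries the host and port.
        "host": parts.hostname,
        "port": parts.port,
    }


print(describe_default_fs("hdfs://localhost:9000"))
```

With the assumed URI, the scheme `hdfs` maps to the property name `fs.hdfs.impl`, and the authority yields the host `localhost` and the port 9000.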

File: C:\hadoop\etc\hadoop\hdfs-site.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <!--
      Licensed under the Apache License, Version 2.0 (the "License");
      you may not use this file except in compliance with the License.
      You may obtain a copy of the License at

      Unless required by applicable law or agreed to in writing, software
      distributed under the License is distributed on an "AS IS" BASIS,
      WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
      See the License for the specific language governing permissions and
      limitations under the License. See accompanying LICENSE file.
    -->

    <!-- Put site-specific property overrides in this file. -->

    <configuration>
      <property>
        <name>dfs.replication</name>
        <value>1</value>
      </property>
      <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:/hadoop/data/dfs/namenode</value>
      </property>
      <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:/hadoop/data/dfs/datanode</value>
      </property>
    </configuration>

- dfs.replication: Default block replication. The actual number of replications can be specified when the file is created. The default is used if replication is not specified at create time.
- dfs.namenode.name.dir: Determines where on the local filesystem the DFS name node should store the name table (fsimage). If this is a comma-delimited list of directories, then the name table is replicated in all of the directories, for redundancy.
- dfs.datanode.data.dir: Determines where on the local filesystem a DFS data node should store its blocks. If this is a comma-delimited list of directories, then data will be stored in all named directories, typically on different devices. Directories that do not exist are ignored.

Note: Create the namenode and datanode directories under c:/hadoop/data/dfs/.
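The two directories from the note can be created by hand in Explorer or with a few lines of script. The sketch below uses only the Python standard library; the function name `create_dfs_directories` is hypothetical.

```python
import os


def create_dfs_directories(base):
    """Create the namenode and datanode directories under `base`."""
    created = []
    for name in ("namenode", "datanode"):
        path = os.path.join(base, name)
        os.makedirs(path, exist_ok=True)  # no error if it already exists
        created.append(path)
    return created
```

For the layout used here the call would be `create_dfs_directories(r"c:\hadoop\data\dfs")`. Missing parent folders are created as well.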

File: C:\hadoop\etc\hadoop\yarn-site.xml

    <?xml version="1.0"?>
    <!--
      Licensed under the Apache License, Version 2.0 (the "License");
      you may not use this file except in compliance with the License.
      You may obtain a copy of the License at

      Unless required by applicable law or agreed to in writing, software
      distributed under the License is distributed on an "AS IS" BASIS,
      WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
      See the License for the specific language governing permissions and
      limitations under the License. See accompanying LICENSE file.
    -->
    <configuration>
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
      <property>
        <name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>
        <value>org.apache.hadoop.mapred.ShuffleHandler</value>
      </property>
    </configuration>

- yarn.nodemanager.aux-services: The auxiliary service name. The value used here is mapreduce_shuffle.
- yarn.nodemanager.aux-services.mapreduce.shuffle.class: The auxiliary service class to use. The value used here is org.apache.hadoop.mapred.ShuffleHandler.
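After editing a site file by hand, it is easy to leave a tag unclosed or a name misspelled. One way to double-check is to parse the file and print the name/value pairs. The following is a small sketch with Python's standard XML parser; the function name `read_properties` is hypothetical, and this is not how Hadoop itself loads its configuration.

```python
import xml.etree.ElementTree as ET


def read_properties(xml_text):
    """Return the name/value pairs of a Hadoop *-site.xml document as a dict."""
    root = ET.fromstring(xml_text)  # raises ParseError if the XML is malformed
    return {
        prop.findtext("name"): prop.findtext("value")
        for prop in root.iter("property")
    }
```

Reading the file with `open(path, encoding="utf-8").read()` and passing the text to `read_properties` gives a dictionary that can be compared with the values listed above.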

File: C:\hadoop\etc\hadoop\mapred-site.xml

    <?xml version="1.0"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <!--
      Licensed under the Apache License, Version 2.0 (the "License");
      you may not use this file except in compliance with the License.
      You may obtain a copy of the License at

      Unless required by applicable law or agreed to in writing, software
      distributed under the License is distributed on an "AS IS" BASIS,
      WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
      See the License for the specific language governing permissions and
      limitations under the License. See accompanying LICENSE file.
    -->

    <!-- Put site-specific property overrides in this file. -->

    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
    </configuration>

- mapreduce.framework.name: The runtime framework for executing MapReduce jobs. Can be one of local, classic or yarn.
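Because mapreduce.framework.name accepts only three values, a generated configuration can guard against typos. The sketch below builds the `<configuration>` element for mapred-site.xml with Python's standard library; the function name `mapred_site` is hypothetical, and the output omits the XML declaration and license comment shown in the listing.

```python
import xml.etree.ElementTree as ET

ALLOWED_FRAMEWORKS = ("local", "classic", "yarn")


def mapred_site(framework="yarn"):
    """Return the <configuration> element of mapred-site.xml as a string."""
    if framework not in ALLOWED_FRAMEWORKS:
        raise ValueError(f"framework must be one of {ALLOWED_FRAMEWORKS}")
    root = ET.Element("configuration")
    prop = ET.SubElement(root, "property")
    ET.SubElement(prop, "name").text = "mapreduce.framework.name"
    ET.SubElement(prop, "value").text = framework
    return ET.tostring(root, encoding="unicode")
```

Calling `mapred_site()` produces the same property as the file above, written on a single line.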

Format namenode

The namenode needs to be formatted once, before HDFS is started for the first time.

Command Prompt

    Microsoft Windows [Version 6.1.7601]
    Copyright (c) 2009 Microsoft Corporation. All rights reserved.

    C:\Users\abhijitg>cd c:\hadoop\bin

    c:\hadoop\bin>hdfs namenode -format
    13/11/03 18:07:47 INFO namenode.NameNode: STARTUP_MSG:
    /************************************************************
    STARTUP_MSG: Starting NameNode
    STARTUP_MSG: host = ABHIJITG/x.x.x.x
    STARTUP_MSG: args = [-format]
    STARTUP_MSG: version = 2.2.0
    STARTUP_MSG: classpath = <classpath jars here>
    STARTUP_MSG: build = Unknown -r Unknown; compiled by ABHIJITG on 2013-11-01T13:42Z
    STARTUP_MSG: java = 1.7.0_03
    ************************************************************/
    Formatting using clusterid: CID-1af0bd9f-efee-4d4e-9f03-a0032c22e5eb
    13/11/03 18:07:48 INFO namenode.HostFileManager: read includes:
    HostSet()
    13/11/03 18:07:48 INFO namenode.HostFileManager: read excludes:
    HostSet()
    13/11/03 18:07:48 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000
    13/11/03 18:07:48 INFO util.GSet: Computing capacity for map BlocksMap
    13/11/03 18:07:48 INFO util.GSet: VM type = 64-bit
    13/11/03 18:07:48 INFO util.GSet: 2.0% max memory = 888.9 MB
    13/11/03 18:07:48 INFO util.GSet: capacity = 2^21 = 2097152 entries
    13/11/03 18:07:48 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false
    13/11/03 18:07:48 INFO blockmanagement.BlockManager: defaultReplication = 1
    13/11/03 18:07:48 INFO blockmanagement.BlockManager: maxReplication = 512
    13/11/03 18:07:48 INFO blockmanagement.BlockManager: minReplication = 1
    13/11/03 18:07:48 INFO blockmanagement.BlockManager: maxReplicationStreams = 2
    13/11/03 18:07:48 INFO blockmanagement.BlockManager: shouldCheckForEnoughRacks = false
    13/11/03 18:07:48 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000
    13/11/03 18:07:48 INFO blockmanagement.BlockManager: encryptDataTransfer = false
    13/11/03 18:07:48 INFO namenode.FSNamesystem: fsOwner = ABHIJITG (auth:SIMPLE)
    13/11/03 18:07:48 INFO namenode.FSNamesystem: supergroup = supergroup
    13/11/03 18:07:48 INFO namenode.FSNamesystem: isPermissionEnabled = true
    13/11/03 18:07:48 INFO namenode.FSNamesystem: HA Enabled: false
    13/11/03 18:07:48 INFO namenode.FSNamesystem: Append Enabled: true
    13/11/03 18:07:49 INFO util.GSet: Computing capacity for map INodeMap
    13/11/03 18:07:49 INFO util.GSet: VM type = 64-bit
    13/11/03 18:07:49 INFO util.GSet: 1.0% max memory = 888.9 MB
    13/11/03 18:07:49 INFO util.GSet: capacity = 2^20 = 1048576 entries
    13/11/03 18:07:49 INFO namenode.NameNode: Caching file names occuring more than 10 times
    13/11/03 18:07:49 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033
    13/11/03 18:07:49 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0
    13/11/03 18:07:49 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000
    13/11/03 18:07:49 INFO namenode.FSNamesystem: Retry cache on namenode is enabled
    13/11/03 18:07:49 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis
    13/11/03 18:07:49 INFO util.GSet: Computing capacity for map Namenode Retry Cache
    13/11/03 18:07:49 INFO util.GSet: VM type = 64-bit
    13/11/03 18:07:49 INFO util.GSet: 0.029999999329447746% max memory = 888.9 MB
    13/11/03 18:07:49 INFO util.GSet: capacity = 2^15 = 32768 entries
    13/11/03 18:07:49 INFO common.Storage: Storage directory \hadoop\data\dfs\namenode has been successfully formatted.
    13/11/03 18:07:49 INFO namenode.FSImage: Saving image file \hadoop\data\dfs\namenode\current\fsimage.ckpt_0000000000000000000 using no compression
    13/11/03 18:07:49 INFO namenode.FSImage: Image file \hadoop\data\dfs\namenode\current\fsimage.ckpt_0000000000000000000 of size 200 bytes saved in 0 seconds.
    13/11/03 18:07:49 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
    13/11/03 18:07:49 INFO util.ExitUtil: Exiting with status 0
    13/11/03 18:07:49 INFO namenode.NameNode: SHUTDOWN_MSG:
    /************************************************************
    SHUTDOWN_MSG: Shutting down NameNode at ABHIJITG/x.x.x.x
    ************************************************************/

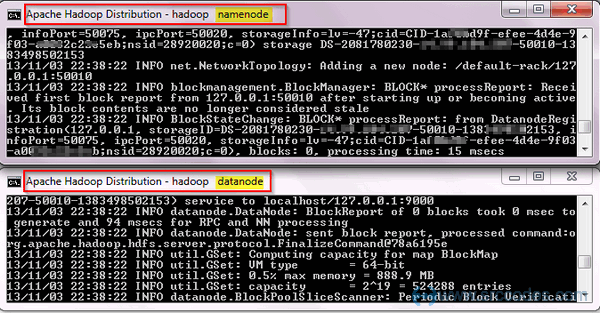
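Since formatting is a one-time step, a script that sets up the node may want to skip it when the namenode directory is already in use. The format log in this section shows the image file being saved under namenode\current, so the sketch below treats a non-empty `current` subdirectory as a sign that formatting has already happened. This is a heuristic assumption, and the function name `is_formatted` is hypothetical.

```python
import os


def is_formatted(name_dir):
    """Return True if `name_dir` already has a non-empty `current` subdirectory."""
    current = os.path.join(name_dir, "current")
    return os.path.isdir(current) and bool(os.listdir(current))
```

A setup script could run `hdfs namenode -format` only when `is_formatted(r"c:\hadoop\data\dfs\namenode")` returns False.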

Start HDFS (Namenode and Datanode)

Command Prompt

    C:\Users\abhijitg>cd c:\hadoop\sbin

    c:\hadoop\sbin>start-dfs

Two separate Command Prompt windows will be opened automatically to run Namenode and Datanode.

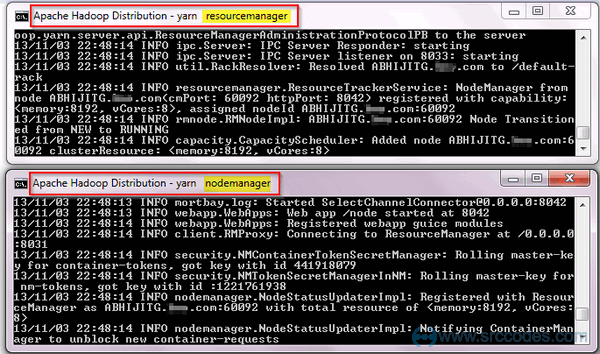

Start MapReduce aka YARN (Resource Manager and Node Manager)

Command Prompt

    C:\Users\abhijitg>cd c:\hadoop\sbin

    c:\hadoop\sbin>start-yarn
    starting yarn daemons

Similarly, two separate Command Prompt windows will be opened automatically to run Resource Manager and Node Manager.

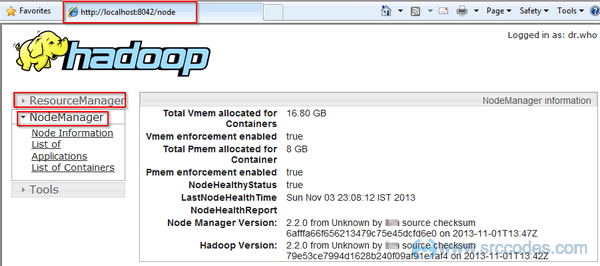

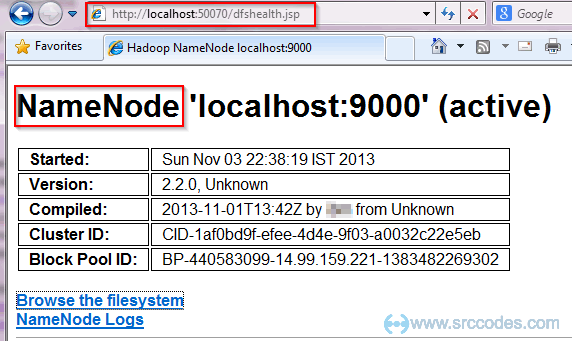

Verify Installation

If everything goes well, you will be able to open the Node Manager at http://localhost:8042 and the Namenode at http://localhost:50070. The Resource Manager web UI listens on port 8088 by default.

Node Manager: http://localhost:8042/
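The same check can be done without a browser by testing whether the web ports accept connections. The sketch below uses only Python's `socket` module; the function name `is_listening` is hypothetical. A successful connection shows that something is listening on the port, not that the page itself is healthy.

```python
import socket


def is_listening(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or the host is unreachable.
        return False
```

For the addresses above, `is_listening("localhost", 8042)` and `is_listening("localhost", 50070)` should both return True once the daemons have finished starting.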

Stop HDFS & MapReduce

Command Prompt

    C:\Users\abhijitg>cd c:\hadoop\sbin

    c:\hadoop\sbin>stop-dfs
    SUCCESS: Sent termination signal to the process with PID 876.
    SUCCESS: Sent termination signal to the process with PID 3848.

    c:\hadoop\sbin>stop-yarn
    stopping yarn daemons
    SUCCESS: Sent termination signal to the process with PID 5920.
    SUCCESS: Sent termination signal to the process with PID 7612.

    INFO: No tasks running with the specified criteria.
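When the stop scripts are run from another program, the captured output can be scanned to see which processes were signalled. The sketch below assumes the message wording shown in the output above; the function name `terminated_pids` is hypothetical.

```python
import re

PID_PATTERN = re.compile(
    r"SUCCESS: Sent termination signal to the process with PID (\d+)\."
)


def terminated_pids(output):
    """Return the process IDs mentioned in the SUCCESS lines of `output`."""
    return [int(pid) for pid in PID_PATTERN.findall(output)]
```

Applied to the stop-dfs output shown above, it returns the two process IDs 876 and 3848.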
