http://blog.stevensanderson.com/2009/08/24/writing-great-unit-tests-best-and-worst-practises/

This blog post is aimed at developers with at least a small amount of unit testing experience. If you’ve never written a unit test, please read an introduction and have a go at it first.

What’s the difference between a good unit test and a bad one? How do you learn how to write good unit tests? It’s far from obvious. Even if you’re a brilliant coder with decades of experience, your existing knowledge and habits won’t automatically lead you to write good unit tests, because it’s a different kind of coding and most people start with unhelpful false assumptions about what unit tests are supposed to achieve.

Most of the unit tests I see are pretty unhelpful. I’m not blaming the developer: Usually, he or she just got told to start unit testing, so they installed NUnit and started churning out [Test] methods. Once they saw red and green lights, they assumed they were doing it correctly. Bad assumption! It’s overwhelmingly easy to write bad unit tests that add very little value to a project while inflating the cost of code changes astronomically. Does that sound agile to you?

Unit testing is not about finding bugs

Now, I’m strongly in favour of unit testing, but only when you understand what role unit tests play within the Test Driven Development (TDD) process, and squash any misconception that unit tests have anything to do with testing for bugs.

In my experience, unit tests are not an effective way to find bugs or detect regressions. Unit tests, by definition, examine each unit of your code separately. But when your application is run for real, all those units have to work together, and the whole is more complex and subtle than the sum of its independently-tested parts. Proving that components X and Y both work independently doesn’t prove that they’re compatible with one another or configured correctly. Also, defects in an individual component may bear no relationship to the symptoms an end user would experience and report. And since you’re designing the preconditions for your unit tests, they won’t ever detect problems triggered by preconditions that you didn’t anticipate (for example, if some unexpected IHttpModule interferes with incoming requests).
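To make the point about components X and Y concrete, here is a minimal sketch in Python's `unittest`, standing in for NUnit. The two functions, `price_in_cents()` and `format_price()`, are hypothetical. Each one passes its own unit test, yet they are incompatible: one works in cents and the other assumes dollars.

```python
import unittest


def price_in_cents(product: str) -> int:
    """Component X (hypothetical): looks up a price, returned in cents."""
    catalogue = {"widget": 1999}
    return catalogue[product]


def format_price(amount_in_dollars: float) -> str:
    """Component Y (hypothetical): formats an amount it assumes is in dollars."""
    return f"${amount_in_dollars:.2f}"


class ComponentXTests(unittest.TestCase):
    def test_price_in_cents_for_widget_is_1999(self):
        self.assertEqual(price_in_cents("widget"), 1999)


class ComponentYTests(unittest.TestCase):
    def test_format_price_uses_two_decimal_places(self):
        self.assertEqual(format_price(19.99), "$19.99")


# Both test classes pass, but wiring X straight into Y is wrong:
# format_price(price_in_cents("widget")) returns "$1999.00", not "$19.99".
```

Only a test that runs both units together, the way the whole application does, can notice the mismatch.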

So, if you’re trying to find bugs, it’s far more effective to actually run the whole application together as it will run in production, just like you naturally do when testing manually. If you automate this sort of testing in order to detect breakages when they happen in the future, it’s called integration testing and typically uses different techniques and technologies than unit testing. Don’t you want to use the most appropriate tool for each job?

| Goal | Strongest technique |
|---|---|
| Finding bugs (things that don’t work as you want them to) | Manual testing (sometimes also automated integration tests) |
| Detecting regressions (things that used to work but have unexpectedly stopped working) | Automated integration tests (sometimes also manual testing, though time-consuming) |
| Designing software components robustly | Unit testing (within the TDD process) |

(Note: there’s one exception where unit tests do effectively detect bugs. It’s when you’re refactoring, i.e., restructuring a unit’s code but without meaning to change its behaviour. In this case, unit tests can often tell you if the unit’s behaviour has changed.)
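Here is a minimal sketch of that refactoring exception, using a hypothetical `word_count()` unit in Python's `unittest`. In practice you would keep only the refactored version. Both versions sit side by side here so you can see one specification pinning down the behaviour that the rewrite must preserve.

```python
import unittest


def word_count_original(text: str) -> int:
    """Original implementation (hypothetical): counts words with an explicit loop."""
    count = 0
    in_word = False
    for ch in text:
        if ch.isspace():
            in_word = False
        elif not in_word:
            in_word = True
            count += 1
    return count


def word_count_refactored(text: str) -> int:
    """Refactored implementation: intended to behave identically, with simpler code."""
    return len(text.split())


# Normally only one implementation exists at a time; listing both lets the
# same specification run against the code before and after the refactoring.
IMPLEMENTATIONS = [word_count_original, word_count_refactored]


class WordCountTests(unittest.TestCase):
    def test_counts_words_separated_by_any_whitespace(self):
        for impl in IMPLEMENTATIONS:
            with self.subTest(impl=impl.__name__):
                self.assertEqual(impl("  unit tests\tare\nspecs "), 4)

    def test_empty_string_has_zero_words(self):
        for impl in IMPLEMENTATIONS:
            with self.subTest(impl=impl.__name__):
                self.assertEqual(impl(""), 0)
```

If the refactored body accidentally changed behaviour (for example, by splitting only on single spaces), these tests would fail for it.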

Well then, if unit testing isn’t about finding bugs, what is it about?

I bet you’ve heard the answer a hundred times already, but since the testing misconception stubbornly hangs on in developers’ minds, I’ll repeat the principle. As TDD gurus keep saying, “TDD is a design process, not a testing process”. Let me elaborate: TDD is a robust way of designing software components (“units”) interactively so that their behaviour is specified through unit tests. That’s all!

Good unit tests vs bad ones

TDD helps you to deliver software components that individually behave according to your design. A suite of good unit tests is immensely valuable: it documents your design, and makes it easier to refactor and expand your code while retaining a clear overview of each component’s behaviour. However, a suite of bad unit tests is immensely painful: it doesn’t prove anything clearly, and can severely inhibit your ability to refactor or alter your code in any way.

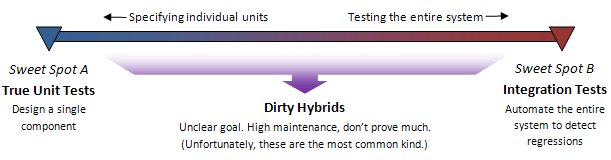

Where do your tests sit on the following scale?

Unit tests created through the TDD process naturally sit at the extreme left of this scale. They contain a lot of knowledge about the behaviour of a single unit of code. If that unit’s behaviour changes, so must its unit tests, and vice-versa. But they don’t contain any knowledge or assumptions about other parts of your codebase, so changes to other parts of your codebase don’t make them start failing (and if yours do, that shows they aren’t true unit tests). Therefore they’re cheap to maintain, and as a development technique, TDD scales up to any size of project.

At the other end of the scale, integration tests contain no knowledge about how your codebase is broken down into units, but instead make statements about how the whole system behaves towards an external user. They’re reasonably cheap to maintain (because no matter how you restructure the internal workings of your system, it needn’t affect an external observer) and they prove a great deal about what features are actually working today.

Anywhere in between, it’s unclear what assumptions you’re making and what you’re trying to prove. Refactoring might break these tests, or it might not, regardless of whether the end-user experience still works. Changing the external services you use (such as upgrading your database) might break these tests, or it might not, regardless of whether the end-user experience still works. Any small change to the internal workings of a single unit might force you to fix hundreds of seemingly unrelated hybrid tests, so they tend to consume a huge amount of maintenance time – sometimes in the region of 10 times longer than you spend maintaining the actual application code. And it’s frustrating because you know that adding more preconditions to make these hybrid tests go green doesn’t truly prove anything.

Tips for writing great unit tests

Enough vague discussion – time for some practical advice. Here’s some guidance for writing unit tests that sit snugly at Sweet Spot A on the preceding scale, and are virtuous in other ways too.

Make each test orthogonal (i.e., independent) to all the others

Any given behaviour should be specified in one and only one test. Otherwise, if you later change that behaviour, you’ll have to change multiple tests. The corollaries of this rule include:

Don’t make unnecessary assertions

Which specific behaviour are you testing? It’s counterproductive to Assert() anything that’s also asserted by another test: it just increases the frequency of pointless failures without improving unit test coverage one bit. This also applies to unnecessary Verify() calls – if it isn’t the core behaviour under test, then stop making observations about it! Sometimes, TDD folks express this by saying “have only one logical assertion per test”.

Remember, unit tests are a design specification of how a certain behaviour should work, not a list of observations of everything the code happens to do.
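Here is a minimal sketch of keeping each test to a single logical assertion, in Python's `unittest` standing in for NUnit. It uses a hypothetical `ShoppingCart` class. Each test asserts only the behaviour in its name. The comment in the first test marks the kind of extra observation that belongs in a separate test.

```python
import unittest


class ShoppingCart:
    """Hypothetical unit under test."""

    def __init__(self):
        self._items = {}

    def add(self, sku: str, quantity: int = 1) -> None:
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        self._items[sku] = self._items.get(sku, 0) + quantity

    def quantity_of(self, sku: str) -> int:
        return self._items.get(sku, 0)

    def total_items(self) -> int:
        return sum(self._items.values())


class ShoppingCartTests(unittest.TestCase):
    def test_add_same_sku_twice_accumulates_quantity(self):
        cart = ShoppingCart()
        cart.add("apple", 2)
        cart.add("apple", 3)
        # The one behaviour this test specifies:
        self.assertEqual(cart.quantity_of("apple"), 5)
        # Avoid piling on unrelated checks such as
        # self.assertEqual(cart.total_items(), 5); that behaviour has its own test.

    def test_add_non_positive_quantity_raises_value_error(self):
        with self.assertRaises(ValueError):
            ShoppingCart().add("apple", 0)

    def test_total_items_sums_quantities_across_skus(self):
        cart = ShoppingCart()
        cart.add("apple", 2)
        cart.add("pear")
        self.assertEqual(cart.total_items(), 3)
```

If `total_items()` later changes, only the test named after it should fail.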

Test only one code unit at a time

Your architecture must support testing units (i.e., classes or very small groups of classes) independently, not all chained together. Otherwise, you have lots of overlap between tests, so changes to one unit can cascade outwards and cause failures everywhere.

If you can’t do this, then your architecture is limiting your work’s quality – consider using Inversion of Control.
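One way to get that independence without any particular IoC container is plain constructor injection, the basic idea behind Inversion of Control. Here is a minimal sketch in Python's `unittest`, assuming a hypothetical `OrderService` that depends on a tax-rate provider. The test hands it a tiny fake, so no other real unit runs.

```python
import unittest
from typing import Protocol


class TaxRateProvider(Protocol):
    """Abstraction the unit depends on (hypothetical)."""

    def rate_for(self, region: str) -> float: ...


class OrderService:
    """Unit under test: receives its collaborator instead of constructing it."""

    def __init__(self, tax_rates: TaxRateProvider):
        self._tax_rates = tax_rates

    def total_with_tax(self, net: float, region: str) -> float:
        return round(net * (1 + self._tax_rates.rate_for(region)), 2)


class FixedTaxRate:
    """Test double: always returns the same rate, whatever the region."""

    def __init__(self, rate: float):
        self._rate = rate

    def rate_for(self, region: str) -> float:
        return self._rate


class OrderServiceTests(unittest.TestCase):
    def test_total_with_tax_applies_rate_from_provider(self):
        service = OrderService(FixedTaxRate(0.2))
        self.assertEqual(service.total_with_tax(100.0, "UK"), 120.0)
```

Because `OrderService` never creates its own provider, changes to the real tax-rate lookup cannot make this test fail.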

Mock out all external services and state

Otherwise, behaviour in those external services overlaps multiple tests, and state data means that different unit tests can influence each other’s outcome.

You’ve definitely taken a wrong turn if you have to run your tests in a specific order, or if they only work when your database or network connection is active.

(By the way, sometimes your architecture might mean your code touches static variables during unit tests. Avoid this if you can, but if you can’t, at least make sure each test resets the relevant statics to a known state before it runs.)
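Here is a minimal sketch using Python's standard `unittest.mock`, assuming a hypothetical `SignupService`. In production its user repository would be a database and its mailer a network service. Both are replaced with fresh `Mock` objects in every test, so the tests need neither a database nor a network connection and can run in any order.

```python
import unittest
from unittest.mock import Mock


class SignupService:
    """Unit under test (hypothetical): registers a user and sends a welcome email."""

    def __init__(self, users, mailer):
        self._users = users    # in production: a database-backed repository
        self._mailer = mailer  # in production: an email client talking to the network

    def sign_up(self, email: str) -> bool:
        if self._users.exists(email):
            return False
        self._users.add(email)
        self._mailer.send_welcome(email)
        return True


class SignupServiceTests(unittest.TestCase):
    def setUp(self):
        # New mocks per test: no state can leak from one test into another.
        self.users = Mock()
        self.mailer = Mock()
        self.service = SignupService(self.users, self.mailer)

    def test_sign_up_existing_email_returns_false(self):
        self.users.exists.return_value = True
        self.assertFalse(self.service.sign_up("a@example.com"))

    def test_sign_up_new_email_sends_welcome_email(self):
        self.users.exists.return_value = False
        self.service.sign_up("b@example.com")
        # Sending the email is the core behaviour here, so verifying it is justified.
        self.mailer.send_welcome.assert_called_once_with("b@example.com")
```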

Avoid unnecessary preconditions

Avoid having common setup code that runs at the beginning of lots of unrelated tests. Otherwise, it’s unclear what assumptions each test relies on, and it indicates that you’re not testing just a single unit.

An exception: sometimes I find it useful to have a common setup method shared by a very small number of unit tests (a handful at the most), but only if all those tests require all of those preconditions. This is related to the context-specification unit testing pattern, but it still risks becoming unmaintainable if you try to reuse the same setup code for a wide range of tests.
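Here is a minimal sketch of that exception in Python's `unittest`, assuming a hypothetical `Account` class. The fixture is named after a single context, and its `setUp` establishes only the precondition that both of its tests need. This is one possible way to express a context-specification style, not a canonical form of the pattern.

```python
import unittest


class Account:
    """Hypothetical unit under test."""

    def __init__(self, balance: int = 0):
        self.balance = balance

    def withdraw(self, amount: int) -> None:
        if amount > self.balance:
            raise ValueError("insufficient funds")
        self.balance -= amount

    def deposit(self, amount: int) -> None:
        self.balance += amount


class WhenAccountHasZeroBalance(unittest.TestCase):
    """Context: every test in this fixture needs exactly this precondition."""

    def setUp(self):
        self.account = Account(balance=0)

    def test_withdraw_raises_insufficient_funds(self):
        with self.assertRaises(ValueError):
            self.account.withdraw(1)

    def test_deposit_sets_balance_to_deposited_amount(self):
        self.account.deposit(25)
        self.assertEqual(self.account.balance, 25)
```

A test needing a different starting balance would go in its own small fixture, not be squeezed into this one.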

(By the way, I wouldn’t count pushing multiple data points through the same test (e.g., using NUnit’s [TestCase] API) as violating this orthogonality rule. The test runner may display multiple failures if something changes, but it’s still only one test method to maintain, so that’s fine.)
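Python's standard `unittest` has no built-in equivalent of NUnit's `[TestCase]` attribute, but `subTest` gives a similar effect: one test method to maintain, with each data point reported separately if it fails. Here is a sketch using a hypothetical `is_leap_year()` function.

```python
import unittest


def is_leap_year(year: int) -> bool:
    """Hypothetical unit under test (Gregorian calendar rules)."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


class LeapYearTests(unittest.TestCase):
    def test_is_leap_year_matches_gregorian_rules(self):
        # One test method; each tuple is a separate data point.
        cases = [
            (2024, True),   # divisible by 4
            (2023, False),  # not divisible by 4
            (1900, False),  # divisible by 100 but not by 400
            (2000, True),   # divisible by 400
        ]
        for year, expected in cases:
            with self.subTest(year=year):
                self.assertEqual(is_leap_year(year), expected)
```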


Don’t unit-test configuration settings

By definition, your configuration settings aren’t part of any unit of code (that’s why you extracted the setting out of your unit’s code). Even if you could write a unit test that inspects your configuration, it merely forces you to specify the same configuration in an additional redundant location. Congratulations: it proves that you can copy and paste!

Personally, I regard the use of things like filters in ASP.NET MVC as being configuration. Filters like [Authorize] or [RequiresSsl] are configuration options baked into the code. By all means write an integration test for the externally observable behaviour, but it’s meaningless to try unit testing for the filter attribute’s presence in your source code – it just proves that you can copy and paste again. That doesn’t help you to design anything, and it won’t ever detect any defects.
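The alternative the text points to is to keep the setting outside the unit and specify only the unit's behaviour. Here is a minimal sketch in Python's `unittest`, assuming a hypothetical `SessionPolicy` whose timeout comes from configuration. The tests pick their own timeout values, and nothing asserts what the production setting is.

```python
import unittest
from datetime import datetime, timedelta

# Configuration (hypothetical): lives outside the unit, e.g. in a settings file.
SESSION_TIMEOUT_MINUTES = 20


class SessionPolicy:
    """Unit under test: the timeout is passed in, not hard-coded."""

    def __init__(self, timeout: timedelta):
        self._timeout = timeout

    def is_expired(self, last_seen: datetime, now: datetime) -> bool:
        return now - last_seen >= self._timeout


def build_policy_from_config() -> SessionPolicy:
    # Wiring code (hypothetical): where the setting meets the unit.
    # Its observable effect is a job for integration tests, not unit tests.
    return SessionPolicy(timedelta(minutes=SESSION_TIMEOUT_MINUTES))


class SessionPolicyTests(unittest.TestCase):
    # No test like assertEqual(SESSION_TIMEOUT_MINUTES, 20): that would only
    # repeat the setting in a second place.

    def test_is_expired_once_timeout_has_elapsed(self):
        policy = SessionPolicy(timedelta(minutes=5))
        start = datetime(2024, 1, 1, 12, 0)
        self.assertTrue(policy.is_expired(start, start + timedelta(minutes=5)))

    def test_is_not_expired_before_timeout(self):
        policy = SessionPolicy(timedelta(minutes=5))
        start = datetime(2024, 1, 1, 12, 0)
        self.assertFalse(policy.is_expired(start, start + timedelta(minutes=4)))
```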


Name your unit tests clearly and consistently

If you’re testing how ProductController’s Purchase action behaves when stock is zero, then maybe have a test fixture class called PurchasingTests with a unit test called ProductPurchaseAction_IfStockIsZero_RendersOutOfStockView(). This name describes the subject (ProductController’s Purchase action), the scenario (stock is zero), and the result (renders “out of stock” view). I don’t know whether there’s an existing name for this naming pattern, though I know others follow it. How about S/S/R?

Avoid non-descriptive unit test names such as Purchase() or OutOfStock(). Maintenance is hard if you don’t know what you’re trying to maintain.
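The subject/scenario/result pattern carries over to Python's `unittest`, where test method names must start with `test` to be discovered. Here is a sketch with a hypothetical, framework-free `ProductController` standing in for the ASP.NET MVC controller in the example.

```python
import unittest
from dataclasses import dataclass


@dataclass
class ViewResult:
    """Hypothetical stand-in for an MVC view result."""
    view_name: str


class ProductController:
    """Hypothetical controller; stock levels are passed in to keep tests unit-level."""

    def __init__(self, stock_levels: dict):
        self._stock = stock_levels

    def purchase(self, product_id: str) -> ViewResult:
        if self._stock.get(product_id, 0) <= 0:
            return ViewResult("OutOfStock")
        self._stock[product_id] -= 1
        return ViewResult("PurchaseConfirmed")


class PurchasingTests(unittest.TestCase):
    # Subject: purchase action / Scenario: stock is zero / Result: out-of-stock view
    def test_product_purchase_action_if_stock_is_zero_renders_out_of_stock_view(self):
        controller = ProductController({"sku-1": 0})
        self.assertEqual(controller.purchase("sku-1").view_name, "OutOfStock")

    def test_product_purchase_action_if_in_stock_renders_confirmation_view(self):
        controller = ProductController({"sku-1": 3})
        self.assertEqual(controller.purchase("sku-1").view_name, "PurchaseConfirmed")
```

When one of these fails, the runner's report alone tells you which behaviour broke.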

Conclusion

Without doubt, unit testing can significantly increase the quality of your project. Many in our industry claim that any unit tests are better than none, but I disagree: a test suite can be a great asset, or it can be a great burden that contributes little. It depends on the quality of those tests, which seems to be determined by how well their developers have understood the goals and principles of unit testing.

By the way, if you want to read up on integration testing (to complement your unit testing skills), check out projects such as WatiN, Selenium, and even the ASP.NET MVC integration testing helper library I published recently.
