From:

A major issue stands in the way of Java scalability today, and Cloudera's Eva Andreasson wants Java developers to stop ignoring it. Here she makes the case for why true Java application scalability will require a dramatic overhaul in how we think about Java virtual machines, and how developers and vendors build them.

Most developers approach JVM performance issues as they surface, which means spending a lot of time fine-tuning application-level bottlenecks. If you've been reading this series so far then you know that I see the problem more systemically. I say it's JVM technology that limits the scalability of enterprise Java applications. Before we go further, let me point out some leading facts:

- Modern hardware servers offer huge amounts of memory.

- Distributed systems require huge amounts of memory, the demand is always increasing.

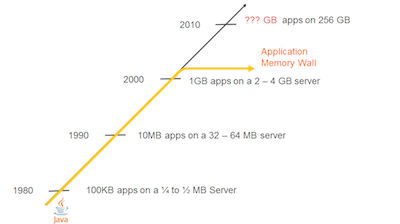

- A normal heap size for a Java application instance currently is between 1 and 4 GB -- far below what most servers can manage and what most distributed applications require. This is sometimes known as the Java application memory wall, as illustrated in Figure 1.

The time graph in Figure 1, created by Gil Tene, shows a history of memory usage on Java application servers and what was a normal heap size for Java applications at each point in time. (See Resources.)

Figure 1. The Java application memory wall from 1980 to 2010 (click to enlarge)

Image copyright Azul Systems.

This brings us to the JVM performance conundrum, which goes something like this:

- If you provide too little memory to an application it will run out of memory. The JVM will not be able to free up memory space at the rate that your application needs it. Push too hard and eventually the JVM will throw an

OutOfMemoryError and shut down completely. So you have to provide more memory to your applications. - If you increase the heap size for a response-time-sensitive application, the heap will eventually become fragmented. This is unavoidable if you don't restart your system or custom-architect your application. When fragmentation happens, an application can hang for anywhere from 100 millisecond to 100 seconds depending on the application, the heap size, and other JVM tuning parameters.

Most discourse about JVM pauses focuses on average or target pauses. What isn't discussed as much is the worst-case pause time that happens when the whole heap needs to be compacted. A worst-case pause time in a production environment is around one second per gigabyte of live data in the heap.

JVM performance optimization: Read the series

- Part 1: Overview

- Part 2: Compilers

- Part 3: Garbage collection

- Part 4: Concurrently compacting GC

- Part 5: Scalability

A two- to four-second pause is not acceptable for most enterprise applications, so Java application instances are stalled out at 2 to 4 GB, despite their need for more memory. On some 64-bit systems, with lots of JVM tuning for scale, it is possible to run 16 GB or even 20 GB heaps and meet typical response-time SLAs. But compared to where Java heap sizes should be today, we're still way off. The limitation lies in the JVM's inability to handle fragmentation without a stop-the-world GC. As a result, Java application developers are stuck doing two tasks that most of us deplore:

- Architecting or modeling deployments in chopped-up large instance pools, even though it leads to a horrible operations monitoring and management situation.

- Tuning and re-tuning the JVM configuration, or even the application, to "avoid" (meaning postpone) the worst-case scenario of a stop-the-world compaction pause. The most that developers can hope for is that when a pause happens, it won't be during a peak load time. This is what I call a Don Quixote task, of chasing an impossible goal.

Now let's dig a little deeper into Java's scalability problem.

Over-provisioning and over-instancing Java deployments

To be able to utilize all of the hardware memory available in a large box, many Java teams choose to scale their application deployment through multiple instances instead of within a single instance or a few larger instances. While running 16 application instances on a single box is a good way to use all of the available memory, it doesn't address the cost of managing and monitoring that many instances, especially when you've deployed multiple servers the same way.

Another problem designed as a solution is the dramatic precautions that teams take to stay up (and not go down) during peak load times. This means configuring heap sizes for worst-case peak loads. Most of that memory isn't needed for everyday loads, so it becomes an expensive waste of resources. In some cases, teams will go even further, deploying not more than two or three instances per box. This is exceptionally wasteful, both economically and in terms of environmental impact, particularly during non-peak hours.

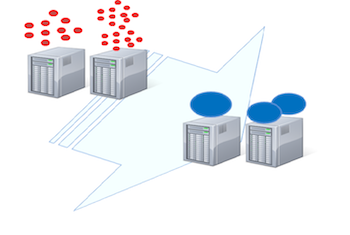

Now let's compare architectures. On the left side of Figure 2 we see many small-instance clusters, which are harder to manage and maintain. To the right are fewer, larger instances handling the same load. Which solution is more economical?

Figure 2. Fewer larger instances (click to enlarge)

Image copyright Azul Systems.

As I discussed in my last article, concurrent compaction is a truly viable solution to this problem. It makes fewer, larger instances possible and removes the scalability limitations commonly associated with the JVM. Currently only Azul's Zing JVM offers concurrent compaction, and Zing is a server-side JVM, not yet in the hands of developers. It would be terrific to see more developers take on Java's scalability challenge at the JVM level.

Since performance tuning is still our primary tool for managing Java scalability issues, let's look at some of the main tuning parameters and what we're actually able to achieve with them.

Tuning parameters -- some examples

The most well-known JVM performance option, which most Java developers specify on their command-line when launching a Java application, is -Xmx. This option lets you specify how much memory is allocated to your Java heap, although results will vary by JVM.

Some JVMs include the memory needed for internal structures (such as compiler threads, GC structures, code cache, and so on) in the -Xmx setting, while others add more memory outside of it. As a result, the size of your Java processes may not always reflect your -Xmx setting.

Once you've reached your maximum memory threshold, you can't return that memory to the system. You also can't grow beyond it; it is a fixed upper limit. If you don't get your -Xmx setting "right" -- that is, if the application's object-allocation rate, the lifetime of your objects, or the size of your objects exceeds your JVM's memory configuration settings -- you will run out of memory. Then the garbage collector will throw an out-of-memory error and your application will shut down.

If your application is struggling with memory availability, you currently don't have many other options than to restart it with a larger -Xmx size. To avoid downtime and frequent restarts, most enterprise production environments tend to tune for the worst-case load, hence over-provisioning.

Tip: Tune for production load

A common error for Java developers is to tune heap memory settings in a lab environment, forgetting to re-tune it for production load. The two loads can differ significantly, so always re-tune for your production load.

Tuning generational garbage collectors

Some other common tuning options for the JVM are -Xns and -XX:NewSize. These options are used to tune the size of young generation (or nursery) in your JVM. They specify the amount of the heap that should be dedicated for new allocation ingenerational garbage collectors.

Most Java developers will try to tune the nursery size based on a lab environment, which means risking a production-load fail. It's common to set a third or half of the heap as nursery, which is a bit of glue that seems to work most of the time. It isn't based on a real rule, however, as the "right" size is actually application dependent. You're better off investigating the actual promotion rate and the actual size of your long-living objects, and then setting the nursery size to be as large as possible without causing promotion failure in the old space of the heap. (Promotion failure is a sign that the old space is too small, and will trigger a number of garbage collection actions that could result in an out-of-memory error. See JVM performance optimization, Part 2 for an in-depth discussion of generational garbage collection and heap sizing.)

Another nursery-related option is -XX:SurvivorRatio. This option lets you set thepromotion rate, meaning the life-length an object has to survive before getting promoted to old space. To set this option "right" you will have to know the frequency of young-space collection and be able to estimate for how long new objects will be referenced in your application. Note that the "right" setting for these options depends on the allocation rate, so setting them based on the lab environment will cost you in production.

Tuning concurrent garbage collectors

If you have a pause-sensitive application, your best bet is to use a concurrent garbage collector -- at least until someone invents something better. Although parallel approaches give excellent throughput benchmark scores and are often used for JVM comparative publications, parallel GC does not benefit response times. Concurrent GC is currently the only way to achieve some kind of consistency and the least number of stop-the-world interruptions. Different JVMs supply different options for setting a concurrent garbage collector. For the Oracle JVM it's -XX:+UseConcMarkSweepGC. More recently G1 has become the default for the Oracle JVM, which uses a concurrent approach.

GC algorithms compared

See JVM performance optimization, Part 2 to learn about the strengths and weaknesses of different garbage collection algorithms for various application scenarios.

Performance tuning is not the solution

You might have noticed that I put quotation marks around each use of the word rightin my discussion about tuning parameters. That's because my experience shows that when it comes to JVM performance tuning, there is no right setting. Assigning a value to one of your JVM parameters means setting it for a specific application scenario. Given that the application scenario changes, JVM performance tuning is a stop-gap solution at best.

Take the heap size as an example: If 2 GB looks good with 200,000 concurrent users, it probably won't be enough with 400,000 users. Or take the survivor ratio, or the promotion rate: A setting that looked good for a test case had a continuously growing load up to 10,000 transactions per millisecond, but what happens when the pressure increases to 50,000 for the same timeframe in production?

Most enterprise application loads are dynamic, which is why Java is indeed an excellent language for the enterprise. Java's dynamic memory management and compilation means that the more dynamic you keep your configuration environment, the better it is for language execution. Consider the two listings below. Do either of these example look similar to your production environment's startup command line?

Listing 1. Startup options for an enterprise Java application (1)

>java -Xmx12g -XX:MaxPermSize=64M -XX:PermSize=32M -XX:MaxNewSize=2g

-XX:NewSize=1g -XX:SurvivorRatio=16 -XX:+UseParNewGC

-XX:+UseConcMarkSweepGC -XX:MaxTenuringThreshold=0

-XX:CMSInitiatingOccupancyFraction=60 -XX:+CMSParallelRemarkEnabled

-XX:+UseCMSInitiatingOccupancyOnly -XX:ParallelGCThreads=12

-XX:LargePageSizeInBytes=256m вА¶

Listing 2. Startup options for an enterprise Java application (2)

>java --Xms8g --Xmx8g --Xmn2g -XX:PermSize=64M -XX:MaxPermSize=256M

-XX:-OmitStackTraceInFastThrow -XX:SurvivorRatio=2 -XX:-UseAdaptiveSizePolicy -XX:+UseConcMarkSweepGC

-XX:+CMSConcurrentMTEnabled -XX:+CMSParallelRemarkEnabled -XX:+CMSParallelSurvivorRemarkEnabled

-XX:CMSMaxAbortablePrecleanTime=10000 -XX:+UseCMSInitiatingOccupancyOnly

-XX:CMSInitiatingOccupancyFraction=63 -XX:+UseParNewGC --Xnoclassgc вА¶

The values are very different for these two real-world JVM startup configurations, which makes sense because they're for two different Java applications. Both are "right" in the sense that they have been tuned to suit particular enterprise application characteristics. Both achieved great performance in the lab but eventually failed in production. The config in Listing 1 failed immediately because the test cases didn't represent the dynamic load in production. The config in Listing 2 failed following an update because new features changed the application's production usage pattern. In both cases, the development team was blamed. But were they really to blame?

Do 'workarounds' work?

Some enterprises have gone to the extreme of "recycling" object space by exactly measuring how large transaction objects are and "cutting" their architecture to fit that size. This is one way to reduce fragmentation and survive perhaps for an entire day of trading without compaction. Another option is to use an application design that assures objects are only referenced for a very short while, so that they never are promoted, thus preventing old-space collection and full compaction scenarios. Both approaches work, but put unnecessary strain on the developers and the application design.

Who is responsible for Java application performance?

The second a portal application hits a campaign peak load, it fails. A trading application fails every time the market falls or rises. Ecommerce sites go under during the holiday shopping season. These are all real-world failures that often result from tuning JVM parameters for specific application needs. When money is lost, development teams get blamed, and in some cases they should be. But what about the responsibility of JVM vendors?

It makes sense for JVM vendors to prioritize tuning parameters, at least in the short term. New tuning options benefit specific, emerging enterprise application scenarios, which meets demand. More tuning options also reduce the JVM support team's load by pushing the responsibility for application performance onto developers, although my guess is that this causes even more support load in the long run. Tuning options also work to postpone some worst-case scenarios, but not indefinitely.

There's no doubt that hard work goes into developing JVM technology. It's also true that only application implementers know the specific needs of their application. But it will always be impossible for application developers to predict dynamic variations in load time. It is past time for JVM vendors to address Java's performance and scalability issues where they are most resolvable, not with new tuning parameters, but with new and better garbage collection algorithms. Better yet, imagine what would happen if the OpenJDK community came together to rethink garbage collection!

Benchmarking JVM performance

Tuning parameters are sometimes used as a tool in the competition between JVM vendors, because different tunings can improve performance benchmarks in predictable environments. The concluding article in this series will investigate benchmarks as a measure of JVM performance.

A challenge to JVM developers

True enterprise scalability demands that Java virtual machines be able to respond with dynamic flexibility to changes in application load. That's the key to sustainable performance in throughput and response times. Only JVM developers can make that kind of innovation happen, so I propose it is time that we -- the Java developer community -- take on the real Java scalability challenge:

- On tuning: The only question for a given application should be, how much memory does it need? After that, it should be the job of the JVM to adapt to dynamic changes in application load or usage patterns.

- On instance count vs. instance scalability: Modern servers have tons of memory, so why can't JVM instances make efficient use of it? The split deployment model, with its dependence on many small instances, is wasteful, both economically and environmentally. Modern JVMs should support sustainable IT.

- On real-world performance and scalability: Enterprises should not have to go to extremes to get the performance and scalability they need for Java applications. It is time for JVM vendors and the OpenJDK community to tackle Java's scalability problem at its core and eliminate stop-the-world operations.

Conclusion to Part 5

Java developers shouldn't ever have to spend painful time understanding how to configure a JVM for Java performance. If the JVM actually did its job, and if there were more garbage collection algorithms that could do concurrent compaction, the JVM would not limit Java scalability. There would be more brainpower out there innovating interesting Java applications instead of endlessly tuning JVMs. I challenge JVM developers and vendors to do what's needed and (to cite Oracle) "help make the future Java!"

зЫЄеЕ≥жО®иНР

Dive into JVM garbage collection logging, monitoring, tuning, and tools Explore JIT compilation and Java language performance techniques Learn performance aspects of the Java Collections API and get ...

гАРJavaдЄ≠зЪД`java.net.BindException: Address already in use: JVM_Bind`еЉВеЄЄгАС еЬ®JavaзЉЦз®ЛдЄ≠пЉМељУдљ†е∞ЭиѓХеРѓеК®дЄАдЄ™жЬНеК°еЩ®зЂѓеЇФзФ®пЉМе¶ВTomcatпЉМжИЦиАЕдїїдљХйЬАи¶БзЫСеРђзЙєеЃЪзЂѓеП£зЪДжЬНеК°жЧґпЉМеПѓиГљдЉЪйБЗеИ∞`java.net.BindException: ...

JVM иЗіеСљйФЩиѓѓжЧ•ењЧиѓ¶иІ£ JVM иЗіеСљйФЩиѓѓжЧ•ењЧжШѓ Java иЩЪжЛЯжЬЇпЉИJVMпЉЙеЬ®йБЗеИ∞иЗіеСљйФЩиѓѓжЧґзФЯжИРзЪДжЧ•ењЧжЦЗдїґпЉМзФ®дЇОиЃ∞ељХйФЩиѓѓдњ°жБѓеТМз≥їзїЯдњ°жБѓгАВиѓ•жЧ•ењЧжЦЗдїґеПѓдї•еЄЃеК©еЉАеПСиАЕеТМзїіжК§иАЕењЂйАЯеЃЪдљНеТМиІ£еЖ≥йЧЃйҐШгАВ жЦЗдїґе§іпЉЪжЦЗдїґе§іжШѓйФЩиѓѓжЧ•ењЧзЪД...

еЖЕеЃєж¶Ви¶БпЉЪжЬђжЦЗж°£иѓ¶зїЖдїЛзїНдЇЖJavaиЩЪжЛЯжЬЇпЉИJVMпЉЙзЪДзЫЄеЕ≥зЯ•иѓЖзВєпЉМжґµзЫЦJavaеЖЕе≠Шж®°еЮЛгАБеЮГеЬЊеЫЮжФґжЬЇеИґеПКзЃЧж≥ХгАБеЮГеЬЊжФґйЫЖеЩ®гАБеЖЕе≠ШеИЖйЕНз≠ЦзХ•гАБиЩЪжЛЯжЬЇз±їеК†иљљжЬЇеИґеТМJVMи∞ГдЉШз≠ЙеЖЕеЃєгАВй¶ЦеЕИйШРињ∞дЇЖJavaдї£з†БзЪДзЉЦиѓСеТМињРи°МињЗз®ЛпЉМдї•еПКJVMзЪД...

JVM жЇРдї£з†Б part07

JVM жЇРдї£з†Б part06

[jvm]жЈ±еЕ•JVM(дЄА):дїО"abc"=="abc"зЬЛjavaзЪДињЮжО•ињЗз®ЛжФґиЧП дЄАиИђиѓіжЭ•пЉМжИСдЄНеЕ≥ж≥®javaеЇХе±ВзЪДдЄЬи•њпЉМињЩжђ°жШѓдЄАдЄ™жЬЛеПЛйЧЃеИ∞дЇЖпЉМж≥®жДПдЄНеЕЙжШѓ System.out.println("abc"=="abc");ињФеЫЮtrueпЉМ System.out.println(("a"+"b"+"c")....

JVM жЇРдї£з†Бpart1 пЉИзЬЛжИСзЪДдЄКдЉ†иЃ∞ељХ жЬЙ1--9 дЄ™partпЉЙ

еЬ®myeclipseдЄ≠е∞ЖhtmlжЦЗдїґжФєжИРjspжЦЗдїґжЧґmyeclipseеН°дљПпЉЫе∞ЖдєЛеЙНзЪДдїїеК°еЕ≥жОЙпЉЫеЖНжЙУеЉАжЧґе§Ъжђ°йГ®зљ≤й°єзЫЃзЪДжЧґеАЩжК•йФЩ

JVM жЇРдї£з†Б part09

JVM жЇРдї£з†Б part08

JVM жЇРдї£з†Б part05

JVMйЭҐиѓХиµДжЦЩгАВ JVMзїУжЮДпЉЪз±їеК†иљљеЩ®пЉМжЙІи°МеЉХжУОпЉМжЬђеЬ∞жЦєж≥ХжО•еП£пЉМжЬђеЬ∞еЖЕе≠ШзїУжЮДпЉЫ еЫЫе§ІеЮГеЬЊеЫЮжФґзЃЧж≥ХпЉЪе§НеИґзЃЧж≥ХгАБж†ЗиЃ∞-жЄЕйЩ§зЃЧж≥ХгАБж†ЗиЃ∞-жХізРЖзЃЧж≥ХгАБеИЖдї£жФґйЫЖзЃЧж≥Х дЄГе§ІеЮГеЬЊеЫЮжФґеЩ®пЉЪSerialгАБSerial OldгАБParNewгАБCMSгАБParallel...

jvm-npm, йАВзФ®дЇОJVMзЪДеЕЉеЃєCommonJSж®°еЭЧеК†иљљеЩ® JVMдЄКJavascriptињРи°МжЧґдЄ≠зЪДNPMж®°еЭЧеК†иљљжФѓжМБгАВ еЃЮзО∞еЯЇдЇО http://nodejs.org/api/modules.htmlпЉМеЇФиѓ•еЃМеЕ®еЕЉеЃєгАВ ељУзДґпЉМдЄНеМЕжЛђеЃМжХізЪДnode.js APIпЉМеЫ†ж≠§дЄНи¶БжЬЯжЬЫдЊЭиµЦдЇОеЃГзЪД...

java hotspotжЇРз†БжѓФиЊГJVMеЃЮзО∞зЪДжАІиГљ ињЩжШѓжИСзЪДжЦЗзЂ†зЪДжЇРдї£з†БгАВ жАОдєИиЈС Java 8еЯЇеЗЖrun-benchmark-java-8.sh Java 11еЯЇеЗЖrun-benchmark-java-11.sh

JVM дЄНеЃЙеЕ®еЃЮзФ®з®ЛеЇПеЇУ ињЩй°єеЈ•дљЬж≠£еЬ®ињЫи°МдЄ≠пЉМе∞ЪжЬ™ж≠£з°ЃеСљеРНгАВ дЄУдЄЇе§ІжХ∞жНЃеИЖжЮРиЃЊиЃ°зЪДйЂШжАІиГљеЇУпЉМеМЕжЛђпЉЪ жШЊеЉПпЉИе†ЖеЖЕеТМе†Же§ЦпЉЙеЖЕе≠ШеИЖйЕНеЩ®еПѓйБњеЕН JVM еЮГеЬЊжФґйЫЖеєґжПРйЂШзЉУе≠Ше±АйГ®жАІ й¶ЖиЧПеЇУ еПѓдї•иґЕеЗЇ 2GB йЩРеИґзЪДжХ∞зїДжКљи±°пЉИеЕЈжЬЙ...

гАРж†ЗйҐШгАС"jvm_session_demo:jvm дЉЪиѓЭжЉФз§Ї"дЄїи¶БеЕ≥ж≥®зЪДжШѓJavaиЩЪжЛЯжЬЇпЉИJVMпЉЙеЬ®е§ДзРЖдЉЪиѓЭзЃ°зРЖдЄ≠зЪДеЇФзФ®гАВдЉЪиѓЭжШѓWebеЇФзФ®з®ЛеЇПдЄ≠дЄАдЄ™еЕ≥йФЃзЪДж¶ВењµпЉМеЃГеЕБиЃЄжЬНеК°еЩ®иЈЯиЄ™зФ®жИЈзЪДзКґжАБеТМи°МдЄЇпЉМзЙєеИЂжШѓеЬ®зКґжАБжЧ†зКґжАБзЪДHTTPеНПиЃЃдЄ≠гАВJVMеЬ®ж≠§...

еЬ®JavaдЄЦзХМдЄ≠пЉМJVMпЉИJava Virtual MachineпЉЙжШѓињРи°МжЙАжЬЙJavaз®ЛеЇПзЪДж†ЄењГпЉМеЃГиіЯиі£иІ£жЮРе≠ЧиКВз†БгАБзЃ°зРЖеЖЕе≠Шдї•еПКжЙІи°МзЇњз®ЛгАВеѓєдЇОе§ІеЮЛдЉБдЄЪзЇІеЇФзФ®пЉМJVMзЪДжАІиГљзЫСжОІдЄОи∞ГдЉШжШѓиЗ≥еЕ≥йЗНи¶БзЪДпЉМеЫ†дЄЇињЩзЫіжО•ељ±еУНеИ∞еЇФзФ®зЪДеУНеЇФйАЯеЇ¶гАБз®≥еЃЪжАІеТМ...

"JVM еПВжХ∞и∞ГдЉШ-optimization-jvm.zip"ињЩдЄ™еОЛзЉ©еМЕеЊИеПѓиГљжШѓеМЕеРЂдЇЖдЄАе•ЧеЕ≥дЇОJVMи∞ГдЉШзЪДиµДжЦЩжИЦиАЕдї£з†Бз§ЇдЊЛпЉМеПѓиГљеМЕжЛђжЦЗж°£гАБжХЩз®ЛжИЦеЈ•еЕЈгАВиЩљзДґеЕЈдљУзЪДжЦЗдїґеЖЕеЃєжЬ™зїЩеЗЇпЉМдљЖжИСдїђеПѓдї•ж†єжНЃж†ЗйҐШеТМжППињ∞жЭ•иЃ®иЃЇJVMи∞ГдЉШзЪДдЄАдЇЫеЕ≥йФЃзЯ•иѓЖзВє...

jvm-libp2p зФ®KotlinзЉЦеЖЩзЪДJVMзЪДlibp2pеЃЮзО∞ :fire: :warning: ињЩжШѓзєБйЗНзЪДеЈ•дљЬпЉБ :warning: иЈѓзЇњеЫЊ жЮДеїЇjvm-libp2pзЪДеЈ•дљЬеИЖдЄЇдЄ§дЄ™йШґжЃµпЉЪ жЬАе∞ПйШґжЃµпЉИv0.xпЉЙпЉЪжЧ®еЬ®жПРдЊЫжЬАеЯЇжЬђзЪДжЬАе∞Пе†Жж†ИпЉМдї•еЕБиЃЄеЯЇдЇОJVMзЪД俕姙еЭК2.0...