Once HDFS is installed, open its status page in a browser (IE, for example) to check disk usage: http://192.168.33.10:50070/dfshealth.jsp

step 1. Add a user for Hadoop, and remember the password you set

- $ sudo addgroup hadoop

- $ sudo adduser --ingroup hadoop hduser

step 2. Install and configure SSH

Hadoop's start and stop scripts log in to each node over SSH, so first install an SSH server

- $ sudo apt-get install ssh

Next, set up passwordless login. After running the su command, enter the password you set earlier

- $ su - hduser

- $ ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

- $ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

- $ ssh localhost

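The commands above generate the SSH key pair and authorize it. Once they finish, log in to confirm that no password is required. (The first login asks for confirmation; from the second login on you go straight into the system.)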

- ~$ ssh localhost

- ~$ exit

- ~$ ssh localhost

- ~$ exit
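If ssh localhost still asks for a password after these steps, one common cause is file permissions: with OpenSSH's default StrictModes setting, sshd ignores authorized_keys when the .ssh directory or the file is writable by other users. The sketch below tightens both. fix_ssh_perms is a hypothetical helper name, not part of OpenSSH or Hadoop.

```shell
# fix_ssh_perms [DIR]: restrict DIR and DIR/authorized_keys to their owner.
# Hypothetical helper; DIR defaults to ~/.ssh.
fix_ssh_perms() {
    dir="${1:-$HOME/.ssh}"
    chmod 700 "$dir" || return 1
    if [ -f "$dir/authorized_keys" ]; then
        chmod 600 "$dir/authorized_keys" || return 1
    fi
}
```

Run it as hduser with no arguments (fix_ssh_perms), then try ssh localhost again.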

step 3. Install Java

I used an offline tarball, extracted to /opt/java/jdk1.7.0

- $ tar zxvf jdk1.7.0.tar.gz

- $ sudo mkdir -p /opt/java

- $ sudo mv jdk1.7.0 /opt/java/

Configure the environment

- $ sudo gedit /etc/profile

Add the following lines before the "umask 022" line:

export JAVA_HOME=/opt/java/jdk1.7.0

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib

export PATH=$PATH:$JRE_HOME/bin:$JAVA_HOME/bin
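Before moving on, it can help to confirm that JAVA_HOME really points at a JDK. The sketch below assumes only that a JDK keeps its launcher at bin/java under its home directory; check_java_home is a hypothetical helper name.

```shell
# check_java_home DIR: succeed only if DIR/bin/java exists and is executable.
check_java_home() {
    [ -n "$1" ] && [ -x "$1/bin/java" ]
}

# Example (after logging in again so that the profile is re-read):
#   check_java_home "$JAVA_HOME" && "$JAVA_HOME/bin/java" -version
```

A failing check usually means the tarball was unpacked somewhere other than the path exported above.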

step 4. Download and install Hadoop

Download hadoop-1.0.2 and extract the archive under /opt.

- $ tar zxvf hadoop-1.0.2.tar.gz

- $ sudo mv hadoop-1.0.2 /opt/

- $ sudo chown -R hduser:hadoop /opt/hadoop-1.0.2

step 5. Configure hadoop-env.sh

Enter the hadoop directory for further configuration. The first file to modify is hadoop-env.sh, which needs three environment variables: JAVA_HOME, HADOOP_HOME, and PATH.

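/opt$ cd hadoop-1.0.2/

Append the following lines to conf/hadoop-env.sh. The delimiter is quoted ('EOF') so that $PATH and $HADOOP_HOME are written to the file literally:

/opt/hadoop-1.0.2$ cat >> conf/hadoop-env.sh << 'EOF'
export JAVA_HOME=/opt/java/jdk1.7.0
export HADOOP_HOME=/opt/hadoop-1.0.2
export PATH=$PATH:$HADOOP_HOME/bin
EOF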
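One detail of the here-document is easy to miss. With an unquoted delimiter (<< EOF) the shell expands variables while it writes the file, so the file records whatever values they had at that moment; with a quoted delimiter (<< 'EOF') the text is copied verbatim and expanded only when the file is later sourced. The sketch below shows the difference on a scratch file. DEMO_HOME, PATH_A, and PATH_B are placeholder names used only for this illustration.

```shell
demo=$(mktemp)            # scratch file standing in for conf/hadoop-env.sh
DEMO_HOME=/first          # placeholder variable for the demonstration

# Unquoted delimiter: $DEMO_HOME is expanded now, so "/first" is frozen in.
cat >> "$demo" << EOF
export PATH_A=$DEMO_HOME/bin
EOF

# Quoted delimiter: the line is written exactly as typed.
cat >> "$demo" << 'EOF'
export PATH_B=$DEMO_HOME/bin
EOF

cat "$demo"
# export PATH_A=/first/bin
# export PATH_B=$DEMO_HOME/bin
```

For hadoop-env.sh the quoted form is the safer choice, since HADOOP_HOME is normally not yet set in the shell that writes the file.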

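One thing puzzles me here: JAVA_HOME is already set in /etc/profile, so why does it have to be set again? (A likely explanation: the start scripts launch the daemons over SSH in non-login shells, which do not read /etc/profile, so Hadoop takes JAVA_HOME from hadoop-env.sh.)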

step 6. Configure the Hadoop configuration files

Edit $HADOOP_HOME/conf/core-site.xml

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/tmp/hadoop/hadoop-${user.name}</value>

</property>

</configuration>

Edit $HADOOP_HOME/conf/hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

Edit $HADOOP_HOME/conf/mapred-site.xml

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>localhost:9001</value>

</property>

</configuration>
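After editing the three files, a quick way to double-check what was saved is to print a property back out. The sketch below is a plain-text lookup, not an XML parser: it assumes each <name> and <value> element sits on its own line, as in the listings above. get_prop is a hypothetical helper name.

```shell
# get_prop FILE NAME: print the <value> that follows <name>NAME</name> in FILE.
# Assumes one <name> or <value> element per line; not a general XML parser.
get_prop() {
    awk -v key="$2" '
        /<name>/  { name = $0; gsub(/.*<name>|<\/name>.*/, "", name) }
        /<value>/ { val = $0;  gsub(/.*<value>|<\/value>.*/, "", val)
                    if (name == key) print val }
    ' "$1"
}

# Example: get_prop "$HADOOP_HOME/conf/core-site.xml" fs.default.name
```

An empty result means the property is absent from that file (or the file is laid out differently from what this sketch assumes).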

step 7. Format HDFS

The single-node test environment is now configured. Before starting the Hadoop services, format the HDFS namenode (only the namenode is formatted, not the secondarynamenode or tasktracker).

- $ cd /opt/hadoop-1.0.2

- $ source /opt/hadoop-1.0.2/conf/hadoop-env.sh

- $ hadoop namenode -format

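The command above fails with a NullPointerException because hadoop.tmp.dir is set to /tmp/hadoop/hadoop-${user.name}, and presumably hduser cannot write to that directory yet.

If you want to change the location, edit core-site.xml:

- /opt/hadoop-1.0.2/conf$ sudo gedit core-site.xml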

<!-- In: conf/core-site.xml -->

<property>

<name>hadoop.tmp.dir</name>

<value>/tmp/hadoop/hadoop-${user.name}</value>

<description>A base for other temporary directories.</description>

</property>

Create this path and set its ownership and permissions:

- $ sudo mkdir -p /tmp/hadoop/hadoop-hduser

- $ sudo chown hduser:hadoop /tmp/hadoop/hadoop-hduser

- # ...and if you want to tighten up security, chmod from 755 to 750...

- $ sudo chmod 750 /tmp/hadoop/hadoop-hduser
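Since the format step fails when this directory is missing or not writable, a small pre-flight check can save a confusing stack trace. A minimal sketch, assuming the path used above; dir_ready is a hypothetical helper name.

```shell
# dir_ready DIR: succeed only if DIR exists, is a directory, and is writable
# by the current user. Run it as hduser, the user that will format HDFS.
dir_ready() {
    [ -d "$1" ] && [ -w "$1" ]
}

# Example:
#   dir_ready /tmp/hadoop/hadoop-hduser || echo "fix ownership before formatting"
```

Note that /tmp is typically cleared on reboot, so the directory may need to be recreated after a restart.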

Run the format command again, and the output should look like this:

[: 107: namenode: unexpected operator

12/05/07 20:47:40 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = seven7-laptop/127.0.1.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 1.0.2

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-1.0.2 -r 1304954; compiled by 'hortonfo' on Sat Mar 24 23:58:21 UTC 2012

************************************************************/

12/05/07 20:47:41 INFO util.GSet: VM type = 32-bit

12/05/07 20:47:41 INFO util.GSet: 2% max memory = 17.77875 MB

12/05/07 20:47:41 INFO util.GSet: capacity = 2^22 = 4194304 entries

12/05/07 20:47:41 INFO util.GSet: recommended=4194304, actual=4194304

12/05/07 20:47:41 INFO namenode.FSNamesystem: fsOwner=hduser

12/05/07 20:47:41 INFO namenode.FSNamesystem: supergroup=supergroup

12/05/07 20:47:41 INFO namenode.FSNamesystem: isPermissionEnabled=true

12/05/07 20:47:41 INFO namenode.FSNamesystem: dfs.block.invalidate.limit=100

12/05/07 20:47:41 INFO namenode.FSNamesystem: isAccessTokenEnabled=false accessKeyUpdateInterval=0 min(s), accessTokenLifetime=0 min(s)

12/05/07 20:47:41 INFO namenode.NameNode: Caching file names occuring more than 10 times

12/05/07 20:47:42 INFO common.Storage: Image file of size 112 saved in 0 seconds.

12/05/07 20:47:42 INFO common.Storage: Storage directory /tmp/hadoop/hadoop-hduser/dfs/name has been successfully formatted.

12/05/07 20:47:42 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at seven7-laptop/127.0.1.1

************************************************************/

step 7. Start Hadoop

Next, use start-all.sh to start all services: namenode, datanode, secondarynamenode, jobtracker, and tasktracker.

$HADOOP_HOME/bin/start-all.sh

- /opt/hadoop-1.0.2/bin$ sh ./start-all.sh

The output looks like this:

starting namenode, logging to /opt/hadoop-1.0.2/logs/hadoop-hduser-namenode-seven7-laptop.out

localhost:

localhost: starting datanode, logging to /opt/hadoop-1.0.2/logs/hadoop-hduser-datanode-seven7-laptop.out

localhost:

localhost: starting secondarynamenode, logging to /opt/hadoop-1.0.2/logs/hadoop-hduser-secondarynamenode-seven7-laptop.out

starting jobtracker, logging to /opt/hadoop-1.0.2/logs/hadoop-hduser-jobtracker-seven7-laptop.out

localhost:

localhost: starting tasktracker, logging to /opt/hadoop-1.0.2/logs/hadoop-hduser-tasktracker-seven7-laptop.out
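These messages only say that each daemon was launched, not that it stayed up. The JDK's jps tool lists running Java processes, so its output can be compared against the five daemons named in the startup messages. A sketch, assuming jps prints one "PID ClassName" pair per line; missing_daemons is a hypothetical helper name.

```shell
# missing_daemons: read `jps` output on stdin and print every expected
# Hadoop 1.x daemon that does not appear in it.
missing_daemons() {
    running=$(cat)
    for d in NameNode DataNode SecondaryNameNode JobTracker TaskTracker; do
        # -w matches whole words, so NameNode does not match SecondaryNameNode
        printf '%s\n' "$running" | grep -qw "$d" || echo "$d"
    done
}

# Example: jps | missing_daemons    (no output means all five were found)
```

If a daemon is reported missing, the matching .log file under /opt/hadoop-1.0.2/logs is the place to look.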

step 8. Test the installation

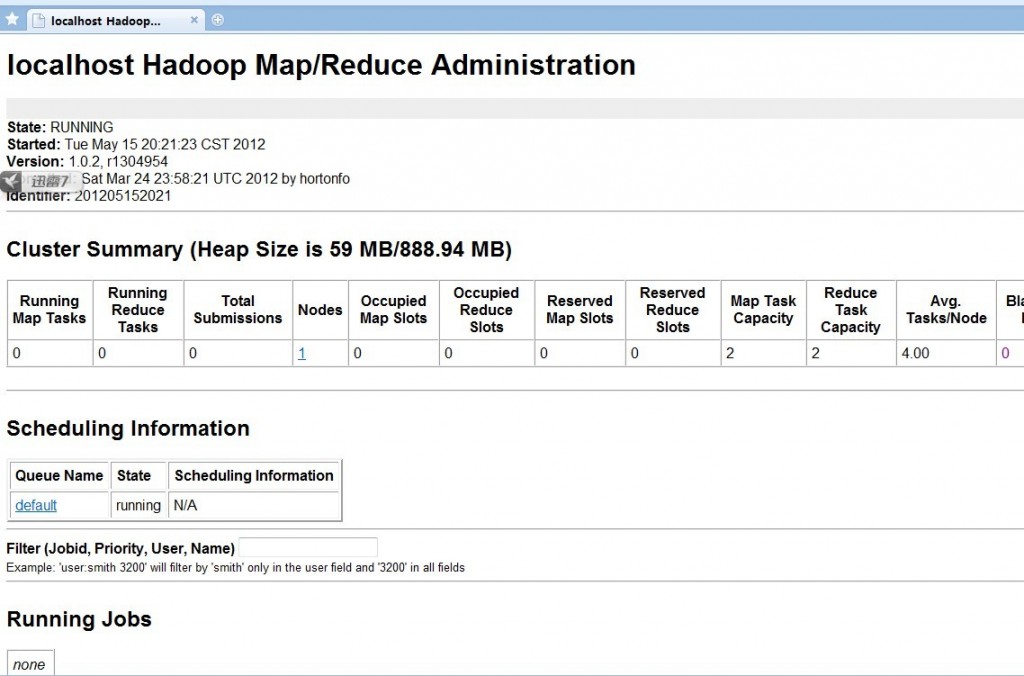

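Once the services are started, check the following URLs to see whether they are running properly:

- http://localhost:50030/ - Hadoop administration interface (JobTracker)
- http://localhost:50060/ - Hadoop TaskTracker status
- http://localhost:50070/ - Hadoop DFS status

This completes the single-node Hadoop installation. Next, we will run jobs on this single-node cluster.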

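<1>. Introduction to Hadoop

Hadoop is an open-source Apache project whose main goal is to build reliable, scalable, distributed systems. Hadoop is a collection of subprojects, including:

1. Hadoop Common: infrastructure shared by the other projects

2. HDFS: a distributed file system

3. MapReduce: a software framework for distributed processing of large data sets on compute clusters; it simplifies distributed programming.

4. Other projects include Avro (a serialization system), Cassandra (a database project), and more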

Source: 从此学习网 http://www.congci.com/item/596hadoop

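This part simulates Hadoop's distributed mode on a single machine, which in the end means running several processes on the local machine. The main goal is to run the WordCount example that ships with Hadoop; the steps are described in detail below.

(PS: I am a beginner who has only recently started with Hadoop and ran into many problems while learning it, so I have written up my own steps in great detail, simply to give as much information as possible to others who are interested in Hadoop.)

Setting up a simulated Linux environment

Cygwin is used to simulate a Linux runtime environment. Install cygwin and configure OpenSSH before continuing with the steps below.

Hadoop configuration

First, configure Hadoop:

1. At a minimum, conf/hadoop-env.sh must set JAVA_HOME. Mine, for example, is:

export JAVA_HOME="D:\Program Files\Java\jdk1.6.0_07"

If the path contains spaces, it must be enclosed in double quotes.

2. Only conf/hadoop-site.xml needs to be modified. By default hadoop-site.xml is not configured, and when running on a single machine Hadoop then executes tasks using the basic options in hadoop-default.xml.

Change hadoop-site.xml to the following: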

|

<?xml version="1.0"?> <!-- Put site-specific property overrides in this file. --> <configuration> |

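Procedure

1. Authentication setup

Start cygwin, then start the SSH service with the following command:

$ net start sshd

As shown in the figure:

Next, configure key-based authentication. This step is critical: in a distributed setup, multiple Datanode nodes have to report task status to the Namenode, and it would be a nuisance if every access to the Namenode required a password. What I describe here is passwordless authentication; see my other articles for reference.

The command to generate an RSA key pair is:

$ ssh-keygen

The generation process is shown in the figure:

When the command reaches the following prompt, a choice is required:

Enter file in which to save the key (/home/SHIYANJUN/.ssh/id_rsa):

Just press Enter. With the default option, the generated RSA private key is saved in /home/SHIYANJUN/.ssh/id_rsa and the public key next to it in id_rsa.pub, to be used for authentication between nodes.

Continuing, you are prompted for a passphrase:

Enter passphrase (empty for no passphrase):

Just press Enter, and be sure to do so: with an empty passphrase, the later steps authenticate between nodes directly with the key, without any prompt. (In fact we use DSA authentication here, although RSA works too; read on.)

The RSA key pair is used to authenticate the nodes to each other. If the generation process looks like the figure above, the key was generated correctly.

Next, generate a DSA key pair with the following command:

$ ssh-keygen -t dsa

The process is similar to the RSA one, as shown in the figure:

Then add the DSA public key to the authorized_keys file with the following command:

$ cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

As shown in the figure, the command prints nothing: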
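Because >> appends unconditionally, running the cat command a second time adds a duplicate line to authorized_keys. A sketch of an append that skips keys already present; add_key_once is a hypothetical helper name, and it assumes the public key file holds a single line, as ssh-keygen writes it.

```shell
# add_key_once PUBKEY AUTH_FILE: append the key in PUBKEY to AUTH_FILE
# unless an identical line is already there.
add_key_once() {
    key=$(cat "$1") || return 1
    touch "$2" || return 1
    grep -qxF -- "$key" "$2" || printf '%s\n' "$key" >> "$2"
}

# Example: add_key_once ~/.ssh/id_dsa.pub ~/.ssh/authorized_keys
```

Duplicate entries are harmless to sshd, so this is a tidiness measure rather than a fix.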

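At this point, Hadoop is ready to run.

2. Preparing the data files for Hadoop to process

I use hadoop-0.16.4, copied directly to the root of G:\; my cygwin is installed in G:\Cygwin.

In G:\hadoop-0.16.4, create an input directory and put a few TXT files in it. I prepared 7. Each file contains English words separated by spaces, since we are running the WordCount example; you will see later how much content I stored.

3. Running the job

Change to the G:\hadoop-0.16.4 directory:

$ cd ../../cygdrive/g/hadoop-0.16.4

cygdrive (located in the Cygwin root directory) maps directly to the Windows drives.

The job uses HDFS, Hadoop's distributed file system, so the first thing to do is format that file system with the following command:

$ bin/hadoop namenode -format

The formatting process is shown in the figure:

Now start the Namenode, Datanode, SecondaryNamenode, and JobTracker with this command:

$ bin/start-all.sh

The startup process is shown in the figure:

If you have not configured passwordless SSH as described earlier, or configured it but entered a passphrase, you are prompted for a password every time a process starts. With many processes, that would be painful.

Then copy the files prepared in the local input directory into the input directory in HDFS, so that the distributed file system manages the data files to be processed:

$ bin/hadoop dfs -put ./input input

If the command prints nothing, the copy succeeded.

Only now can you run the WordCount example that ships with Hadoop. Submit the job with the following command:

$ bin/hadoop jar hadoop-0.16.4-examples.jar wordcount input output

The last two arguments are the data input directory and the output directory for the processed data. Note that an output directory must not already exist in your G:\hadoop-0.16.4 directory, or an error is reported.

The run is shown in the figure:

The figure above shows, in considerable detail, the progress of the WordCount job while it runs.

Finally, view the results of the job with the following command:

$ bin/hadoop dfs -cat output/part-00000

The output is shown in the figure: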
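For inputs as small as these, the result can be cross-checked without Hadoop. The pipeline below counts whitespace-separated words with standard Unix tools and prints word<TAB>count lines, which is the layout the WordCount example is expected to produce. local_wordcount is a hypothetical helper name, and the ./input/*.txt pattern in the example assumes the input files carry a .txt extension.

```shell
# local_wordcount FILE...: print "word<TAB>count" for every
# whitespace-separated word in the given files, sorted by word.
local_wordcount() {
    cat -- "$@" |
        tr -s '[:space:]' '\n' |
        grep -v '^$' |
        LC_ALL=C sort |
        uniq -c |
        awk '{ printf "%s\t%s\n", $2, $1 }'
}

# Example: local_wordcount ./input/*.txt
```

Comparing this listing with the output of the dfs -cat command is a simple way to confirm that the job read every input file.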

Finally, stop the Hadoop processes with the following command:

$ bin/stop-all.sh

As shown in the figure:

That is the whole process.

http://hi.baidu.com/shirdrn/blog/item/33c762fecf9811375c600892.html

hadoop-1.1.2-1 RPM for x86_64

The Apache Hadoop project develops open-source software for reliable, scalable, distributed computing. Hadoop includes these subprojects: Hadoop Common: The common utilities that support the other Hadoop subprojects. HDFS: A distributed file system that provides high throughput access to application data. MapReduce: A software framework for distributed processing of large data sets on compute clusters.

Provides

Requires

- sh-utils

- textutils

- /usr/sbin/useradd

- /usr/sbin/usermod

- /sbin/chkconfig <=

- /sbin/service

- /bin/sh

- /bin/sh

- /bin/sh

- rpmlib(PayloadFilesHavePrefix) <= 4.0-1

- rpmlib(CompressedFileNames) <= 3.0.4-1

License

Apache License, Version 2.0

Files

/etc/hadoop/capacity-scheduler.xml /etc/hadoop/configuration.xsl /etc/hadoop/core-site.xml /etc/hadoop/fair-scheduler.xml /etc/hadoop/hadoop-env.sh /etc/hadoop/hadoop-metrics2.properties /etc/hadoop/hadoop-policy.xml /etc/hadoop/hdfs-site.xml /etc/hadoop/log4j.properties /etc/hadoop/mapred-queue-acls.xml /etc/hadoop/mapred-site.xml /etc/hadoop/masters /etc/hadoop/slaves /etc/hadoop/ssl-client.xml.example /etc/hadoop/ssl-server.xml.example /etc/hadoop/taskcontroller.cfg /etc/rc.d/init.d /etc/rc.d/init.d/hadoop-datanode /etc/rc.d/init.d/hadoop-historyserver /etc/rc.d/init.d/hadoop-jobtracker /etc/rc.d/init.d/hadoop-namenode /etc/rc.d/init.d/hadoop-secondarynamenode /etc/rc.d/init.d/hadoop-tasktracker /usr /usr/bin /usr/bin/hadoop /usr/bin/task-controller /usr/include /usr/include/hadoop /usr/include/hadoop/Pipes.hh /usr/include/hadoop/SerialUtils.hh /usr/include/hadoop/StringUtils.hh /usr/include/hadoop/TemplateFactory.hh /usr/lib /usr/lib64 /usr/lib64/libhadoop.a /usr/lib64/libhadoop.la /usr/lib64/libhadoop.so /usr/lib64/libhadoop.so.1 /usr/lib64/libhadoop.so.1.0.0 /usr/lib64/libhadooppipes.a /usr/lib64/libhadooputils.a /usr/lib64/libhdfs.a /usr/lib64/libhdfs.la /usr/lib64/libhdfs.so /usr/lib64/libhdfs.so.0 /usr/lib64/libhdfs.so.0.0.0 /usr/libexec /usr/libexec/hadoop-config.sh /usr/libexec/jsvc.amd64 /usr/man /usr/native /usr/sbin /usr/sbin/hadoop-create-user.sh /usr/sbin/hadoop-daemon.sh /usr/sbin/hadoop-daemons.sh /usr/sbin/hadoop-setup-applications.sh /usr/sbin/hadoop-setup-conf.sh /usr/sbin/hadoop-setup-hdfs.sh /usr/sbin/hadoop-setup-single-node.sh /usr/sbin/hadoop-validate-setup.sh /usr/sbin/rcc /usr/sbin/slaves.sh /usr/sbin/start-all.sh /usr/sbin/start-balancer.sh /usr/sbin/start-dfs.sh /usr/sbin/start-jobhistoryserver.sh /usr/sbin/start-mapred.sh /usr/sbin/stop-all.sh /usr/sbin/stop-balancer.sh /usr/sbin/stop-dfs.sh /usr/sbin/stop-jobhistoryserver.sh /usr/sbin/stop-mapred.sh /usr/sbin/update-hadoop-env.sh /usr/share /usr/share/doc /usr/share/doc/hadoop 
/usr/share/doc/hadoop/CHANGES.txt /usr/share/doc/hadoop/LICENSE.txt /usr/share/doc/hadoop/NOTICE.txt /usr/share/doc/hadoop/README.txt /usr/share/hadoop /usr/share/hadoop/contrib /usr/share/hadoop/contrib/datajoin /usr/share/hadoop/contrib/datajoin/hadoop-datajoin-1.1.2.jar /usr/share/hadoop/contrib/failmon /usr/share/hadoop/contrib/failmon/hadoop-failmon-1.1.2.jar /usr/share/hadoop/contrib/gridmix /usr/share/hadoop/contrib/gridmix/hadoop-gridmix-1.1.2.jar /usr/share/hadoop/contrib/hdfsproxy /usr/share/hadoop/contrib/hdfsproxy/README /usr/share/hadoop/contrib/hdfsproxy/bin /usr/share/hadoop/contrib/hdfsproxy/bin/hdfsproxy /usr/share/hadoop/contrib/hdfsproxy/bin/hdfsproxy-config.sh /usr/share/hadoop/contrib/hdfsproxy/bin/hdfsproxy-daemon.sh /usr/share/hadoop/contrib/hdfsproxy/bin/hdfsproxy-daemons.sh /usr/share/hadoop/contrib/hdfsproxy/bin/hdfsproxy-slaves.sh /usr/share/hadoop/contrib/hdfsproxy/bin/start-hdfsproxy.sh /usr/share/hadoop/contrib/hdfsproxy/bin/stop-hdfsproxy.sh /usr/share/hadoop/contrib/hdfsproxy/build.xml /usr/share/hadoop/contrib/hdfsproxy/conf /usr/share/hadoop/contrib/hdfsproxy/conf/configuration.xsl /usr/share/hadoop/contrib/hdfsproxy/conf/hdfsproxy-default.xml /usr/share/hadoop/contrib/hdfsproxy/conf/hdfsproxy-env.sh /usr/share/hadoop/contrib/hdfsproxy/conf/hdfsproxy-env.sh.template /usr/share/hadoop/contrib/hdfsproxy/conf/hdfsproxy-hosts /usr/share/hadoop/contrib/hdfsproxy/conf/log4j.properties /usr/share/hadoop/contrib/hdfsproxy/conf/ssl-server.xml /usr/share/hadoop/contrib/hdfsproxy/conf/tomcat-forward-web.xml /usr/share/hadoop/contrib/hdfsproxy/conf/tomcat-web.xml /usr/share/hadoop/contrib/hdfsproxy/conf/user-certs.xml /usr/share/hadoop/contrib/hdfsproxy/conf/user-permissions.xml /usr/share/hadoop/contrib/hdfsproxy/hdfsproxy-2.0.jar /usr/share/hadoop/contrib/hdfsproxy/logs /usr/share/hadoop/contrib/hod /usr/share/hadoop/contrib/hod/CHANGES.txt /usr/share/hadoop/contrib/hod/README /usr/share/hadoop/contrib/hod/bin 
/usr/share/hadoop/contrib/hod/bin/VERSION /usr/share/hadoop/contrib/hod/bin/checknodes /usr/share/hadoop/contrib/hod/bin/hod /usr/share/hadoop/contrib/hod/bin/hodcleanup /usr/share/hadoop/contrib/hod/bin/hodring /usr/share/hadoop/contrib/hod/bin/ringmaster /usr/share/hadoop/contrib/hod/bin/verify-account /usr/share/hadoop/contrib/hod/build.xml /usr/share/hadoop/contrib/hod/conf /usr/share/hadoop/contrib/hod/conf/hodrc /usr/share/hadoop/contrib/hod/config.txt /usr/share/hadoop/contrib/hod/getting_started.txt /usr/share/hadoop/contrib/hod/hodlib /usr/share/hadoop/contrib/hod/hodlib/AllocationManagers /usr/share/hadoop/contrib/hod/hodlib/AllocationManagers/__init__.py /usr/share/hadoop/contrib/hod/hodlib/AllocationManagers/__init__.pyc /usr/share/hadoop/contrib/hod/hodlib/AllocationManagers/__init__.pyo /usr/share/hadoop/contrib/hod/hodlib/AllocationManagers/goldAllocationManager.py /usr/share/hadoop/contrib/hod/hodlib/AllocationManagers/goldAllocationManager.pyc /usr/share/hadoop/contrib/hod/hodlib/AllocationManagers/goldAllocationManager.pyo /usr/share/hadoop/contrib/hod/hodlib/Common /usr/share/hadoop/contrib/hod/hodlib/Common/__init__.py /usr/share/hadoop/contrib/hod/hodlib/Common/__init__.pyc /usr/share/hadoop/contrib/hod/hodlib/Common/__init__.pyo /usr/share/hadoop/contrib/hod/hodlib/Common/allocationManagerUtil.py /usr/share/hadoop/contrib/hod/hodlib/Common/allocationManagerUtil.pyc /usr/share/hadoop/contrib/hod/hodlib/Common/allocationManagerUtil.pyo /usr/share/hadoop/contrib/hod/hodlib/Common/desc.py /usr/share/hadoop/contrib/hod/hodlib/Common/desc.pyc /usr/share/hadoop/contrib/hod/hodlib/Common/desc.pyo /usr/share/hadoop/contrib/hod/hodlib/Common/descGenerator.py /usr/share/hadoop/contrib/hod/hodlib/Common/descGenerator.pyc /usr/share/hadoop/contrib/hod/hodlib/Common/descGenerator.pyo /usr/share/hadoop/contrib/hod/hodlib/Common/hodsvc.py /usr/share/hadoop/contrib/hod/hodlib/Common/hodsvc.pyc /usr/share/hadoop/contrib/hod/hodlib/Common/hodsvc.pyo 
/usr/share/hadoop/contrib/hod/hodlib/Common/logger.py /usr/share/hadoop/contrib/hod/hodlib/Common/logger.pyc /usr/share/hadoop/contrib/hod/hodlib/Common/logger.pyo /usr/share/hadoop/contrib/hod/hodlib/Common/miniHTMLParser.py /usr/share/hadoop/contrib/hod/hodlib/Common/miniHTMLParser.pyc /usr/share/hadoop/contrib/hod/hodlib/Common/miniHTMLParser.pyo /usr/share/hadoop/contrib/hod/hodlib/Common/nodepoolutil.py /usr/share/hadoop/contrib/hod/hodlib/Common/nodepoolutil.pyc /usr/share/hadoop/contrib/hod/hodlib/Common/nodepoolutil.pyo /usr/share/hadoop/contrib/hod/hodlib/Common/setup.py /usr/share/hadoop/contrib/hod/hodlib/Common/setup.pyc /usr/share/hadoop/contrib/hod/hodlib/Common/setup.pyo /usr/share/hadoop/contrib/hod/hodlib/Common/socketServers.py /usr/share/hadoop/contrib/hod/hodlib/Common/socketServers.pyc /usr/share/hadoop/contrib/hod/hodlib/Common/socketServers.pyo /usr/share/hadoop/contrib/hod/hodlib/Common/tcp.py /usr/share/hadoop/contrib/hod/hodlib/Common/tcp.pyc /usr/share/hadoop/contrib/hod/hodlib/Common/tcp.pyo /usr/share/hadoop/contrib/hod/hodlib/Common/threads.py /usr/share/hadoop/contrib/hod/hodlib/Common/threads.pyc /usr/share/hadoop/contrib/hod/hodlib/Common/threads.pyo /usr/share/hadoop/contrib/hod/hodlib/Common/types.py /usr/share/hadoop/contrib/hod/hodlib/Common/types.pyc /usr/share/hadoop/contrib/hod/hodlib/Common/types.pyo /usr/share/hadoop/contrib/hod/hodlib/Common/util.py /usr/share/hadoop/contrib/hod/hodlib/Common/util.pyc /usr/share/hadoop/contrib/hod/hodlib/Common/util.pyo /usr/share/hadoop/contrib/hod/hodlib/Common/xmlrpc.py /usr/share/hadoop/contrib/hod/hodlib/Common/xmlrpc.pyc /usr/share/hadoop/contrib/hod/hodlib/Common/xmlrpc.pyo /usr/share/hadoop/contrib/hod/hodlib/GridServices /usr/share/hadoop/contrib/hod/hodlib/GridServices/__init__.py /usr/share/hadoop/contrib/hod/hodlib/GridServices/__init__.pyc /usr/share/hadoop/contrib/hod/hodlib/GridServices/__init__.pyo /usr/share/hadoop/contrib/hod/hodlib/GridServices/hdfs.py 
/usr/share/hadoop/contrib/hod/hodlib/GridServices/hdfs.pyc /usr/share/hadoop/contrib/hod/hodlib/GridServices/hdfs.pyo /usr/share/hadoop/contrib/hod/hodlib/GridServices/mapred.py /usr/share/hadoop/contrib/hod/hodlib/GridServices/mapred.pyc /usr/share/hadoop/contrib/hod/hodlib/GridServices/mapred.pyo /usr/share/hadoop/contrib/hod/hodlib/GridServices/service.py /usr/share/hadoop/contrib/hod/hodlib/GridServices/service.pyc /usr/share/hadoop/contrib/hod/hodlib/GridServices/service.pyo /usr/share/hadoop/contrib/hod/hodlib/Hod /usr/share/hadoop/contrib/hod/hodlib/Hod/__init__.py /usr/share/hadoop/contrib/hod/hodlib/Hod/__init__.pyc /usr/share/hadoop/contrib/hod/hodlib/Hod/__init__.pyo /usr/share/hadoop/contrib/hod/hodlib/Hod/hadoop.py /usr/share/hadoop/contrib/hod/hodlib/Hod/hadoop.pyc /usr/share/hadoop/contrib/hod/hodlib/Hod/hadoop.pyo /usr/share/hadoop/contrib/hod/hodlib/Hod/hod.py /usr/share/hadoop/contrib/hod/hodlib/Hod/hod.pyc /usr/share/hadoop/contrib/hod/hodlib/Hod/hod.pyo /usr/share/hadoop/contrib/hod/hodlib/Hod/nodePool.py /usr/share/hadoop/contrib/hod/hodlib/Hod/nodePool.pyc /usr/share/hadoop/contrib/hod/hodlib/Hod/nodePool.pyo /usr/share/hadoop/contrib/hod/hodlib/HodRing /usr/share/hadoop/contrib/hod/hodlib/HodRing/__init__.py /usr/share/hadoop/contrib/hod/hodlib/HodRing/__init__.pyc /usr/share/hadoop/contrib/hod/hodlib/HodRing/__init__.pyo /usr/share/hadoop/contrib/hod/hodlib/HodRing/hodRing.py /usr/share/hadoop/contrib/hod/hodlib/HodRing/hodRing.pyc /usr/share/hadoop/contrib/hod/hodlib/HodRing/hodRing.pyo /usr/share/hadoop/contrib/hod/hodlib/NodePools /usr/share/hadoop/contrib/hod/hodlib/NodePools/__init__.py /usr/share/hadoop/contrib/hod/hodlib/NodePools/__init__.pyc /usr/share/hadoop/contrib/hod/hodlib/NodePools/__init__.pyo /usr/share/hadoop/contrib/hod/hodlib/NodePools/torque.py /usr/share/hadoop/contrib/hod/hodlib/NodePools/torque.pyc /usr/share/hadoop/contrib/hod/hodlib/NodePools/torque.pyo /usr/share/hadoop/contrib/hod/hodlib/RingMaster 
/usr/share/hadoop/contrib/hod/hodlib/RingMaster/__init__.py /usr/share/hadoop/contrib/hod/hodlib/RingMaster/__init__.pyc /usr/share/hadoop/contrib/hod/hodlib/RingMaster/__init__.pyo /usr/share/hadoop/contrib/hod/hodlib/RingMaster/idleJobTracker.py /usr/share/hadoop/contrib/hod/hodlib/RingMaster/idleJobTracker.pyc /usr/share/hadoop/contrib/hod/hodlib/RingMaster/idleJobTracker.pyo /usr/share/hadoop/contrib/hod/hodlib/RingMaster/ringMaster.py /usr/share/hadoop/contrib/hod/hodlib/RingMaster/ringMaster.pyc /usr/share/hadoop/contrib/hod/hodlib/RingMaster/ringMaster.pyo /usr/share/hadoop/contrib/hod/hodlib/Schedulers /usr/share/hadoop/contrib/hod/hodlib/Schedulers/__init__.py /usr/share/hadoop/contrib/hod/hodlib/Schedulers/__init__.pyc /usr/share/hadoop/contrib/hod/hodlib/Schedulers/__init__.pyo /usr/share/hadoop/contrib/hod/hodlib/Schedulers/torque.py /usr/share/hadoop/contrib/hod/hodlib/Schedulers/torque.pyc /usr/share/hadoop/contrib/hod/hodlib/Schedulers/torque.pyo /usr/share/hadoop/contrib/hod/hodlib/ServiceProxy /usr/share/hadoop/contrib/hod/hodlib/ServiceProxy/__init__.py /usr/share/hadoop/contrib/hod/hodlib/ServiceProxy/__init__.pyc /usr/share/hadoop/contrib/hod/hodlib/ServiceProxy/__init__.pyo /usr/share/hadoop/contrib/hod/hodlib/ServiceProxy/serviceProxy.py /usr/share/hadoop/contrib/hod/hodlib/ServiceProxy/serviceProxy.pyc /usr/share/hadoop/contrib/hod/hodlib/ServiceProxy/serviceProxy.pyo /usr/share/hadoop/contrib/hod/hodlib/ServiceRegistry /usr/share/hadoop/contrib/hod/hodlib/ServiceRegistry/__init__.py /usr/share/hadoop/contrib/hod/hodlib/ServiceRegistry/__init__.pyc /usr/share/hadoop/contrib/hod/hodlib/ServiceRegistry/__init__.pyo /usr/share/hadoop/contrib/hod/hodlib/ServiceRegistry/serviceRegistry.py /usr/share/hadoop/contrib/hod/hodlib/ServiceRegistry/serviceRegistry.pyc /usr/share/hadoop/contrib/hod/hodlib/ServiceRegistry/serviceRegistry.pyo /usr/share/hadoop/contrib/hod/hodlib/__init__.py /usr/share/hadoop/contrib/hod/hodlib/__init__.pyc 
/usr/share/hadoop/contrib/hod/hodlib/__init__.pyo /usr/share/hadoop/contrib/hod/ivy /usr/share/hadoop/contrib/hod/ivy.xml /usr/share/hadoop/contrib/hod/ivy/libraries.properties /usr/share/hadoop/contrib/hod/support /usr/share/hadoop/contrib/hod/support/checklimits.sh /usr/share/hadoop/contrib/hod/support/logcondense.py /usr/share/hadoop/contrib/hod/support/logcondense.pyc /usr/share/hadoop/contrib/hod/support/logcondense.pyo /usr/share/hadoop/contrib/hod/testing /usr/share/hadoop/contrib/hod/testing/__init__.py /usr/share/hadoop/contrib/hod/testing/__init__.pyc /usr/share/hadoop/contrib/hod/testing/__init__.pyo /usr/share/hadoop/contrib/hod/testing/helper.py /usr/share/hadoop/contrib/hod/testing/helper.pyc /usr/share/hadoop/contrib/hod/testing/helper.pyo /usr/share/hadoop/contrib/hod/testing/lib.py /usr/share/hadoop/contrib/hod/testing/main.py /usr/share/hadoop/contrib/hod/testing/main.pyc /usr/share/hadoop/contrib/hod/testing/main.pyo /usr/share/hadoop/contrib/hod/testing/testHadoop.py /usr/share/hadoop/contrib/hod/testing/testHadoop.pyc /usr/share/hadoop/contrib/hod/testing/testHadoop.pyo /usr/share/hadoop/contrib/hod/testing/testHod.py /usr/share/hadoop/contrib/hod/testing/testHod.pyc /usr/share/hadoop/contrib/hod/testing/testHod.pyo /usr/share/hadoop/contrib/hod/testing/testHodCleanup.py /usr/share/hadoop/contrib/hod/testing/testHodCleanup.pyc /usr/share/hadoop/contrib/hod/testing/testHodCleanup.pyo /usr/share/hadoop/contrib/hod/testing/testHodRing.py /usr/share/hadoop/contrib/hod/testing/testHodRing.pyc /usr/share/hadoop/contrib/hod/testing/testHodRing.pyo /usr/share/hadoop/contrib/hod/testing/testModule.py /usr/share/hadoop/contrib/hod/testing/testModule.pyc /usr/share/hadoop/contrib/hod/testing/testModule.pyo /usr/share/hadoop/contrib/hod/testing/testRingmasterRPCs.py /usr/share/hadoop/contrib/hod/testing/testRingmasterRPCs.pyc /usr/share/hadoop/contrib/hod/testing/testRingmasterRPCs.pyo /usr/share/hadoop/contrib/hod/testing/testThreads.py 
/usr/share/hadoop/contrib/hod/testing/testThreads.pyc /usr/share/hadoop/contrib/hod/testing/testThreads.pyo /usr/share/hadoop/contrib/hod/testing/testTypes.py /usr/share/hadoop/contrib/hod/testing/testTypes.pyc /usr/share/hadoop/contrib/hod/testing/testTypes.pyo /usr/share/hadoop/contrib/hod/testing/testUtil.py /usr/share/hadoop/contrib/hod/testing/testUtil.pyc /usr/share/hadoop/contrib/hod/testing/testUtil.pyo /usr/share/hadoop/contrib/hod/testing/testXmlrpc.py /usr/share/hadoop/contrib/hod/testing/testXmlrpc.pyc /usr/share/hadoop/contrib/hod/testing/testXmlrpc.pyo /usr/share/hadoop/contrib/index /usr/share/hadoop/contrib/index/hadoop-index-1.1.2.jar /usr/share/hadoop/contrib/streaming /usr/share/hadoop/contrib/streaming/hadoop-streaming-1.1.2.jar /usr/share/hadoop/contrib/vaidya /usr/share/hadoop/contrib/vaidya/bin /usr/share/hadoop/contrib/vaidya/bin/vaidya.sh /usr/share/hadoop/contrib/vaidya/conf /usr/share/hadoop/contrib/vaidya/conf/postex_diagnosis_tests.xml /usr/share/hadoop/contrib/vaidya/hadoop-vaidya-1.1.2.jar /usr/share/hadoop/hadoop-ant-1.1.2.jar /usr/share/hadoop/hadoop-client-1.1.2.jar /usr/share/hadoop/hadoop-core-1.1.2.jar /usr/share/hadoop/hadoop-examples-1.1.2.jar /usr/share/hadoop/hadoop-minicluster-1.1.2.jar /usr/share/hadoop/hadoop-test-1.1.2.jar /usr/share/hadoop/hadoop-tools-1.1.2.jar /usr/share/hadoop/lib /usr/share/hadoop/lib/asm-3.2.jar /usr/share/hadoop/lib/aspectjrt-1.6.11.jar /usr/share/hadoop/lib/aspectjtools-1.6.11.jar /usr/share/hadoop/lib/commons-beanutils-1.7.0.jar /usr/share/hadoop/lib/commons-beanutils-core-1.8.0.jar /usr/share/hadoop/lib/commons-cli-1.2.jar /usr/share/hadoop/lib/commons-codec-1.4.jar /usr/share/hadoop/lib/commons-collections-3.2.1.jar /usr/share/hadoop/lib/commons-configuration-1.6.jar /usr/share/hadoop/lib/commons-daemon-1.0.1.jar /usr/share/hadoop/lib/commons-digester-1.8.jar /usr/share/hadoop/lib/commons-el-1.0.jar /usr/share/hadoop/lib/commons-httpclient-3.0.1.jar /usr/share/hadoop/lib/commons-io-2.1.jar 
/usr/share/hadoop/lib/commons-lang-2.4.jar /usr/share/hadoop/lib/commons-logging-1.1.1.jar /usr/share/hadoop/lib/commons-logging-api-1.0.4.jar /usr/share/hadoop/lib/commons-math-2.1.jar /usr/share/hadoop/lib/commons-net-3.1.jar /usr/share/hadoop/lib/core-3.1.1.jar /usr/share/hadoop/lib/hadoop-capacity-scheduler-1.1.2.jar /usr/share/hadoop/lib/hadoop-fairscheduler-1.1.2.jar /usr/share/hadoop/lib/hadoop-thriftfs-1.1.2.jar /usr/share/hadoop/lib/hsqldb-1.8.0.10.LICENSE.txt /usr/share/hadoop/lib/hsqldb-1.8.0.10.jar /usr/share/hadoop/lib/jackson-core-asl-1.8.8.jar /usr/share/hadoop/lib/jackson-mapper-asl-1.8.8.jar /usr/share/hadoop/lib/jasper-compiler-5.5.12.jar /usr/share/hadoop/lib/jasper-runtime-5.5.12.jar /usr/share/hadoop/lib/jdeb-0.8.jar /usr/share/hadoop/lib/jdiff /usr/share/hadoop/lib/jdiff/hadoop_0.17.0.xml /usr/share/hadoop/lib/jdiff/hadoop_0.18.1.xml /usr/share/hadoop/lib/jdiff/hadoop_0.18.2.xml /usr/share/hadoop/lib/jdiff/hadoop_0.18.3.xml /usr/share/hadoop/lib/jdiff/hadoop_0.19.0.xml /usr/share/hadoop/lib/jdiff/hadoop_0.19.1.xml /usr/share/hadoop/lib/jdiff/hadoop_0.19.2.xml /usr/share/hadoop/lib/jdiff/hadoop_0.20.1.xml /usr/share/hadoop/lib/jdiff/hadoop_0.20.205.0.xml /usr/share/hadoop/lib/jdiff/hadoop_1.0.0.xml /usr/share/hadoop/lib/jdiff/hadoop_1.0.1.xml /usr/share/hadoop/lib/jdiff/hadoop_1.0.2.xml /usr/share/hadoop/lib/jdiff/hadoop_1.0.3.xml /usr/share/hadoop/lib/jdiff/hadoop_1.0.4.xml /usr/share/hadoop/lib/jdiff/hadoop_1.1.0.xml /usr/share/hadoop/lib/jdiff/hadoop_1.1.1.xml /usr/share/hadoop/lib/jdiff/hadoop_1.1.2.xml /usr/share/hadoop/lib/jersey-core-1.8.jar /usr/share/hadoop/lib/jersey-json-1.8.jar /usr/share/hadoop/lib/jersey-server-1.8.jar /usr/share/hadoop/lib/jets3t-0.6.1.jar /usr/share/hadoop/lib/jetty-6.1.26.jar /usr/share/hadoop/lib/jetty-util-6.1.26.jar /usr/share/hadoop/lib/jsch-0.1.42.jar /usr/share/hadoop/lib/jsp-2.1 /usr/share/hadoop/lib/jsp-2.1/jsp-2.1.jar /usr/share/hadoop/lib/jsp-2.1/jsp-api-2.1.jar /usr/share/hadoop/lib/junit-4.5.jar 
/usr/share/hadoop/lib/kfs-0.2.2.jar /usr/share/hadoop/lib/kfs-0.2.LICENSE.txt /usr/share/hadoop/lib/log4j-1.2.15.jar /usr/share/hadoop/lib/mockito-all-1.8.5.jar /usr/share/hadoop/lib/oro-2.0.8.jar /usr/share/hadoop/lib/servlet-api-2.5-20081211.jar /usr/share/hadoop/lib/slf4j-api-1.4.3.jar /usr/share/hadoop/lib/slf4j-log4j12-1.4.3.jar /usr/share/hadoop/lib/xmlenc-0.52.jar /usr/share/hadoop/templates /usr/share/hadoop/templates/conf /usr/share/hadoop/templates/conf/capacity-scheduler.xml /usr/share/hadoop/templates/conf/commons-logging.properties /usr/share/hadoop/templates/conf/core-site.xml /usr/share/hadoop/templates/conf/hadoop-env.sh /usr/share/hadoop/templates/conf/hadoop-metrics2.properties /usr/share/hadoop/templates/conf/hadoop-policy.xml /usr/share/hadoop/templates/conf/hdfs-site.xml /usr/share/hadoop/templates/conf/log4j.properties /usr/share/hadoop/templates/conf/mapred-queue-acls.xml /usr/share/hadoop/templates/conf/mapred-site.xml /usr/share/hadoop/templates/conf/taskcontroller.cfg /usr/share/hadoop/webapps /usr/share/hadoop/webapps/datanode /usr/share/hadoop/webapps/datanode/WEB-INF /usr/share/hadoop/webapps/datanode/WEB-INF/web.xml /usr/share/hadoop/webapps/hdfs /usr/share/hadoop/webapps/hdfs/WEB-INF /usr/share/hadoop/webapps/hdfs/WEB-INF/web.xml /usr/share/hadoop/webapps/hdfs/index.html /usr/share/hadoop/webapps/history /usr/share/hadoop/webapps/history/WEB-INF /usr/share/hadoop/webapps/history/WEB-INF/web.xml /usr/share/hadoop/webapps/job /usr/share/hadoop/webapps/job/WEB-INF /usr/share/hadoop/webapps/job/WEB-INF/web.xml /usr/share/hadoop/webapps/job/analysejobhistory.jsp /usr/share/hadoop/webapps/job/gethistory.jsp /usr/share/hadoop/webapps/job/index.html /usr/share/hadoop/webapps/job/job_authorization_error.jsp /usr/share/hadoop/webapps/job/jobblacklistedtrackers.jsp /usr/share/hadoop/webapps/job/jobconf.jsp /usr/share/hadoop/webapps/job/jobconf_history.jsp /usr/share/hadoop/webapps/job/jobdetails.jsp 
/usr/share/hadoop/webapps/job/jobdetailshistory.jsp /usr/share/hadoop/webapps/job/jobfailures.jsp /usr/share/hadoop/webapps/job/jobhistory.jsp /usr/share/hadoop/webapps/job/jobhistoryhome.jsp /usr/share/hadoop/webapps/job/jobqueue_details.jsp /usr/share/hadoop/webapps/job/jobtasks.jsp /usr/share/hadoop/webapps/job/jobtaskshistory.jsp /usr/share/hadoop/webapps/job/jobtracker.jsp /usr/share/hadoop/webapps/job/legacyjobhistory.jsp /usr/share/hadoop/webapps/job/loadhistory.jsp /usr/share/hadoop/webapps/job/machines.jsp /usr/share/hadoop/webapps/job/taskdetails.jsp /usr/share/hadoop/webapps/job/taskdetailshistory.jsp /usr/share/hadoop/webapps/job/taskstats.jsp /usr/share/hadoop/webapps/job/taskstatshistory.jsp /usr/share/hadoop/webapps/secondary /usr/share/hadoop/webapps/secondary/WEB-INF /usr/share/hadoop/webapps/static /usr/share/hadoop/webapps/static/hadoop-logo.jpg /usr/share/hadoop/webapps/static/hadoop.css /usr/share/hadoop/webapps/static/jobconf.xsl /usr/share/hadoop/webapps/static/jobtracker.js /usr/share/hadoop/webapps/static/sorttable.js /usr/share/hadoop/webapps/task /usr/share/hadoop/webapps/task/WEB-INF /usr/share/hadoop/webapps/task/WEB-INF/web.xml /usr/share/hadoop/webapps/task/index.html /var/log/hadoop /var/run/hadoop
