- µÁÅÞºê: 42752 µ¼í

- µÇºÕê½:

- µØÑÞç¬: Õîùõ║¼

-

µûçþ½áÕêåþ▒╗

þñ¥Õî║þëêÕØù

- µêæþÜäÞÁäÞ«» ( 0)

- µêæþÜäÞ«║ÕØø ( 5)

- µêæþÜäÚù«þ¡ö ( 0)

Õ¡ÿµíúÕêåþ▒╗

- 2012-10 ( 2)

- 2012-08 ( 1)

- 2012-07 ( 1)

- µø┤ÕñÜÕ¡ÿµíú...

µ£Çµû░Þ»äÞ«║

ÕåàÕ¡ÿÕ»╣Ú¢ÉþÜäÕăþÉå´╝îõ¢£þö¿,õ¥ïÕ¡Éõ╗ÑÕÅèõ©Çõ║øÞºäÕêÆ´╝êõ©¡Þï▒µûçÞ»┤µÿÄ´╝îÚÇéþö¿sizeofþ╗ôµ×äõ¢ô´╝ë

- ÕìÜÕ«óÕêåþ▒╗´╝Ü

- C

┬áõ©Ç┬á┬á ┬á ÕåàÕ¡ÿÞ»╗ÕÅûþ▓ÆÕ║ª┬á ┬á ┬á ┬á┬áMemory access granularity ┬á

Programmers are conditioned to think of memory as a simple array of bytes. Among C and its descendants, char* is ubiquitous as meaning "a block of memory", and even Java? has its byte[] type to represent raw memory.

Figure 1. How programmers see memory

However, your computer's processor does not read from and write to memory in byte-sized chunks. Instead, it accesses memory in two-, four-, eight- 16- or even 32-byte chunks. We'll call the size in which a processor accesses memory its memory access granularity.

Figure 2. How processors see memory

The difference between how high-level programmers think of memory and how modern processors actually work with memory raises interesting issues that this article explores.

If you don't understand and address alignment issues in your software, the following scenarios, in increasing order of severity, are all possible:

- Your software will run slower.

- Your application will lock up.

- Your operating system will crash.

- Your software will silently fail, yielding incorrect results.

ÚÿƒÕêùÕăþÉå┬áAlignment fundamentals

To illustrate the principles behind alignment, examine a constant task, and how it's affected by a processor's memory access granularity. The task is simple: first read four bytes from address 0 into the processor's register. Then read four bytes from address 1 into the same register.

First examine what would happen on a processor with a one-byte memory access granularity:

Figure 3. Single-byte memory access granularity

This fits in with the naive programmer's model of how memory works: it takes the same four memory accesses to read from address 0 as it does from address 1. Now see what would happen on a processor with two-byte granularity, like the original 68000:

Figure 4. Double-byte memory access granularity

When reading from address 0, a processor with two-byte granularity takes half the number of memory accesses as a processor with one-byte granularity. Because each memory access entails a fixed amount overhead, minimizing the number of accesses can really help performance.

However, notice what happens when reading from address 1. Because the address doesn't fall evenly on the processor's memory access boundary, the processor has extra work to do. Such an address is known as an unaligned address. Because address 1 is unaligned, a processor with two-byte granularity must perform an extra memory access, slowing down the operation.

Finally, examine what would happen on a processor with four-byte memory access granularity, like the 68030 or PowerPC? 601:

Figure 5. Quad-byte memory access granularity

A processor with four-byte granularity can slurp up four bytes from an aligned address with one read. Also note that reading from an unaligned address doubles the access count.

Now that you understand the fundamentals behind aligned data access, you can explore some of the issues related to alignment.

A processor has to perform some tricks when instructed to access an unaligned address. Going back to the example of reading four bytes from address 1 on a processor with four-byte granularity, you can work out exactly what needs to be done:

Figure 6. How processors handle unaligned memory access

The processor needs to read the first chunk of the unaligned address and shift out the "unwanted" bytes from the first chunk. Then it needs to read the second chunk of the unaligned address and shift out some of its information. Finally, the two are merged together for placement in the register. It's a lot of work.

Some processors just aren't willing to do all of that work for you.

The original 68000 was a processor with two-byte granularity and lacked the circuitry to cope with unaligned addresses. When presented with such an address, the processor would throw an exception. The original Mac OS didn't take very kindly to this exception, and would usually demand the user restart the machine. Ouch.

Later processors in the 680x0 series, such as the 68020, lifted this restriction and performed the necessary work for you. This explains why some old software that works on the 68020 crashes on the 68000. It also explains why, way back when, some old Mac coders initialized pointers with odd addresses. On the original Mac, if the pointer was accessed without being reassigned to a valid address, the Mac would immediately drop into the debugger. Often they could then examine the calling chain stack and figure out where the mistake was.

All processors have a finite number of transistors to get work done. Adding unaligned address access support cuts into this "transistor budget." These transistors could otherwise be used to make other portions of the processor work faster, or add new functionality altogether.

An example of a processor that sacrifices unaligned address access support in the name of speed is MIPS. MIPS is a great example of a processor that does away with almost all frivolity in the name of getting real work done faster.

The PowerPC takes a hybrid approach. Every PowerPC processor to date has hardware support for unaligned 32-bit integer access. While you still pay a performance penalty for unaligned access, it tends to be small.

On the other hand, modern PowerPC processors lack hardware support for unaligned 64-bit floating-point access. When asked to load an unaligned floating-point number from memory, modern PowerPC processors will throw an exception and have the operating system perform the alignment chores in software. Performing alignment in software is much slower than performing it in hardware.

õ║î ÚǃÕ║ª Speed ´╝êÕåàÕ¡ÿÕ»╣Ú¢ÉþÜäÕƒ║µ£¼ÕăþÉå´╝ë

ÕåàÕ¡ÿÕ»╣ڢɵ£ëõ©Çõ©¬ÕÑ¢Õñäµÿ»µÅÉÚ½ÿÞ«┐Úù«ÕåàÕ¡ÿþÜäÚǃÕ║ª´╝îÕøáõ©║Õ£¿Þ«©Õñܵò░µì«þ╗ôµ×äõ©¡Úâ¢Ú£ÇÞªüÕìáþö¿ÕåàÕ¡ÿ´╝îÕ£¿Õ¥êÕñÜþ│╗þ╗ƒõ©¡´╝îÞªüµ▒éÕåàÕ¡ÿÕêåÚàìþÜäµùÂÕÇÖÞªüÕ»╣Ú¢É.õ©ïÚØóµÿ»Õ»╣õ©║õ╗Çõ╣êÕÅ»õ╗ѵÅÉÚ½ÿÕåàÕ¡ÿÚǃÕ║ªÚÇÜÞ┐çõ╗úþáüÕüÜõ║åÞºúÚçè´╝ü

õ╗úþáüÞºúÚçè┬áWriting some tests illustrates the performance penalties of unaligned memory access. The test is simple: you read, negate, and write back the numbers in a ten-megabyte buffer. These tests have two variables:

- The size, in bytes, in which you process the buffer. First you'll process the buffer one byte at a time. Then you'll move onto two-, four- and eight-bytes at a time.

- The alignment of the buffer. You'll stagger the alignment of the buffer by incrementing the pointer to the buffer and running each test again.

These tests were performed on a 800 MHz PowerBook G4. To help normalize performance fluctuations from interrupt processing, each test was run ten times, keeping the average of the runs. First up is the test that operates on a single byte at a time:

Listing 1. Munging data one byte at a timevoid Munge8( void *data, uint32_t size ) {

uint8_t *data8 = (uint8_t*) data;

uint8_t *data8End = data8 + size;

while( data8 != data8End ) {

*data8++ = -*data8;

}

}

It took an average of 67,364 microseconds to execute this function. Now modify it to work on two bytes at a time instead of one byte at a time -- which will halve the number of memory accesses:

Listing 2. Munging data two bytes at a time

void Munge16( void *data, uint32_t size ) {

uint16_t *data16 = (uint16_t*) data;

uint16_t *data16End = data16 + (size >> 1); /* Divide size by 2. */

uint8_t *data8 = (uint8_t*) data16End;

uint8_t *data8End = data8 + (size & 0x00000001); /* Strip upper 31 bits. */

while( data16 != data16End ) {

*data16++ = -*data16;

}

while( data8 != data8End ) {

*data8++ = -*data8;

}

}

This function took 48,765 microseconds to process the same ten-megabyte buffer -- 38% faster than Munge8. However, that buffer was aligned. If the buffer is unaligned, the time required increases to 66,385 microseconds -- about a 27% speed penalty. The following chart illustrates the performance pattern of aligned memory accesses versus unaligned accesses:

Figure 7. Single-byte access versus double-byte access

The first thing you notice is that accessing memory one byte at a time is uniformly slow. The second item of interest is that when accessing memory two bytes at a time, whenever the address is not evenly divisible by two, that 27% speed penalty rears its ugly head.

Now up the ante, and process the buffer four bytes at a time:

Listing 3. Munging data four bytes at a time

void Munge16( void *data, uint32_t size ) {

uint16_t *data16 = (uint16_t*) data;

uint16_t *data16End = data16 + (size >> 1); /* Divide size by 2. */

uint8_t *data8 = (uint8_t*) data16End;

uint8_t *data8End = data8 + (size & 0x00000001); /* Strip upper 31 bits. */

while( data16 != data16End ) {

*data16++ = -*data16;

}

while( data8 != data8End ) {

*data8++ = -*data8;

}

}

This function processes an aligned buffer in 43,043 microseconds and an unaligned buffer in 55,775 microseconds, respectively. Thus, on this test machine, accessing unaligned memory four bytes at a time is slower than accessing aligned memory two bytes at a time:

Figure 8. Single- versus double- versus quad-byte access

Now for the horror story: processing the buffer eight bytes at a time.

Listing 4. Munging data eight bytes at a time

void Munge32( void *data, uint32_t size ) {

uint32_t *data32 = (uint32_t*) data;

uint32_t *data32End = data32 + (size >> 2); /* Divide size by 4. */

uint8_t *data8 = (uint8_t*) data32End;

uint8_t *data8End = data8 + (size & 0x00000003); /* Strip upper 30 bits. */

while( data32 != data32End ) {

*data32++ = -*data32;

}

while( data8 != data8End ) {

*data8++ = -*data8;

}

}

Munge64 processes

an aligned buffer in 39,085 microseconds -- about 10% faster than

processing the buffer four bytes at a time. However, processing an

unaligned buffer takes an amazing 1,841,155 microseconds -- two orders

of magnitude slower than aligned access, an outstanding 4,610%

performance penalty!

What happened? Because modern PowerPC processors lack hardware support for unaligned floating-point access, the processor throws an exception for each unaligned access. The operating system catches this exception and performs the alignment in software. Here's a chart illustrating the penalty, and when it occurs:

Figure 9. Multiple-byte access comparison

The penalties for one-, two- and four-byte unaligned access are dwarfed by the horrendous unaligned eight-byte penalty. Maybe this chart, removing the top (and thus the tremendous gulf between the two numbers), will be clearer:

Figure 10. Multiple-byte access comparison #2

There's another subtle insight hidden in this data. Compare eight-byte access speeds on four-byte boundaries:

Figure 11. Multiple-byte access comparison #3

Notice accessing memory eight bytes at a time on four- and twelve- byte boundaries is slower than reading the same memory four or even two bytes at a time. While PowerPCs have hardware support for four-byte aligned eight-byte doubles, you still pay a performance penalty if you use that support. Granted, it's no where near the 4,610% penalty, but it's certainly noticeable. Moral of the story: accessing memory in large chunks can be slower than accessing memory in small chunks, if that access is not aligned.

All modern processors offer atomic instructions. These special instructions are crucial for synchronizing two or more concurrent tasks. As the name implies, atomic instructions must be indivisible -- that's why they're so handy for synchronization: they can't be preempted.

It turns out that in order for atomic instructions to perform correctly, the addresses you pass them must be at least four-byte aligned. This is because of a subtle interaction between atomic instructions and virtual memory.

If an address is unaligned, it requires at least two memory accesses. But what happens if the desired data spans two pages of virtual memory? This could lead to a situation where the first page is resident while the last page is not. Upon access, in the middle of the instruction, a page fault would be generated, executing the virtual memory management swap-in code, destroying the atomicity of the instruction. To keep things simple and correct, both the 68K and PowerPC require that atomically manipulated addresses always be at least four-byte aligned.

Unfortunately, the PowerPC does not throw an exception when atomically storing to an unaligned address. Instead, the store simply always fails. This is bad because most atomic functions are written to retry upon a failed store, under the assumption they were preempted. These two circumstances combine to where your program will go into an infinite loop if you attempt to atomically store to an unaligned address. Oops.

Altivec is all about speed. Unaligned memory access slows down the processor and costs precious transistors. Thus, the Altivec engineers took a page from the MIPS playbook and simply don't support unaligned memory access. Because Altivec works with sixteen-byte chunks at a time, all addresses passed to Altivec must be sixteen-byte aligned. What's scary is what happens if your address is not aligned.

Altivec won't throw an exception to warn you about the unaligned address. Instead, Altivec simply ignores the lower four bits of the address and charges ahead, operating on the wrong address. This means your program may silently corrupt memory or return incorrect results if you don't explicitly make sure all your data is aligned.

There is an advantage to Altivec's bit-stripping ways. Because you don't need to explicitly truncate (align-down) an address, this behavior can save you an instruction or two when handing addresses to the processor.

This is not to say Altivec can't process unaligned memory. You can find detailed instructions how to do so on the Altivec Programming Environments Manual (see Resources). It requires more work, but because memory is so slow compared to the processor, the overhead for such shenanigans is surprisingly low.

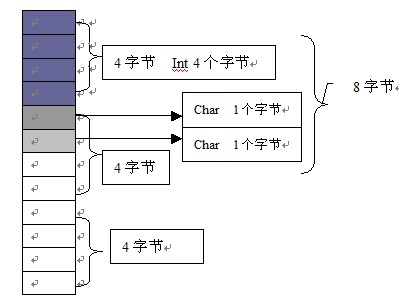

Examine the following structure:

Listing 5. An innocent structure

void Munge64( void *data, uint32_t size ) {

typedef struct {

char    a;

long    b;

char    c;

}   Struct;What is the size of this structure in bytes? Many programmers will answer "6 bytes." It makes sense: one byte for a, four bytes forb and another byte for c. 1 + 4 + 1 equals 6. Here's how it would lay out in memory:

| Field Type | Field Name | Field Offset | Field Size | Field End |

char |

a |

0 | 1 | 1 |

long |

b |

1 | 4 | 5 |

char |

c |

5 | 1 | 6 |

| Total Size in Bytes: | 6 |

However, if you were to ask your compiler to sizeof( Struct ),

chances are the answer you'd get back would be greater than six,

perhaps eight or even twenty-four. There's two reasons for this:

backwards compatibility and efficiency.

First,

backwards compatibility. Remember the 68000 was a processor with

two-byte memory access granularity, and would throw an exception upon

encountering an odd address. If you were to read from or write to field b,

you'd attempt to access an odd address. If a debugger weren't

installed, the old Mac OS would throw up a System Error dialog box with

one button: Restart. Yikes!

So, instead of laying out your fields just the way you wrote them, the compiler padded the structure so that b and c would reside at even addresses:

| Field Type | Field Name | Field Offset | Field Size | Field End |

char |

a |

0 | 1 | 1 |

| padding | 1 | 1 | 2 | |

long |

b |

2 | 4 | 6 |

char |

c |

6 | 1 | 7 |

| padding | 7 | 1 | 8 | |

| Total Size in Bytes: | 8 |

Padding is the act of adding otherwise unused space to a structure to make fields line up in a desired way. Now, when the 68020 came out with built-in hardware support for unaligned memory access, this padding was unnecessary. However, it didn't hurt anything, and it even helped a little in performance.

The

second reason is efficiency. Nowadays, on PowerPC machines, two-byte

alignment is nice, but four-byte or eight-byte is better. You probably

don't care anymore that the original 68000 choked on unaligned

structures, but you probably care about potential 4,610% performance

penalties, which can happen if a double field doesn't sit aligned in a structure of your devising.

ÕåàÕ¡ÿÕ»╣Ú¢ÉÕà│Úö«µÿ»Ú£ÇÞªüþö╗Õø¥´╝üÕ£¿õ©ïÚØóþÜäõ©¡µûçµ£ëÞ»┤µÿÄõ¥ïÕ¡É

Examine the following structure:

Õªéµ×£Þï▒µûçþ£ïõ©ìµçé´╝îÚéúõ╣êÕÅ»õ╗Ñþø┤µÄÑþö¿õ©¡µûçõ¥ïÕªé´╝êhttp://www.cppblog.com/snailcong/archive/2009/03/16/76705.html´╝ëµØÑÞ»┤´╝ü

What is the size of this structure in bytes? Many programmers will answer "6 bytes." It makes sense: one byte for a, four bytes forb and another byte for c. 1 + 4 + 1 equals 6. Here's how it would lay out in memory:

| Field Type | Field Name | Field Offset | Field Size | Field End |

char |

a |

0 | 1 | 1 |

long |

b |

1 | 4 | 5 |

char |

c |

5 | 1 | 6 |

| Total Size in Bytes: | 6 |

However, if you were to ask your compiler to sizeof( Struct ),

chances are the answer you'd get back would be greater than six,

perhaps eight or even twenty-four. There's two reasons for this:

backwards compatibility and efficiency.

First,

backwards compatibility. Remember the 68000 was a processor with

two-byte memory access granularity, and would throw an exception upon

encountering an odd address. If you were to read from or write to field b,

you'd attempt to access an odd address. If a debugger weren't

installed, the old Mac OS would throw up a System Error dialog box with

one button: Restart. Yikes!

So, instead of laying out your fields just the way you wrote them, the compiler padded the structure so that b and c would reside at even addresses:

| Field Type | Field Name | Field Offset | Field Size | Field End |

char |

a |

0 | 1 | 1 |

| padding | 1 | 1 | 2 | |

long |

b |

2 | 4 | 6 |

char |

c |

6 | 1 | 7 |

| padding | 7 | 1 | 8 | |

| Total Size in Bytes: | 8 |

Padding is the act of adding otherwise unused space to a structure to make fields line up in a desired way. Now, when the 68020 came out with built-in hardware support for unaligned memory access, this padding was unnecessary. However, it didn't hurt anything, and it even helped a little in performance.

The

second reason is efficiency. Nowadays, on PowerPC machines, two-byte

alignment is nice, but four-byte or eight-byte is better. You probably

don't care anymore that the original 68000 choked on unaligned

structures, but you probably care about potential 4,610% performance

penalties, which can happen if a double field doesn't sit aligned in a structure of your devising.

Õ¥êÕñÜõ║║Úâ¢þƒÑÚüôµÿ»ÕåàÕ¡ÿÕ»╣ڢɵëÇÚÇáµêÉþÜäÕăÕøá´╝îÕì┤Ú▓£µ£ëõ║║ÕæèÞ»ëõ¢áÕåàÕ¡ÿÕ»╣Ú¢ÉþÜäÕƒ║µ£¼ÕăþÉå´╝üõ©èÚØóõ¢£ÞÇàÕ░▒ÕüÜõ║åÞºúÚçè!

õ©ë┬á┬áõ©ìµçéÕåàÕ¡ÿÕ»╣Ú¢ÉÕ░åÚÇáµêÉþÜäÕÅ»Þâ¢Õ¢▒ÕôìÕªéõ©ï

- Your software may hit performance-killing unaligned memory access exceptions, which invoke very expensive alignment exception handlers.

- Your application may attempt to atomically store to an unaligned address, causing your application to lock up.

- Your application may attempt to pass an unaligned address to Altivec, resulting in Altivec reading from and/or writing to the wrong part of memory, silently corrupting data or yielding incorrect results.

-

õ©ÇÒÇüÕåàÕ¡ÿÕ»╣Ú¢ÉþÜäÕăÕøá - ÕñºÚâ¿ÕêåþÜäÕÅéÞÇâÞÁäµûÖÚ⢵ÿ»Õªéµÿ»Þ»┤þÜä´╝Ü

1ÒÇüÕ╣│ÕÅ░ÕăÕøá(þº╗µñìÕăÕøá)´╝Üõ©ìµÿ»µëǵ£ëþÜäþí¼õ╗ÂÕ╣│ÕÅ░Úâ¢Þâ¢Þ«┐Úù«õ╗╗µäÅÕ£░ÕØÇõ©èþÜäõ╗╗µäŵò░µì«þÜä´╝øµƒÉõ║øþí¼õ╗ÂÕ╣│ÕÅ░ÕŬÞâ¢Õ£¿µƒÉõ║øÕ£░ÕØÇÕñäÕÅûµƒÉõ║øþë╣Õ«Üþ▒╗Õ×ïþÜäµò░µì«´╝îÕɪÕêÖµèøÕç║þí¼õ╗ÂÕ╝éÕ©©ÒÇé

2ÒÇüµÇºÞâ¢ÕăÕøá´╝ܵò░µì«þ╗ôµ×ä(Õ░ñÕàµÿ»µáê)Õ║öÞ»ÑÕ░¢ÕÅ»Þâ¢Õ£░Õ£¿Þç¬þäÂÞ¥╣þòîõ©èÕ»╣Ú¢ÉÒÇéÕăÕøáÕ£¿õ║Ä´╝îõ©║õ║åÞ«┐Úù«µ£¬Õ»╣Ú¢ÉþÜäÕåàÕ¡ÿ´╝îÕñäþÉåÕÖ¿Ú£ÇÞªüõ¢£õ©ñµ¼íÕåàÕ¡ÿÞ«┐Úù«´╝øÞÇîÕ»╣Ú¢ÉþÜäÕåàÕ¡ÿÞ«┐Úù«õ╗àÚ£ÇÞªüõ©Çµ¼íÞ«┐Úù«ÒÇé

õ║îÒÇüÕ»╣Ú¢ÉÞºäÕêÖ

µ»Åõ©¬þë╣Õ«ÜÕ╣│ÕÅ░õ©èþÜäþ╝ûÞ»æÕÖ¿Ú⢵£ëÞç¬ÕÀ▒þÜäÚ╗ÿÞ«ñÔÇ£Õ»╣Ú¢Éþ│╗µò░ÔÇØ(õ╣ƒÕŽջ╣ڢɵ¿íµò░)ÒÇéþ¿ïÕ║ÅÕæÿÕÅ»õ╗ÑÚÇÜÞ┐çÚóäþ╝ûÞ»æÕæ¢õ╗ñ#pragma pack(n)´╝în=1,2,4,8,16µØѵö╣ÕÅÿÞ┐Öõ©Çþ│╗µò░´╝îÕàÂõ©¡þÜänÕ░▒µÿ»õ¢áÞªüµîçÕ«ÜþÜäÔÇ£Õ»╣Ú¢Éþ│╗µò░ÔÇØÒÇé

ÞºäÕêÖ´╝Ü

1ÒÇüµò░µì«µêÉÕæÿÕ»╣Ú¢ÉÞºäÕêÖ´╝Üþ╗ôµ×ä(struct)(µêûÞüöÕÉê(union))þÜäµò░µì«µêÉÕæÿ´╝îþ¼¼õ©Çõ©¬µò░µì«µêÉÕæÿµö¥Õ£¿offsetõ©║0þÜäÕ£░µû╣´╝îõ╗ÑÕÉĵ»Åõ©¬µò░µì«µêÉÕæÿþÜäÕ»╣ڢɵîëþàº#pragma packµîçÕ«ÜþÜäµò░ÕÇ╝ÕÆîÞ┐Öõ©¬µò░µì«µêÉÕæÿ

Þç¬Þ║½Úò┐Õ║ªõ©¡´╝îµ»öÞ¥âÕ░ÅþÜäÚéúõ©¬Þ┐øÞíîÒÇé

2ÒÇüþ╗ôµ×ä(µêûÞüöÕÉê)þÜäµò┤õ¢ôÕ»╣Ú¢ÉÞºäÕêÖ´╝ÜÕ£¿µò░µì«µêÉÕæÿÕ«îµêÉÕÉäÞç¬Õ»╣Ú¢Éõ╣ïÕÉÄ´╝îþ╗ôµ×ä(µêûÞüöÕÉê)µ£¼Þ║½õ╣ƒÞªüÞ┐øÞíîÕ»╣ڢɴ╝îÕ»╣Ú¢ÉÕ░åµîëþàº#pragma packµîçÕ«ÜþÜäµò░ÕÇ╝ÕÆîþ╗ôµ×ä(µêûÞüöÕÉê)µ£ÇÕñºµò░µì«µêÉÕæÿÚò┐Õ║ªõ©¡´╝îµ»öÞ¥âÕ░ÅþÜäÚéúõ©¬Þ┐øÞíîÒÇé

3ÒÇüþ╗ôÕÉê1ÒÇü2ÕÅ»µÄ¿µû¡´╝ÜÕ¢ô#pragma packþÜänÕÇ╝þ¡ëõ║ĵêûÞÂàÞ┐çµëǵ£ëµò░µì«µêÉÕæÿÚò┐Õ║ªþÜäµùÂÕÇÖ´╝îÞ┐Öõ©¬nÕÇ╝þÜäÕñºÕ░ÅÕ░åõ©ìõ║ºþöƒõ╗╗õ¢òµòêµ×£ÒÇé

õ©ëÒÇüÞ»òÚ¬î

õ©ïÚØóµêæõ╗¼ÚÇÜÞ┐çõ©Çþ│╗Õêùõ¥ïÕ¡ÉþÜäÞ»ªþ╗åÞ»┤µÿĵØÑÞ»üµÿÄÞ┐Öõ©¬ÞºäÕêÖ

þ╝ûÞ»æÕÖ¿´╝ÜGCC 3.4.2ÒÇüVC6.0

Õ╣│ÕÅ░´╝ÜWindows XP

Õà©Õ×ïþÜästructÕ»╣Ú¢É

structÕ«Üõ╣ë´╝Ü

#pragma pack(n) /* n = 1, 2, 4, 8, 16 */

struct test_t {

int a;

char b;

short c;

char d;

};

#pragma pack(n)

ÚªûÕàêþí«Þ«ñÕ£¿Þ»òÚ¬îÕ╣│ÕÅ░õ©èþÜäÕÉäõ©¬þ▒╗Õ×ïþÜäsize´╝îþ╗ÅÚ¬îÞ»üõ©ñõ©¬þ╝ûÞ»æÕÖ¿þÜäÞ¥ôÕç║ÕØçõ©║´╝Ü

sizeof(char) = 1

sizeof(short) = 2

sizeof(int) = 4

Þ»òÚ¬îÞ┐çþ¿ïÕªéõ©ï´╝ÜÚÇÜÞ┐ç#pragma pack(n)µö╣ÕÅÿÔÇ£Õ»╣Ú¢Éþ│╗µò░ÔÇØ´╝îþäÂÕÉÄÕ»ƒþ£ïsizeof(struct test_t)þÜäÕÇ╝ÒÇé

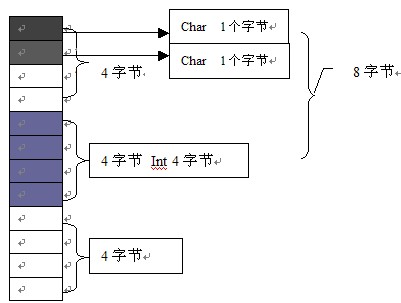

1ÒÇü1Õ¡ùÞèéÕ»╣Ú¢É(#pragma pack(1))

Þ¥ôÕç║þ╗ôµ×£´╝Üsizeof(struct test_t) = 8 [õ©ñõ©¬þ╝ûÞ»æÕÖ¿Þ¥ôÕç║õ©ÇÞç┤]

Õêåµ×ÉÞ┐çþ¿ï´╝Ü

1) µêÉÕæÿµò░µì«Õ»╣Ú¢É

#pragma pack(1)

struct test_t {

┬áint a;┬á /* Úò┐Õ║ª4 > 1 µîë1Õ»╣ڢɴ╝øÞÁÀÕºïoffset=0 0%1=0´╝øÕ¡ÿµö¥õ¢ìþ¢«Õî║Úù┤[0,3] */

┬áchar b;┬á /* Úò┐Õ║ª1 = 1 µîë1Õ»╣ڢɴ╝øÞÁÀÕºïoffset=4 4%1=0´╝øÕ¡ÿµö¥õ¢ìþ¢«Õî║Úù┤[4] */

┬áshort c; /* Úò┐Õ║ª2 > 1 µîë1Õ»╣ڢɴ╝øÞÁÀÕºïoffset=5 5%1=0´╝øÕ¡ÿµö¥õ¢ìþ¢«Õî║Úù┤[5,6] */

┬áchar d;┬á /* Úò┐Õ║ª1 = 1 µîë1Õ»╣ڢɴ╝øÞÁÀÕºïoffset=7 7%1=0´╝øÕ¡ÿµö¥õ¢ìþ¢«Õî║Úù┤[7] */

};

#pragma pack()

µêÉÕæÿµÇ╗ÕñºÕ░Å=8

2) µò┤õ¢ôÕ»╣Ú¢É

µò┤õ¢ôÕ»╣Ú¢Éþ│╗µò░ = min((max(int,short,char), 1) = 1

µò┤õ¢ôÕñºÕ░Å(size)=$(µêÉÕæÿµÇ╗ÕñºÕ░Å) µîë $(µò┤õ¢ôÕ»╣Ú¢Éþ│╗µò░) Õ£åµò┤ = 8 /* 8%1=0 */ [µ│¿1]

2ÒÇü2Õ¡ùÞèéÕ»╣Ú¢É(#pragma pack(2))

Þ¥ôÕç║þ╗ôµ×£´╝Üsizeof(struct test_t) = 10 [õ©ñõ©¬þ╝ûÞ»æÕÖ¿Þ¥ôÕç║õ©ÇÞç┤]

Õêåµ×ÉÞ┐çþ¿ï´╝Ü

1) µêÉÕæÿµò░µì«Õ»╣Ú¢É

#pragma pack(2)

struct test_t {

┬áint a;┬á /* Úò┐Õ║ª4 > 2 µîë2Õ»╣ڢɴ╝øÞÁÀÕºïoffset=0 0%2=0´╝øÕ¡ÿµö¥õ¢ìþ¢«Õî║Úù┤[0,3] */

┬áchar b;┬á /* Úò┐Õ║ª1 < 2 µîë1Õ»╣ڢɴ╝øÞÁÀÕºïoffset=4 4%1=0´╝øÕ¡ÿµö¥õ¢ìþ¢«Õî║Úù┤[4] */

┬áshort c; /* Úò┐Õ║ª2 = 2 µîë2Õ»╣ڢɴ╝øÞÁÀÕºïoffset=6 6%2=0´╝øÕ¡ÿµö¥õ¢ìþ¢«Õî║Úù┤[6,7] */

┬áchar d;┬á /* Úò┐Õ║ª1 < 2 µîë1Õ»╣ڢɴ╝øÞÁÀÕºïoffset=8 8%1=0´╝øÕ¡ÿµö¥õ¢ìþ¢«Õî║Úù┤[8] */

};

#pragma pack()

µêÉÕæÿµÇ╗ÕñºÕ░Å=9

2) µò┤õ¢ôÕ»╣Ú¢É

µò┤õ¢ôÕ»╣Ú¢Éþ│╗µò░ = min((max(int,short,char), 2) = 2

µò┤õ¢ôÕñºÕ░Å(size)=$(µêÉÕæÿµÇ╗ÕñºÕ░Å) µîë $(µò┤õ¢ôÕ»╣Ú¢Éþ│╗µò░) Õ£åµò┤ = 10 /* 10%2=0 */

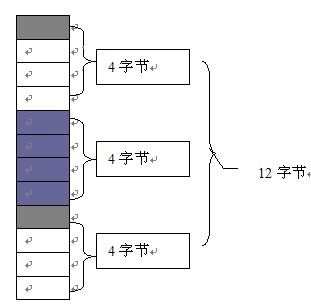

3ÒÇü4Õ¡ùÞèéÕ»╣Ú¢É(#pragma pack(4))

Þ¥ôÕç║þ╗ôµ×£´╝Üsizeof(struct test_t) = 12 [õ©ñõ©¬þ╝ûÞ»æÕÖ¿Þ¥ôÕç║õ©ÇÞç┤]

Õêåµ×ÉÞ┐çþ¿ï´╝Ü

1) µêÉÕæÿµò░µì«Õ»╣Ú¢É

#pragma pack(4)

struct test_t {

┬áint a;┬á /* Úò┐Õ║ª4 = 4 µîë4Õ»╣ڢɴ╝øÞÁÀÕºïoffset=0 0%4=0´╝øÕ¡ÿµö¥õ¢ìþ¢«Õî║Úù┤[0,3] */

┬áchar b;┬á /* Úò┐Õ║ª1 < 4 µîë1Õ»╣ڢɴ╝øÞÁÀÕºïoffset=4 4%1=0´╝øÕ¡ÿµö¥õ¢ìþ¢«Õî║Úù┤[4] */

┬áshort c; /* Úò┐Õ║ª2 < 4 µîë2Õ»╣ڢɴ╝øÞÁÀÕºïoffset=6 6%2=0´╝øÕ¡ÿµö¥õ¢ìþ¢«Õî║Úù┤[6,7] */

┬áchar d;┬á /* Úò┐Õ║ª1 < 4 µîë1Õ»╣ڢɴ╝øÞÁÀÕºïoffset=8 8%1=0´╝øÕ¡ÿµö¥õ¢ìþ¢«Õî║Úù┤[8] */

};

#pragma pack()

µêÉÕæÿµÇ╗ÕñºÕ░Å=9

2) µò┤õ¢ôÕ»╣Ú¢É

µò┤õ¢ôÕ»╣Ú¢Éþ│╗µò░ = min((max(int,short,char), 4) = 4

µò┤õ¢ôÕñºÕ░Å(size)=$(µêÉÕæÿµÇ╗ÕñºÕ░Å) µîë $(µò┤õ¢ôÕ»╣Ú¢Éþ│╗µò░) Õ£åµò┤ = 12 /* 12%4=0 */

4ÒÇü8Õ¡ùÞèéÕ»╣Ú¢É(#pragma pack(8))

Þ¥ôÕç║þ╗ôµ×£´╝Üsizeof(struct test_t) = 12 [õ©ñõ©¬þ╝ûÞ»æÕÖ¿Þ¥ôÕç║õ©ÇÞç┤]

Õêåµ×ÉÞ┐çþ¿ï´╝Ü

1) µêÉÕæÿµò░µì«Õ»╣Ú¢É

#pragma pack(8)

struct test_t {

┬áint a;┬á /* Úò┐Õ║ª4 < 8 µîë4Õ»╣ڢɴ╝øÞÁÀÕºïoffset=0 0%4=0´╝øÕ¡ÿµö¥õ¢ìþ¢«Õî║Úù┤[0,3] */

┬áchar b;┬á /* Úò┐Õ║ª1 < 8 µîë1Õ»╣ڢɴ╝øÞÁÀÕºïoffset=4 4%1=0´╝øÕ¡ÿµö¥õ¢ìþ¢«Õî║Úù┤[4] */

┬áshort c; /* Úò┐Õ║ª2 < 8 µîë2Õ»╣ڢɴ╝øÞÁÀÕºïoffset=6 6%2=0´╝øÕ¡ÿµö¥õ¢ìþ¢«Õî║Úù┤[6,7] */

┬áchar d;┬á /* Úò┐Õ║ª1 < 8 µîë1Õ»╣ڢɴ╝øÞÁÀÕºïoffset=8 8%1=0´╝øÕ¡ÿµö¥õ¢ìþ¢«Õî║Úù┤[8] */

};

#pragma pack()

µêÉÕæÿµÇ╗ÕñºÕ░Å=9

2) µò┤õ¢ôÕ»╣Ú¢É

µò┤õ¢ôÕ»╣Ú¢Éþ│╗µò░ = min((max(int,short,char), 8) = 4

µò┤õ¢ôÕñºÕ░Å(size)=$(µêÉÕæÿµÇ╗ÕñºÕ░Å) µîë $(µò┤õ¢ôÕ»╣Ú¢Éþ│╗µò░) Õ£åµò┤ = 12 /* 12%4=0 */

5ÒÇü16Õ¡ùÞèéÕ»╣Ú¢É(#pragma pack(16))

Þ¥ôÕç║þ╗ôµ×£´╝Üsizeof(struct test_t) = 12 [õ©ñõ©¬þ╝ûÞ»æÕÖ¿Þ¥ôÕç║õ©ÇÞç┤]

Õêåµ×ÉÞ┐çþ¿ï´╝Ü

1) µêÉÕæÿµò░µì«Õ»╣Ú¢É

#pragma pack(16)

struct test_t {

┬áint a;┬á /* Úò┐Õ║ª4 < 16 µîë4Õ»╣ڢɴ╝øÞÁÀÕºïoffset=0 0%4=0´╝øÕ¡ÿµö¥õ¢ìþ¢«Õî║Úù┤[0,3] */

┬áchar b;┬á /* Úò┐Õ║ª1 < 16 µîë1Õ»╣ڢɴ╝øÞÁÀÕºïoffset=4 4%1=0´╝øÕ¡ÿµö¥õ¢ìþ¢«Õî║Úù┤[4] */

┬áshort c; /* Úò┐Õ║ª2 < 16 µîë2Õ»╣ڢɴ╝øÞÁÀÕºïoffset=6 6%2=0´╝øÕ¡ÿµö¥õ¢ìþ¢«Õî║Úù┤[6,7] */

┬áchar d;┬á /* Úò┐Õ║ª1 < 16 µîë1Õ»╣ڢɴ╝øÞÁÀÕºïoffset=8 8%1=0´╝øÕ¡ÿµö¥õ¢ìþ¢«Õî║Úù┤[8] */

};

#pragma pack()

µêÉÕæÿµÇ╗ÕñºÕ░Å=9

2) µò┤õ¢ôÕ»╣Ú¢É

µò┤õ¢ôÕ»╣Ú¢Éþ│╗µò░ = min((max(int,short,char), 16) = 4

µò┤õ¢ôÕñºÕ░Å(size)=$(µêÉÕæÿµÇ╗ÕñºÕ░Å) µîë $(µò┤õ¢ôÕ»╣Ú¢Éþ│╗µò░) Õ£åµò┤ = 12 /* 12%4=0 */

8Õ¡ùÞèéÕÆî16Õ¡ùÞèéÕ»╣Ú¢ÉÞ»òÚ¬îÞ»üµÿÄõ║åÔÇ£ÞºäÕêÖÔÇØþÜäþ¼¼3þé╣´╝ÜÔÇ£Õ¢ô#pragma packþÜänÕÇ╝þ¡ëõ║ĵêûÞÂàÞ┐çµëǵ£ëµò░µì«µêÉÕæÿÚò┐Õ║ªþÜäµùÂÕÇÖ´╝îÞ┐Öõ©¬nÕÇ╝þÜäÕñºÕ░ÅÕ░åõ©ìõ║ºþöƒõ╗╗õ¢òµòêµ×£ÔÇØÒÇé

Õåà Õ¡ÿÕêåÚàìõ©ÄÕåàÕ¡ÿÕ»╣ڢɵÿ»õ©¬Õ¥êÕñìµØéþÜäõ©£ÞÑ┐´╝îõ©ìõ¢åõ©ÄÕàÀõ¢ôÕ«×þÄ░Õ»åÕêçþø©Õà│´╝îÞÇîõ©öÕ£¿õ©ìÕÉîþÜäµôìõ¢£þ│╗þ╗ƒ´╝îþ╝ûÞ»æÕÖ¿µêûþí¼õ╗ÂÕ╣│ÕÅ░õ©èÞºäÕêÖõ╣ƒõ©ìÕ░¢þø©ÕÉî´╝îÞÖ¢þäÂþø«ÕëìÕñºÕñܵò░þ│╗þ╗ƒ/Þ»¡Þ¿ÇÚâ¢ÕàÀµ£ë Þç¬Õè¿þ«íþÉåÒÇüÕêåÚàìÕ╣ÂÚÜÉÞùÅõ¢ÄÕ▒éµôìõ¢£þÜäÕèƒÞ⢴╝îõ¢┐Õ¥ùÕ║öþö¿þ¿ïÕ║Åþ╝ûÕåÖÕñºõ©║þ«ÇÕìò´╝îþ¿ïÕ║ÅÕæÿõ©ìÕ£¿Ú£ÇÞªüÞÇâÞÖæÞ»ªþ╗åþÜäÕåàÕ¡ÿÕêåÚàìÚù«ÚóÿÒÇéõ¢åµÿ»´╝îÕ£¿þ│╗þ╗ƒµêûÚ®▒Õè¿þ║ºõ╗ÑÞç│õ║ÄÚ½ÿÕ«×µù´╝îÚ½ÿõ┐ØÕ»åµÇºþÜä þ¿ïÕ║ÅÕ╝ÇÕÅæÞ┐çþ¿ïõ©¡´╝îþ¿ïÕ║ÅÕåàÕ¡ÿÕêåÚàìÚù«Úóÿõ╗ìµùºµÿ»õ┐ØÞ»üµò┤õ©¬þ¿ïÕ║Åþ¿│Õ«Ü´╝îÕ«ëÕà¿´╝îÚ½ÿµòêþÜäÕƒ║þíÇÒÇé -

[注1]

õ╗Çõ╣êµÿ»ÔÇ£Õ£åµò┤ÔÇØ´╝ƒ

õ©¥õ¥ïÞ»┤µÿÄ´╝ÜÕªéõ©èÚØóþÜä8Õ¡ùÞèéÕ»╣Ú¢Éõ©¡þÜäÔÇ£µò┤õ¢ôÕ»╣Ú¢ÉÔÇØ´╝îµò┤õ¢ôÕñºÕ░Å=9┬áµîë┬á4┬áÕ£åµò┤┬á= 12

Õ£åµò┤þÜäÞ┐çþ¿ï´╝Üõ╗Ä9Õ╝Çպﵻŵ¼íÕèáõ©Ç´╝îþ£ïµÿ»ÕɪÞâ¢Þó½4µò┤ÚÖñ´╝îÞ┐ÖÚçî9´╝î10´╝î11ÕØçõ©ìÞâ¢Þó½4µò┤ÚÖñ´╝îÕê░12µùÂÕÅ»õ╗Ñ´╝îÕêÖÕ£åµò┤þ╗ôµØƒÒÇé

- 2011-11-10 17:28

- µÁÅÞºê 5006

- Þ»äÞ«║(0)

- Õêåþ▒╗:þ╝ûþ¿ïÞ»¡Þ¿Ç

- µƒÑþ£ïµø┤ÕñÜ

ÕÅæÞí¿Þ»äÞ«║

-

ÕêáÚÖñõ╗úþáüõ©¡þÜäµ│¿Úçè

2012-05-09 14:28 1064    #include <stdio.h> ... -

_finddata_t

2011-11-01 23:11 1768_finddata_t ÒÇÇÒÇÇstruct _finddata ... -

CÕ«ÅÕ«Üõ╣ë

2011-10-27 15:22 875CÞ»¡Þ¿ÇÕ©©þö¿Õ«ÅÕ«Üõ╣ë 01: Úÿ▓µ¡óõ©Çõ©¬Õñ┤µûçõ╗ÂÞó½ÚçìÕñìÕîàÕɽ #ifn ... -

CÞ»¡Þ¿ÇÕÅÿÚçÅÕú░µÿÄÕåàÕ¡ÿÕêåÚàì

2011-10-21 11:49 1023õ©Çõ©¬þö▒C/C++þ╝ûÞ»æþÜäþ¿ïÕ║ÅÕì ...

þø©Õà│µÄ¿ÞìÉ

sizeof(þ╗ôµ×äõ¢ô)µÿ»µîçþ╗ôµ×äõ¢ôÕ£¿ÕåàÕ¡ÿõ©¡þÜäÕñºÕ░Å´╝îÞÇîÕåàÕ¡ÿÕ»╣ڢɵÿ»µîçþ╝ûÞ»æÕÖ¿õ©║õ║åµÅÉÚ½ÿþ¿ïÕ║ÅþÜäµòêþÄçÕÆîÕÅ»þº╗µñìµÇº´╝îÕ»╣ÕåàÕ¡ÿÕ£░ÕØÇþÜäÚÖÉÕêÂÕÆîÞ░âµò┤ÒÇé Õ£¿CÞ»¡Þ¿Çõ©¡´╝îþ╗ôµ×äõ¢ôþÜäÕñºÕ░Åõ©ìõ╗àÕÅûÕå│õ║Äþ╗ôµ×äõ¢ôµêÉÕæÿþÜäõ©¬µò░ÕÆîþ▒╗Õ×ï´╝îÞ┐ÿÕÅûÕå│õ║ÄÕåàÕ¡ÿÕ»╣Ú¢ÉþÜäÞºäÕêÖÒÇéÕ£¿...

õ©ïÚØóµÿ»õ©Çõ║øÕà│õ║Ä`sizeof`ÕÆî`struct`þ╗ôµ×äõ¢ôÕåàÕ¡ÿÕ»╣Ú¢ÉþÜäÕ©©ÞºüþƒÑÞ»åþé╣´╝Ü 1. **µêÉÕæÿÕÅÿÚçÅÕ»╣Ú¢É**´╝Üþ╝ûÞ»æÕÖ¿õ╝ܵá╣µì«µ»Åõ©¬µêÉÕæÿÕÅÿÚçÅþÜäÕñºÕ░ÅÕÆîÕ»╣Ú¢ÉÞºäÕêÖÞ┐øÞíîµÄÆÕêù´╝îõ¢┐Õ¥ùµ»Åõ©¬µêÉÕæÿÕÅÿÚçÅþÜäÕ£░ÕØÇÚ⢵ÿ»ÕàÂÞç¬Þ║½ÕñºÕ░ÅþÜäµò┤µò░ÕÇìÒÇé 2. **Õí½Õàà´╝êPadding...

޻ѵûçµíúµÅÉõ¥øõ║åÞ»ªþ╗åÞºúÕå│þ╗ôµ×äõ¢ôsizeofÚù«Úóÿ,õ╗Äþ╗ôµ×äõ¢ôÕåàÕÅÿÚçŵëÇÕìáþ®║Úù┤ÕñºÕ░Å,Ú╗ÿÞ«ñÕåàÕ¡ÿÕ»╣Ú¢ÉÕñºÕ░Å,Õ╝║ÕêÂÕåàÕ¡ÿÕ»╣ڢɵû╣µ│ò,ÕÅÿÚçÅÕ£¿ÕåàÕ¡ÿõ©¡Õ©âÕ▒ÇþÜäÞ»ªþ╗åÕêåµ×É,Þ»¡Þ¿ÇÞ¿Çþ«ÇµäÅÞÁà,þ╗صùáÕ║ƒÞ»Ø,õ©║Þ»╗ÞÇàÞºúÕå│õ║åÕñºÚçÅÕ»╗µë¥õ╣ªþ▒ìþÜäþ⪵ü╝,Þ»╗ÞÇàÕÅ»õ╗ÑÞè▒Þ┤╣ÕçáÕêåÚƃþÜä...

sizeofõ©Äþ╗ôµ×äõ¢ôÕÆîÕà▒ÕÉîõ¢ô.PDF þë╣Õê½þë╣Õê½ÚÜ¥µë¥þÜäõ©Çµ£¼õ╣ª´╝îõ©ìõ©ïõ╝ÜÕÉĵéöþÜä

sizeofÞ┐øÞíîþ╗ôµ×äõ¢ôÕñºÕ░ÅþÜäÕêñµû¡.sizeofÞ┐øÞíîþ╗ôµ×äõ¢ôÕñºÕ░ÅþÜäÕêñµû¡.sizeofÞ┐øÞíîþ╗ôµ×äõ¢ôÕñºÕ░ÅþÜäÕêñµû¡.

Õ£¿CÞ»¡Þ¿Çõ©¡´╝îÕåàÕ¡ÿÕ¡ùÞèéÕ»╣ڢɵÿ»µîçþ╝ûÞ»æÕÖ¿õ©║õ║åµÅÉÚ½ÿþ¿ïÕ║ŵëºÞíîµòêþÄçÕÆîÕÅ»þº╗µñìµÇº´╝îÞÇîÕ»╣þ╗ôµ×äõ¢ôµêÉÕæÿÕ£¿ÕåàÕ¡ÿõ©¡þÜäÕ¡ÿÕ鿵û╣Õ╝ÅÞ┐øÞíîþÜäÞ░âµò┤ÒÇéÞ┐Öõ©¬Þ░âµò┤µÿ»Õƒ║õ║Äõ¢ôþ│╗þ╗ôµ×äþÜäÕ»╣Ú¢ÉÞºäÕêÖ´╝îµù¿Õ£¿µÅÉÚ½ÿþ¿ïÕ║ÅþÜäµëºÞíîµòêþÄçÕÆîÕÅ»þº╗µñìµÇºÒÇé Õ£¿ C Þ»¡Þ¿Çõ©¡´╝îsizeof ...

Õ£¿Þ«íþ«ùµ£║þºæÕ¡ªõ©¡´╝îÕåàÕ¡ÿÕ»╣ڢɴ╝êMemory Alignment´╝ëµÿ»þ╝ûþ¿ïõ©¡õ©Çõ©¬ÚçìÞªüþÜ䵪éÕ┐Á´╝îþë╣Õê½µÿ»Õ£¿ÕñäþÉåþ╗ôµ×äõ¢ô´╝êStructures´╝ëµùÂÒÇéÕåàÕ¡ÿÕ»╣Ú¢Éþí«õ┐Øõ║åµò░µì«Õ£¿ÕåàÕ¡ÿõ©¡þÜäÕ¡ÿÕ鿵û╣Õ╝ÅÞâ¢Õñƒµ£ëµòêÕ£░Þó½ÕñäþÉåÕÖ¿Þ«┐Úù«´╝îµÅÉÚ½ÿµÇºÞâ¢Õ╣ÂÚü┐Õàìµ¢£Õ£¿þÜäÚöÖÞ»»ÒÇéµ£¼µûçÕ░åµÀ▒ÕàÑ...

Õ£¿Þ«íþ«ùµ£║þ╝ûþ¿ïõ©¡´╝îÕ¡ùÞèéÕ»╣ڢɵÿ»õ©Çõ©¬ÚØ×Õ©©ÚçìÞªüþÜ䵪éÕ┐Á´╝îþë╣Õê½µÿ»Õ£¿µÂëÕÅèÕê░þ╗ôµ×äõ¢ôþÜäÕåàÕ¡ÿÕ©âÕ▒ǵùÂÒÇéþ╗ôµ×äõ¢ôþÜä`sizeof`µôìõ¢£þ¼ªÞ┐öÕø×þÜäµÿ»µò┤õ©¬þ╗ôµ×äõ¢ôÕ£¿ÕåàÕ¡ÿõ©¡Õìáþö¿þÜäÕ¡ùÞèéµò░´╝îÞÇîÞ┐Öõ©¬ÕÇ╝Õ╣Âõ©ìµÇ╗µÿ»þ«ÇÕìòÕ£░þ¡ëõ║ĵëǵ£ëµêÉÕæÿÕñºÕ░ÅþÜäµÇ╗ÕÆîÒÇéÞ┐Öµÿ»Õøáõ©║...

þ╗ôµ×äõ¢ôÕ£¿Þ«íþ«ùµ£║ÕåàÕ¡ÿõ©¡þÜäÕ»╣ڢɵû╣Õ╝Å Õ£¿ C Þ»¡Þ¿Çõ©¡´╝îþ╗ôµ×äõ¢ô´╝êstruct´╝ëµÿ»õ©ÇþºìÞç¬Õ«Üõ╣ëµò░µì«þ▒╗Õ×ï´╝îþö¿õ║Äþ╗äÕÉêÕñÜõ©¬ÕÅÿÚçÅõ╗Ñõ¥┐µø┤µû╣õ¥┐Õ£░þ╗äþ╗çÕÆîþ«íþÉåµò░µì«ÒÇéõ¢åµÿ»´╝îÕ¢ôµêæõ╗¼õ¢┐þö¿ sizeof Þ┐Éþ«ùþ¼ªµØÑÞÄÀÕÅûþ╗ôµ×äõ¢ôþÜäÕñºÕ░ŵù´╝îþ╗ÅÕ©©õ╝ÜÕÅæþÄ░þ╗ôµ×£õ©ÄÚóäµ£ƒ...

µ£¼þ»çµûçþ½áÕ░åÞ»ªþ╗åÞºúÚçèCÞ»¡Þ¿Çõ©¡þÜäþ╗ôµ×äõ¢ôÕåàÕ¡ÿÕ»╣Ú¢ÉÕăþÉå´╝îÕ╣ÂÚÇÜÞ┐çõ©Çõ©¬ÕàÀõ¢ôþÜäõ¥ïաɵØÑÞ»┤µÿÄÕªéõ¢òÞ«íþ«ùþ╗ôµ×äõ¢ôþÜäÕ«×ÚÖàÕñºÕ░ÅÒÇé #### õ╗Çõ╣êµÿ»ÕåàÕ¡ÿÕ»╣ڢɴ╝ƒ ÕåàÕ¡ÿÕ»╣ڢɵÿ»µîçþ╝ûÞ»æÕÖ¿õ©║õ║åµÅÉÚ½ÿþ¿ïÕ║ÅþÜäÞ┐ÉÞíîµòêþÄç´╝îÕ£¿Õ¡ÿÕé¿þ╗ôµ×äõ¢ôÕÅÿÚçŵùÂõ╝ÜÞç¬Õè¿Þ░âµò┤ÕàÂÕåàÚâ¿...

µ£¼µûçÕ░åµÀ▒ÕàѵÄóÞ«¿C++õ©¡þÜäÕåàÕ¡ÿÕ»╣ڢɵ£║Õê´╝îþë╣Õê½µÿ»þ╗ôµ×äõ¢ô´╝ê`struct`´╝ëÕ»╣ڢɵû╣ÚØó´╝îÕ╣µÅÉõ¥øÕàÀõ¢ôþÜäþñ║õ¥ïõ╗úþáüÞ┐øÞíîÞºúÚçèÒÇé #### õ║îÒÇüÕåàþ¢«þ▒╗Õ×ïþÜäÕñºÕ░Å Õåàþ¢«þ▒╗Õ×ïþÜäÕñºÕ░ŵÿ»µîçC++õ©¡Õƒ║µ£¼µò░µì«þ▒╗Õ×ïÕ£¿ÕåàÕ¡ÿõ©¡Õìáþö¿þÜäþ®║Úù┤ÕñºÕ░ÅÒÇéÞ┐Öõ║øþ▒╗Õ×ïÕîàµï¼õ¢åõ©ì...

ÕåàÕ¡ÿÕ»╣ڢɵÿ»µîçÕ£¿Þ«íþ«ùµ£║ÕåàÕ¡ÿõ©¡´╝îµò░µì«þÜäÕ¡ÿÕé¿Õ£░ÕØÇÕ┐àÚí╗þ¼ªÕÉêõ©ÇÕ«ÜÞºäÕêÖþÜäþÄ░Þ▒íÒÇéÞ┐ÖþºìÞºäÕêÖÚÇÜÕ©©þö▒þí¼õ╗ÂÕ╣│ÕÅ░ÕÆîþ╝ûÞ»æÕÖ¿Õà▒ÕÉîÕå│Õ«ÜÒÇéÕåàÕ¡ÿÕ»╣Ú¢ÉþÜäõ©╗ÞªüÕăÕøáµ£ëõ©ñõ©¬µû╣ÚØó´╝Ü 1. **Õ╣│ÕÅ░ÕăÕøá´╝êþº╗µñìÕăÕøá´╝ë**´╝Üõ©ìÕÉîþÜäþí¼õ╗ÂÕ╣│ÕÅ░Õ»╣µò░µì«þÜäÞ«┐Úù«Þâ¢Õèø...

Õ£¿C/C++þ╝ûþ¿ïõ©¡´╝îµò░µì«Õ»╣ڢɵÿ»þ╝ûÞ»æÕÖ¿Õ£¿ÕåàÕ¡ÿõ©¡Õ«ëµÄƵò░µì«þÜäõ©Çþºìµû╣Õ╝Å´╝îÕàÂþø«þÜäµÿ»õ©║õ║åõ╝ÿÕîûÕåàÕ¡ÿÞ«┐Úù«ÚǃÕ║ªÒÇéþ╝ûÞ»æÕÖ¿õ╝ܵá╣µì«þ▒╗Õ×ïÕñºÕ░ÅÒÇüþ╝ûÞ»æÕÖ¿þëêµ£¼õ╗ÑÕÅèÕ╣│ÕÅ░þÜäõ©ìÕÉîµØÑÕå│իܵò░µì«þÜäÕ»╣ڢɵû╣Õ╝ÅÒÇéµò░µì«Õ»╣Ú¢ÉÚÇÜÕ©©µÿ»µîçµò░µì«þ╗ôµ×ä´╝êÕªéþ╗ôµ×äõ¢ôÕÆîþ▒╗´╝ëõ©¡...

Õ£¿CÞ»¡Þ¿Çõ©¡´╝îÕåàÕ¡ÿÕ»╣ڢɵÿ»õ©Çõ©¬ÚçìÞªüþÜ䵪éÕ┐Á´╝îÕ«âµÂëÕÅèÕê░µò░µì«Õ£¿Þ«íþ«ùµ£║ÕåàÕ¡ÿõ©¡þÜäÕ¡ÿÕ鿵û╣Õ╝ÅÒÇéÕåàÕ¡ÿÕ»╣Ú¢ÉþÜäõ©╗Þªüþø«þÜäµÿ»µÅÉÚ½ÿµò░µì«Õ¡ÿÕÅûµòêþÄç´╝îÕçÅÕ░æCPUÞ«┐Úù«ÕåàÕ¡ÿµùÂþÜäÚóØÕñûÕ╝ÇÚöÇÒÇéÕ¢ôµò░µì«µîëþàºþë╣Õ«ÜþÜäÞºäÕêÖµÄÆÕêùÕ£¿ÕåàÕ¡ÿõ©¡´╝îÕÅ»õ╗ÑÚü┐ÕàìÕñäþÉåÕÖ¿Þ┐øÞíîõ©ìÕ┐àÞªü...

µ£¼µûçÕ░åÞ»ªþ╗åÞ«▓Þºúþ╗ôµ×äÕåàÕ¡ÿÕ»╣ڢɴ╝êStructMemory´╝ëþÜ䵪éÕ┐ÁÒÇüÕăÕøáõ╗ÑÕÅèÕªéõ¢òÚÇÜÞ┐ç`sizeof`Þ┐Éþ«ùþ¼ªµØÑþÉåÞºúÕàÂÕÀÑõ¢£ÕăþÉåÒÇé ÕåàÕ¡ÿÕ»╣ڢɵÿ»µîçµò░µì«Õ£¿ÕåàÕ¡ÿõ©¡þÜäÕ¡ÿÕ鿵û╣Õ╝Å´╝îÕ«âÚüÁÕ¥¬õ©ÇÕ«ÜþÜäÞºäÕêÖ´╝îþí«õ┐صò░µì«Þâ¢ÕñƒÞó½ÕñäþÉåÕÖ¿Ú½ÿµòêÕ£░Þ«┐Úù«ÒÇéÚÇÜÕ©©´╝îµ»Åõ©¬µò░µì«...

C++ õ©¡þÜäÕåàÕ¡ÿÞÁäµ║ÉÕ»╣Ú¢ÉÞºäÕêÖµÿ»µîçÕ£¿Õ¡ÿÕé¿ÕÖ¿õ©¡Õ»╣µò░µì«þÜäµÄÆÕêùµû╣Õ╝Å´╝îõ╗Ñþí«õ┐Øþ¿ïÕ║ÅþÜäÞ┐ÉÞíîµòêþÄçÕÆúþí«µÇºÒÇéµ£¼µûçÕ░åõ╗ĵÀ▒ÕàѵÁàÕç║þÜäÞºÆÕ║ª´╝îÕ▒òþñ║õ║åµîçÚÆêþÜäÕ»åþáü´╝îõ╗ïþ╗ì C++ õ©¡þÜäÕåàÕ¡ÿÕ»╣Ú¢ÉÞºäÕêÖþÜ䵪éÕ┐ÁÒÇüõ¢£þö¿ÒÇüÕ«×þÄ░ÕÆîþ«ùµ│òÒÇé µªéÕ┐Á´╝Ü ÕåàÕ¡ÿÕ»╣ڢɵÿ»µîç...

ÕÉîµù´╝îµêæõ╗¼Ú£ÇÞªüµ│¿µäÅþ╗ôµ×äõ¢ôþÜäÕåàÕ¡ÿÕ»╣Ú¢ÉÚù«Úóÿ´╝îÕøáõ©║þ╝ûÞ»æÕÖ¿ÕÅ»Þâ¢õ╝ÜÕ£¿þ╗ôµ×äõ¢ôµêÉÕæÿõ╣ïÚù┤µÅÆÕàÑÚóØÕñûþÜäÕ¡ùÞèéõ╗Ñõ┐ØÞ»üµò░µì«Þ«┐Úù«þÜäµòêþÄçÒÇéÞ┐ÖÕÅ»Þâ¢õ╝ÜÕ¢▒ÕôìÕê░þ╗ôµ×äõ¢ôþÜäÕ«×ÚÖàÕñºÕ░Å´╝îÕÅ»õ╗ÑÚÇÜÞ┐ç sizeof µôìõ¢£þ¼ªµØÑÞÄÀÕÅûþ╗ôµ×äõ¢ôµëÇÕìáþÜäÕ¡ùÞèéµò░ÒÇé õ¥ïÕªé´╝îµêæõ╗¼...

Õ¡ùÞèéÕ»╣ڢɵÿ»µîçÕ£¿Þ«íþ«ùµ£║ÕåàÕ¡ÿõ©¡´╝îþ╗ôµ×äõ¢ôþÜäµêÉÕæÿÕÅÿÚçŵîëþàºõ©ÇÕ«ÜþÜäÞºäÕêÖÞ┐øÞíîµÄÆÕêù´╝îõ╗Ñõ¥┐µÅÉÚ½ÿÕ¡ÿÕÅûµòêþÄçÕÆîÚÿ▓µ¡óÚöÖÞ»»ÒÇéõ©ìÕÉîþÜäþí¼õ╗ÂÕ╣│ÕÅ░Õ»╣Õ¡ÿÕé¿þ®║Úù┤þÜäÕñäþÉåµû╣Õ╝Åõ©ìÕÉî´╝îõ©Çõ║øÕ╣│ÕÅ░Õ»╣µƒÉõ║øþë╣Õ«Üþ▒╗Õ×ïþÜäµò░µì«ÕŬÞâ¢õ╗ĵƒÉõ║øþë╣Õ«ÜÕ£░ÕØÇÕ╝ÇÕºïÕ¡ÿÕÅûÒÇéÕªéµ×£õ©ì...

Þ┐Öõ©¬õ¥ïÕ¡Éõ©¡´╝îµêÉÕæÿÕÅÿÚçÅþÜäÚí║Õ║ŵö╣ÕÅÿÕ»╝Þç┤õ║åõ©ìÕÉîþÜäÕåàÕ¡ÿÕ»╣Ú¢ÉÕÆîÕí½ÕààÕ¡ùÞèé´╝îõ╗ÄÞÇîÕ¢▒Õôìõ║åþ╗ôµ×äõ¢ôþÜäµÇ╗ÕñºÕ░ÅÒÇé - sizeof(struct A) = 24; sizeof(struct B) = 48ÒÇéÞ┐ÖÚçîµÂëÕÅèÕê░þ╗ôµ×äõ¢ôÕåàÚ⿵ò░þ╗äÕÆîµêÉÕæÿþÜäþ╗äÕÉê´╝îÕ▒òþñ║õ║åµø┤õ©║ÕñìµØéþÜäÕåàÕ¡ÿÕ»╣Ú¢É...