1.重要属性:

存储数据的数组 transient Entry[] table; 默认容量 static final int DEFAULT_INITIAL_CAPACITY = 16; 最大容量 static final int MAXIMUM_CAPACITY = 1 << 30; 默认加载因子,加载因子是一个比例,当HashMap的数据大小>=容量*加载因子时,HashMap会将容量扩容 static final float DEFAULT_LOAD_FACTOR = 0.75f; 当实际数据大小超过threshold时,HashMap会将容量扩容,threshold=容量*加载因子 int threshold; //当存放量大于这个值的时候,就需要将table进行扩张,新建一个两倍大的数组,并将老的元素移过去 加载因子 final float loadFactor;

transient Entry[] table;

static class Entry<K,V> implements Map.Entry<K,V> {

final K key;

V value;

Entry<K,V> next;

final int hash;

Entry(int h, K k, V v, Entry<K,V> n) {

value = v;

next = n;

key = k;

hash = h;

}

}

看到next了吗?next就是为了哈希冲突而存在的。比如通过哈希运算,一个新元素应该在数组的第10个位置,但是第10个位置已经有Entry,那么好吧,将新加的元素也放到第10个位置,将第10个位置的原有Entry赋值给当前新加的 Entry的next属性。

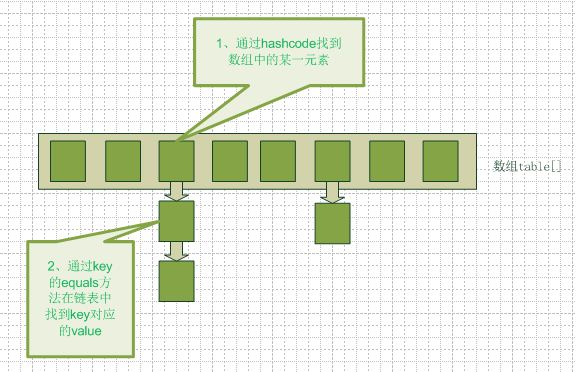

table数组中存放的是单向链表Entry,链表是为了解决哈希冲突的.

重要方法:

public V get(Object key) 当key为null时,调用private V getForNullKey(),从table[0]取出Entry得到对应key==null的value。如果key不为null,则根据hash码快速跳到数组的某个位置,只对很少的元素进行比较,这就是HashMap速度很快的原因。 void resize(int newCapacity) 根据指定的newCapacity值调整table的大小 void transfer(Entry[] newTable) 将当前table中的内容转移到新的newTable中

addEntry(int hash, K key, V value, int bucketIndex) 添加新元素,重点所在

入口:

public HashMap() {

this.loadFactor = DEFAULT_LOAD_FACTOR;

threshold = (int)(DEFAULT_INITIAL_CAPACITY * DEFAULT_LOAD_FACTOR);

table = new Entry[DEFAULT_INITIAL_CAPACITY];

init();

}

public HashMap(int initialCapacity, float loadFactor) {

if (initialCapacity < 0)

throw new IllegalArgumentException("Illegal initial capacity: " +

initialCapacity);

if (initialCapacity > MAXIMUM_CAPACITY)

initialCapacity = MAXIMUM_CAPACITY;

if (loadFactor <= 0 || Float.isNaN(loadFactor))

throw new IllegalArgumentException("Illegal load factor: " +

loadFactor);

// Find a power of 2 >= initialCapacity

int capacity = 1;

while (capacity < initialCapacity)

capacity <<= 1;

this.loadFactor = loadFactor;

threshold = (int)(capacity * loadFactor);

table = new Entry[capacity];

init();

}

public V put(K key, V value) {

if (key == null)

return putForNullKey(value);

int hash = hash(key.hashCode());

int i = indexFor(hash, table.length);

for (Entry<K,V> e = table[i]; e != null; e = e.next) {

Object k;

if (e.hash == hash && ((k = e.key) == key || key.equals(k))) {

V oldValue = e.value;

e.value = value;

e.recordAccess(this);

return oldValue;

}

}

modCount++;

addEntry(hash, key, value, i);

return null;

}

public V get(Object key) {

if (key == null)

return getForNullKey();

int hash = hash(key.hashCode());

for (Entry<K,V> e = table[indexFor(hash, table.length)];

e != null;

e = e.next) {

Object k;

if (e.hash == hash && ((k = e.key) == key || key.equals(k)))

return e.value;

}

return null;

}

第一次Put时,Table中元素都为null,所以不会进去for循环!

当我们往hashmap中put元素的时候,先根据key的hash值得到这个元素在数组中的位置(即下标),然后就可以把这个元素放到对应的位置中了。如果这个元素所在的位子上已经存放有其他元素了,那么在同一个位子上的元素将以链表的形式存放,新加入的放在链头,最先加入的放在链尾。从hashmap 中get元素时,首先计算key的hashcode,找到数组中对应位置的某一元素,然后通过key的equals方法在对应位置的链表中找到需要的元素。

hashMap的resize:

当hashmap中的元素越来越多的时候,碰撞的几率也就越来越高(因为数组的长度是固定的),所以为了提高查询的效率,就要对hashmap的数组进行扩容,数组扩容这个操作也会出现在ArrayList中,所以这是一个通用的操作,很多人对它的性能表示过怀疑,不过想想我们的“均摊”原理,就释然了,而在hashmap数组扩容之后,最消耗性能的点就出现了:原数组中的数据必须重新计算其在新数组中的位置,并放进去,这就是resize。

那么hashmap什么时候进行扩容呢?当hashmap中的元素个数超过数组大小*loadFactor时,就会进行数组扩容,loadFactor的默认值为0.75,也就是说,默认情况下,数组大小为16,那么当hashmap中元素个数超过16*0.75=12的时候,就把数组的大小扩展为 2*16=32,即扩大一倍,然后重新计算每个元素在数组中的位置,而这是一个非常消耗性能的操作,所以如果我们已经预知hashmap中元素的个数,那么预设元素的个数能够有效的提高hashmap的性能。比如说,我们有1000个元素new HashMap(1000), 但是理论上来讲new HashMap(1024)更合适,不过上面annegu已经说过,即使是1000,hashmap也自动会将其设置为1024。 但是new HashMap(1024)还不是更合适的,因为0.75*1000 < 1000, 也就是说为了让0.75 * size > 1000, 我们必须这样new HashMap(2048)才最合适,既考虑了&的问题,也避免了resize的问题。

2. 在这之前,先介绍一下负载因子和容量的属性。大家都知道其实一个 HashMap 的实际容量就 因子*容量,其默认值是 16×0.75=12; 这个很重要,对效率很一定影响!当存入HashMap的对象超过这个容量时,HashMap 就会重新构造存取表。这就是一个大问题,我后面慢慢介绍,反正,如果你已经知道你大概要存放多少个对象,最好设为该实际容量的能接受的数字。

两个关键的方法,put和get:

先有这样一个概念,HashMap是声明了 Map,Cloneable, Serializable 接口,和继承了 AbstractMap 类,里面的 Iterator 其实主要都是其内部类HashIterator 和其他几个 iterator 类实现,当然还有一个很重要的继承了Map.Entry 的 Entry 内部类,由于大家都有源代码,大家有兴趣可以看看这部分,我主要想说明的是 Entry 内部类。它包含了hash,value,key 和next 这四个属性,很重要。put的源码如下

public Object put(Object key, Object value) {

Object k = maskNull(key);

这个就是判断键值是否为空,并不很深奥,其实如果为空,它会返回一个static Object 作为键值,这就是为什么HashMap允许空键值的原因。

int hash = hash(k);

int i = indexFor(hash, table.length);

这连续的两步就是 HashMap 最牛的地方!研究完我都汗颜了,其中 hash 就是通过 key 这个Object的 hashcode 进行 hash,然后通过 indexFor 获得在Object table的索引值。

table???不要惊讶,其实HashMap也神不到哪里去,它就是用 table 来放的。最牛的就是用 hash 能正确的返回索引。

不知道大家有没有留意 put 其实是一个有返回的方法,它会把相同键值的 put 覆盖掉并返回旧的值!如下方法彻底说明了 HashMap 的结构,其实就是一个表加上在相应位置的Entry的链表:

for (Entry e = table[i]; e != null; e = e.next) {

if (e.hash == hash && eq(k, e.key)) {

Object oldvalue = e.value;

e.value = value; //把新的值赋予给对应键值。

e.recordAccess(this); //空方法,留待实现

return oldvalue; //返回相同键值的对应的旧的值。

}

}

modCount++; //结构性更改的次数

addEntry(hash, k, value, i); //添加新元素,关键所在!

return null; //没有相同的键值返回

}

我们把关键的方法拿出来分析:

void addEntry(int hash, K key, V value, int bucketIndex) {

Entry<K,V> e = table[bucketIndex];

table[bucketIndex] = new Entry<K,V>(hash, key, value, e);

if (size++ >= threshold)

resize(2 * table.length);

}

/**

* 新建数组进行扩容

*/

void resize(int newCapacity) {

Entry[] oldTable = table;

int oldCapacity = oldTable.length;

if (oldCapacity == MAXIMUM_CAPACITY) {

threshold = Integer.MAX_VALUE;

return;

}

Entry[] newTable = new Entry[newCapacity];

transfer(newTable);

table = newTable;

threshold = (int)(newCapacity * loadFactor);

}

/**

* Transfers all entries from current table to newTable.

*/

void transfer(Entry[] newTable) {

Entry[] src = table;

int newCapacity = newTable.length;

for (int j = 0; j < src.length; j++) {

Entry<K,V> e = src[j];

if (e != null) {

src[j] = null;

do {

Entry<K,V> next = e.next;

int i = indexFor(e.hash, newCapacity);

e.next = newTable[i];

newTable[i] = e;

e = next;

} while (e != null);

}

}

}

多个线程时,可能多个线程都在这扩容,这样就会导致新建了两个扩容后的数组。

因为 hash 的算法有可能令不同的键值有相同的hash码并有相同的table索引,如:key=“33”和key=Object g的hash都是-8901334,那它经过indexfor之后的索引一定都为i,这样在new的时候这个Entry的next就会指向这个原本的 table[i],再有下一个也如此,形成一个链表,和put的循环对定e.next获得旧的值。到这里,HashMap的结构,大家也十分明白了吧?

if (size++ >= threshold) //这个threshold就是能实际容纳的量

resize(2 * table.length); //超出这个容量就会将Object table重构

所谓的重构也不神,就是建一个两倍大的table(我在别的论坛上看到有人说是两倍加1,把我骗了),然后再一个个indexfor进去!注意!!这就是效率!!如果你能让你的HashMap不需要重构那么多次,效率会大大提高!

说到这里也差不多了,get比put简单得多,大家,了解put,get也差不了多少了。对于collections我是认为,它是适合广泛的,当不完全适合特有的,如果大家的程序需要特殊的用途,自己写吧,其实很简单。(作者是这样跟我说的,他还建议我用LinkedHashMap,我看了源码以后发现,LinkHashMap其实就是继承HashMap的,然后override相应的方法,有兴趣的同人,自己looklook)建个 Object table,写相应的算法,就ok啦。

举个例子吧,像 Vector,list 啊什么的其实都很简单,最多就多了的同步的声明,其实如果要实现像Vector那种,插入,删除不多的,可以用一个Object table来实现,按索引存取,添加等。

如果插入,删除比较多的,可以建两个Object table,然后每个元素用含有next结构的,一个table存,如果要插入到i,但是i已经有元素,用next连起来,然后size++,并在另一个table记录其位置。

来源:http://blog.csdn.net/autoinspired/article/details/2074916

参考:http://www.iteye.com/topic/754887

http://blog.csdn.net/HEYUTAO007/article/details/6206153

需要注意的问题:

1、Entry里有四个元素,key/value/next/hash,注意不要漏了hash。

2、接口内部可以定义接口,如Map接口内部就定义了Entry接口

HashMap 内部类Entry 实现了 Map接口里的Entry接口

static class Entry<K,V> implements Map.Entry<K,V> {

final K key;

V value;

Entry<K,V> next;

final int hash;

}

3、Entry类里的setValue设置新值成功后,返回老值

public final V setValue(V newValue) {

V oldValue = value;

value = newValue;

return oldValue;

}

4、赋值时,若当前位置存在时,是将新值放在链表头部,老值放在next中。

- Entry<K,V> e = table[bucketIndex];

- table[bucketIndex] = new Entry<K,V>(hash, key, value, e);

5、扩容后,同一位置上的链表倒序排列了。

/**

* Transfers all entries from current table to newTable.

*/

void transfer(Entry[] newTable) {

Entry[] src = table;

int newCapacity = newTable.length;

for (int j = 0; j < src.length; j++) {

Entry<K,V> e = src[j];

if (e != null) {

src[j] = null;

do {

Entry<K,V> next = e.next;

int i = indexFor(e.hash, newCapacity);

e.next = newTable[i];

//最后遍历的放在链表头

newTable[i] = e;

e = next;

} while (e != null);

}

}

}

6、加载因子为什么选择0.75 ??

加载因子需要在时间和空间成本上寻求一种折衷。加载因子过高,例如为1,虽然减少了空间开销,提高了空间利用率,但同时也增加了查询时间成本;加载因子过低,例如0.5,虽然可以减少查询时间成本,但是空间利用率很低,同时提高了rehash操作的次数。在设置初始容量时应该考虑到映射中所需的条目数及其加载因子,以便最大限度地减少rehash操作次数,所以,一般在使用HashMap时建议根据预估值设置初始容量,减少扩容操作。

选择0.75作为默认的加载因子,完全是时间和空间成本上寻求的一种折衷选择,至于为什么不选择0.5或0.8,笔者没有找到官方的直接说明,在HashMap的源码注释中也只是说是一种折中的选择。

7、HashMap在JDK1.8之前和之后的区别?

在JDK1.8以前版本中,HashMap的实现是数组+链表,它的缺点是即使哈希函数选择的再好,也很难达到元素百分百均匀分布,而且当HashMap中有大量元素都存到同一个桶中时,这个桶会有一个很长的链表,此时遍历的时间复杂度就是O(n),当然这是最糟糕的情况。

在JDK1.8及以后的版本中引入了红黑树结构,HashMap的实现就变成了数组+链表或数组+红黑树。

添加元素时,若桶中链表个数超过8,链表会转换成红黑树;删除元素、扩容时,若桶中结构为红黑树并且树中元素个数较少时会进行修剪或直接还原成链表结构,以提高后续操作性能;遍历、查找时,由于使用红黑树结构,红黑树遍历的时间复杂度为 O(logn),所以性能得到提升。

若桶中链表元素个数大于等于8时,链表转换成树结构;若桶中链表元素个数小于等于6时,树结构还原成链表。因为红黑树的平均查找长度是log(n),长度为8的时候,平均查找长度为3,如果继续使用链表,平均查找长度为8/2=4,这才有转换为树的必要。链表长度如果是小于等于6,6/2=3,虽然速度也很快的,但是转化为树结构和生成树的时间并不会太短。

还有选择6和8,中间有个差值7可以有效防止链表和树频繁转换。假设一下,如果设计成链表个数超过8则链表转换成树结构,链表个数小于8则树结构转换成链表,如果一个HashMap不停的插入、删除元素,链表个数在8左右徘徊,就会频繁的发生树转链表、链表转树,效率会很低。

Map:

数组 Entry[] table;

Map里使用的是一个Entry数组,而Entry又是一个单向链表

按索引找到位置后,遍历链表

int hash = hash(key.hashCode());

int i = indexFor(hash, table.length);

for (Entry<K,V> e = table[i]; e != null; e = e.next)

Map 里覆盖值时会返回老值

public V put(K key, V value) {

if (key == null)

return putForNullKey(value);

int hash = hash(key.hashCode());

int i = indexFor(hash, table.length);

for (Entry<K,V> e = table[i]; e != null; e = e.next) {

Object k;

if (e.hash == hash && ((k = e.key) == key || key.equals(k))) {

V oldValue = e.value;

e.value = value;

e.recordAccess(this);

//返回

return oldValue;

}

}

modCount++;

addEntry(hash, key, value, i);

return null;

}

扩容的阀值容量 = 容量 * 加载因子:

threshold = (int)(capacity * loadFactor);

添加后容量超过阀值时,就会扩容,然后重新计算各元素位置。默认2倍扩容

所以为减少碰撞,当已知道元素大概个数时,可以设置默认容量,如:

public HashMap(int initialCapacity) {

this(initialCapacity, DEFAULT_LOAD_FACTOR);

}

如1000个元素,

1000 < 0.75 * capacity;

=> 3 * capacity > 4000

=> capacity > 1333

因为容量默认为2的幂,所以应该设置为2048

附源码:

/*

* @(#)HashMap.java 1.73 07/03/13

*

* Copyright 2006 Sun Microsystems, Inc. All rights reserved.

* SUN PROPRIETARY/CONFIDENTIAL. Use is subject to license terms.

*/

package java.util;

import java.io.*;

public class HashMap<K,V>

extends AbstractMap<K,V>

implements Map<K,V>, Cloneable, Serializable

{

/**

* The default initial capacity - MUST be a power of two.

*/

static final int DEFAULT_INITIAL_CAPACITY = 16;

/**

* The maximum capacity, used if a higher value is implicitly specified

* by either of the constructors with arguments.

* MUST be a power of two <= 1<<30.

*/

static final int MAXIMUM_CAPACITY = 1 << 30;

/**

* The load factor used when none specified in constructor.

*/

static final float DEFAULT_LOAD_FACTOR = 0.75f;

/**

* The table, resized as necessary. Length MUST Always be a power of two.

*/

transient Entry[] table;

/**

* The number of key-value mappings contained in this map.

*/

transient int size;

/**

* The next size value at which to resize (capacity * load factor).

* @serial

*/

int threshold;

/**

* The load factor for the hash table.

*

* @serial

*/

final float loadFactor;

/**

* The number of times this HashMap has been structurally modified

* Structural modifications are those that change the number of mappings in

* the HashMap or otherwise modify its internal structure (e.g.,

* rehash). This field is used to make iterators on Collection-views of

* the HashMap fail-fast. (See ConcurrentModificationException).

*/

transient volatile int modCount;

/**

* Constructs an empty <tt>HashMap</tt> with the specified initial

* capacity and load factor.

*

* @param initialCapacity the initial capacity

* @param loadFactor the load factor

* @throws IllegalArgumentException if the initial capacity is negative

* or the load factor is nonpositive

*/

public HashMap(int initialCapacity, float loadFactor) {

if (initialCapacity < 0)

throw new IllegalArgumentException("Illegal initial capacity: " +

initialCapacity);

if (initialCapacity > MAXIMUM_CAPACITY)

initialCapacity = MAXIMUM_CAPACITY;

if (loadFactor <= 0 || Float.isNaN(loadFactor))

throw new IllegalArgumentException("Illegal load factor: " +

loadFactor);

// Find a power of 2 >= initialCapacity

int capacity = 1;

while (capacity < initialCapacity)

capacity <<= 1;

this.loadFactor = loadFactor;

threshold = (int)(capacity * loadFactor);

table = new Entry[capacity];

init();

}

/**

* Constructs an empty <tt>HashMap</tt> with the specified initial

* capacity and the default load factor (0.75).

*

* @param initialCapacity the initial capacity.

* @throws IllegalArgumentException if the initial capacity is negative.

*/

public HashMap(int initialCapacity) {

this(initialCapacity, DEFAULT_LOAD_FACTOR);

}

/**

* Constructs an empty <tt>HashMap</tt> with the default initial capacity

* (16) and the default load factor (0.75).

*/

public HashMap() {

this.loadFactor = DEFAULT_LOAD_FACTOR;

threshold = (int)(DEFAULT_INITIAL_CAPACITY * DEFAULT_LOAD_FACTOR);

table = new Entry[DEFAULT_INITIAL_CAPACITY];

init();

}

/**

* Constructs a new <tt>HashMap</tt> with the same mappings as the

* specified <tt>Map</tt>. The <tt>HashMap</tt> is created with

* default load factor (0.75) and an initial capacity sufficient to

* hold the mappings in the specified <tt>Map</tt>.

*

* @param m the map whose mappings are to be placed in this map

* @throws NullPointerException if the specified map is null

*/

public HashMap(Map<? extends K, ? extends V> m) {

this(Math.max((int) (m.size() / DEFAULT_LOAD_FACTOR) + 1,

DEFAULT_INITIAL_CAPACITY), DEFAULT_LOAD_FACTOR);

putAllForCreate(m);

}

// internal utilities

/**

* Initialization hook for subclasses. This method is called

* in all constructors and pseudo-constructors (clone, readObject)

* after HashMap has been initialized but before any entries have

* been inserted. (In the absence of this method, readObject would

* require explicit knowledge of subclasses.)

*/

void init() {

}

/**

* Applies a supplemental hash function to a given hashCode, which

* defends against poor quality hash functions. This is critical

* because HashMap uses power-of-two length hash tables, that

* otherwise encounter collisions for hashCodes that do not differ

* in lower bits. Note: Null keys always map to hash 0, thus index 0.

*/

static int hash(int h) {

// This function ensures that hashCodes that differ only by

// constant multiples at each bit position have a bounded

// number of collisions (approximately 8 at default load factor).

h ^= (h >>> 20) ^ (h >>> 12);

return h ^ (h >>> 7) ^ (h >>> 4);

}

/**

* Returns index for hash code h.

*/

static int indexFor(int h, int length) {

return h & (length-1);

}

/**

* Returns the number of key-value mappings in this map.

*

* @return the number of key-value mappings in this map

*/

public int size() {

return size;

}

/**

* Returns <tt>true</tt> if this map contains no key-value mappings.

*

* @return <tt>true</tt> if this map contains no key-value mappings

*/

public boolean isEmpty() {

return size == 0;

}

/**

* Returns the value to which the specified key is mapped,

* or {@code null} if this map contains no mapping for the key.

*

* <p>More formally, if this map contains a mapping from a key

* {@code k} to a value {@code v} such that {@code (key==null ? k==null :

* key.equals(k))}, then this method returns {@code v}; otherwise

* it returns {@code null}. (There can be at most one such mapping.)

*

* <p>A return value of {@code null} does not <i>necessarily</i>

* indicate that the map contains no mapping for the key; it's also

* possible that the map explicitly maps the key to {@code null}.

* The {@link #containsKey containsKey} operation may be used to

* distinguish these two cases.

*

* @see #put(Object, Object)

*/

public V get(Object key) {

if (key == null)

return getForNullKey();

int hash = hash(key.hashCode());

for (Entry<K,V> e = table[indexFor(hash, table.length)];

e != null;

e = e.next) {

Object k;

if (e.hash == hash && ((k = e.key) == key || key.equals(k)))

return e.value;

}

return null;

}

/**

* Offloaded version of get() to look up null keys. Null keys map

* to index 0. This null case is split out into separate methods

* for the sake of performance in the two most commonly used

* operations (get and put), but incorporated with conditionals in

* others.

*/

private V getForNullKey() {

for (Entry<K,V> e = table[0]; e != null; e = e.next) {

if (e.key == null)

return e.value;

}

return null;

}

/**

* Returns <tt>true</tt> if this map contains a mapping for the

* specified key.

*

* @param key The key whose presence in this map is to be tested

* @return <tt>true</tt> if this map contains a mapping for the specified

* key.

*/

public boolean containsKey(Object key) {

return getEntry(key) != null;

}

/**

* Returns the entry associated with the specified key in the

* HashMap. Returns null if the HashMap contains no mapping

* for the key.

*/

final Entry<K,V> getEntry(Object key) {

int hash = (key == null) ? 0 : hash(key.hashCode());

for (Entry<K,V> e = table[indexFor(hash, table.length)];

e != null;

e = e.next) {

Object k;

if (e.hash == hash &&

((k = e.key) == key || (key != null && key.equals(k))))

return e;

}

return null;

}

/**

* Associates the specified value with the specified key in this map.

* If the map previously contained a mapping for the key, the old

* value is replaced.

*

* @param key key with which the specified value is to be associated

* @param value value to be associated with the specified key

* @return the previous value associated with <tt>key</tt>, or

* <tt>null</tt> if there was no mapping for <tt>key</tt>.

* (A <tt>null</tt> return can also indicate that the map

* previously associated <tt>null</tt> with <tt>key</tt>.)

*/

public V put(K key, V value) {

if (key == null)

return putForNullKey(value);

int hash = hash(key.hashCode());

int i = indexFor(hash, table.length);

for (Entry<K,V> e = table[i]; e != null; e = e.next) {

Object k;

if (e.hash == hash && ((k = e.key) == key || key.equals(k))) {

V oldValue = e.value;

e.value = value;

e.recordAccess(this);

return oldValue;

}

}

modCount++;

addEntry(hash, key, value, i);

return null;

}

/**

* Offloaded version of put for null keys

*/

private V putForNullKey(V value) {

for (Entry<K,V> e = table[0]; e != null; e = e.next) {

if (e.key == null) {

V oldValue = e.value;

e.value = value;

e.recordAccess(this);

return oldValue;

}

}

modCount++;

addEntry(0, null, value, 0);

return null;

}

/**

* This method is used instead of put by constructors and

* pseudoconstructors (clone, readObject). It does not resize the table,

* check for comodification, etc. It calls createEntry rather than

* addEntry.

*/

private void putForCreate(K key, V value) {

int hash = (key == null) ? 0 : hash(key.hashCode());

int i = indexFor(hash, table.length);

/**

* Look for preexisting entry for key. This will never happen for

* clone or deserialize. It will only happen for construction if the

* input Map is a sorted map whose ordering is inconsistent w/ equals.

*/

for (Entry<K,V> e = table[i]; e != null; e = e.next) {

Object k;

if (e.hash == hash &&

((k = e.key) == key || (key != null && key.equals(k)))) {

e.value = value;

return;

}

}

createEntry(hash, key, value, i);

}

private void putAllForCreate(Map<? extends K, ? extends V> m) {

for (Iterator<? extends Map.Entry<? extends K, ? extends V>> i = m.entrySet().iterator(); i.hasNext(); ) {

Map.Entry<? extends K, ? extends V> e = i.next();

putForCreate(e.getKey(), e.getValue());

}

}

/**

* Rehashes the contents of this map into a new array with a

* larger capacity. This method is called automatically when the

* number of keys in this map reaches its threshold.

*

* If current capacity is MAXIMUM_CAPACITY, this method does not

* resize the map, but sets threshold to Integer.MAX_VALUE.

* This has the effect of preventing future calls.

*

* @param newCapacity the new capacity, MUST be a power of two;

* must be greater than current capacity unless current

* capacity is MAXIMUM_CAPACITY (in which case value

* is irrelevant).

*/

void resize(int newCapacity) {

Entry[] oldTable = table;

int oldCapacity = oldTable.length;

if (oldCapacity == MAXIMUM_CAPACITY) {

threshold = Integer.MAX_VALUE;

return;

}

Entry[] newTable = new Entry[newCapacity];

transfer(newTable);

table = newTable;

threshold = (int)(newCapacity * loadFactor);

}

/**

* Transfers all entries from current table to newTable.

*/

void transfer(Entry[] newTable) {

Entry[] src = table;

int newCapacity = newTable.length;

for (int j = 0; j < src.length; j++) {

Entry<K,V> e = src[j];

if (e != null) {

src[j] = null;

do {

Entry<K,V> next = e.next;

int i = indexFor(e.hash, newCapacity);

e.next = newTable[i];

newTable[i] = e;

e = next;

} while (e != null);

}

}

}

/**

* Copies all of the mappings from the specified map to this map.

* These mappings will replace any mappings that this map had for

* any of the keys currently in the specified map.

*

* @param m mappings to be stored in this map

* @throws NullPointerException if the specified map is null

*/

public void putAll(Map<? extends K, ? extends V> m) {

int numKeysToBeAdded = m.size();

if (numKeysToBeAdded == 0)

return;

/*

* Expand the map if the map if the number of mappings to be added

* is greater than or equal to threshold. This is conservative; the

* obvious condition is (m.size() + size) >= threshold, but this

* condition could result in a map with twice the appropriate capacity,

* if the keys to be added overlap with the keys already in this map.

* By using the conservative calculation, we subject ourself

* to at most one extra resize.

*/

if (numKeysToBeAdded > threshold) {

int targetCapacity = (int)(numKeysToBeAdded / loadFactor + 1);

if (targetCapacity > MAXIMUM_CAPACITY)

targetCapacity = MAXIMUM_CAPACITY;

int newCapacity = table.length;

while (newCapacity < targetCapacity)

newCapacity <<= 1;

if (newCapacity > table.length)

resize(newCapacity);

}

for (Iterator<? extends Map.Entry<? extends K, ? extends V>> i = m.entrySet().iterator(); i.hasNext(); ) {

Map.Entry<? extends K, ? extends V> e = i.next();

put(e.getKey(), e.getValue());

}

}

/**

* Removes the mapping for the specified key from this map if present.

*

* @param key key whose mapping is to be removed from the map

* @return the previous value associated with <tt>key</tt>, or

* <tt>null</tt> if there was no mapping for <tt>key</tt>.

* (A <tt>null</tt> return can also indicate that the map

* previously associated <tt>null</tt> with <tt>key</tt>.)

*/

public V remove(Object key) {

Entry<K,V> e = removeEntryForKey(key);

return (e == null ? null : e.value);

}

/**

* Removes and returns the entry associated with the specified key

* in the HashMap. Returns null if the HashMap contains no mapping

* for this key.

*/

final Entry<K,V> removeEntryForKey(Object key) {

int hash = (key == null) ? 0 : hash(key.hashCode());

int i = indexFor(hash, table.length);

Entry<K,V> prev = table[i];

Entry<K,V> e = prev;

while (e != null) {

Entry<K,V> next = e.next;

Object k;

if (e.hash == hash &&

((k = e.key) == key || (key != null && key.equals(k)))) {

modCount++;

size--;

if (prev == e)

table[i] = next;

else

prev.next = next;

e.recordRemoval(this);

return e;

}

prev = e;

e = next;

}

return e;

}

/**

* Special version of remove for EntrySet.

*/

final Entry<K,V> removeMapping(Object o) {

if (!(o instanceof Map.Entry))

return null;

Map.Entry<K,V> entry = (Map.Entry<K,V>) o;

Object key = entry.getKey();

int hash = (key == null) ? 0 : hash(key.hashCode());

int i = indexFor(hash, table.length);

Entry<K,V> prev = table[i];

Entry<K,V> e = prev;

while (e != null) {

Entry<K,V> next = e.next;

if (e.hash == hash && e.equals(entry)) {

modCount++;

size--;

if (prev == e)

table[i] = next;

else

prev.next = next;

e.recordRemoval(this);

return e;

}

prev = e;

e = next;

}

return e;

}

/**

* Removes all of the mappings from this map.

* The map will be empty after this call returns.

*/

public void clear() {

modCount++;

Entry[] tab = table;

for (int i = 0; i < tab.length; i++)

tab[i] = null;

size = 0;

}

/**

* Returns <tt>true</tt> if this map maps one or more keys to the

* specified value.

*

* @param value value whose presence in this map is to be tested

* @return <tt>true</tt> if this map maps one or more keys to the

* specified value

*/

public boolean containsValue(Object value) {

if (value == null)

return containsNullValue();

Entry[] tab = table;

for (int i = 0; i < tab.length ; i++)

for (Entry e = tab[i] ; e != null ; e = e.next)

if (value.equals(e.value))

return true;

return false;

}

/**

* Special-case code for containsValue with null argument

*/

private boolean containsNullValue() {

Entry[] tab = table;

for (int i = 0; i < tab.length ; i++)

for (Entry e = tab[i] ; e != null ; e = e.next)

if (e.value == null)

return true;

return false;

}

/**

* Returns a shallow copy of this <tt>HashMap</tt> instance: the keys and

* values themselves are not cloned.

*

* @return a shallow copy of this map

*/

public Object clone() {

HashMap<K,V> result = null;

try {

result = (HashMap<K,V>)super.clone();

} catch (CloneNotSupportedException e) {

// assert false;

}

result.table = new Entry[table.length];

result.entrySet = null;

result.modCount = 0;

result.size = 0;

result.init();

result.putAllForCreate(this);

return result;

}

static class Entry<K,V> implements Map.Entry<K,V> {

final K key;

V value;

Entry<K,V> next;

final int hash;

/**

* Creates new entry.

*/

Entry(int h, K k, V v, Entry<K,V> n) {

value = v;

next = n;

key = k;

hash = h;

}

public final K getKey() {

return key;

}

public final V getValue() {

return value;

}

public final V setValue(V newValue) {

V oldValue = value;

value = newValue;

return oldValue;

}

public final boolean equals(Object o) {

if (!(o instanceof Map.Entry))

return false;

Map.Entry e = (Map.Entry)o;

Object k1 = getKey();

Object k2 = e.getKey();

if (k1 == k2 || (k1 != null && k1.equals(k2))) {

Object v1 = getValue();

Object v2 = e.getValue();

if (v1 == v2 || (v1 != null && v1.equals(v2)))

return true;

}

return false;

}

public final int hashCode() {

return (key==null ? 0 : key.hashCode()) ^

(value==null ? 0 : value.hashCode());

}

public final String toString() {

return getKey() + "=" + getValue();

}

/**

* This method is invoked whenever the value in an entry is

* overwritten by an invocation of put(k,v) for a key k that's already

* in the HashMap.

*/

void recordAccess(HashMap<K,V> m) {

}

/**

* This method is invoked whenever the entry is

* removed from the table.

*/

void recordRemoval(HashMap<K,V> m) {

}

}

/**

* Adds a new entry with the specified key, value and hash code to

* the specified bucket. It is the responsibility of this

* method to resize the table if appropriate.

*

* Subclass overrides this to alter the behavior of put method.

*/

void addEntry(int hash, K key, V value, int bucketIndex) {

Entry<K,V> e = table[bucketIndex];

table[bucketIndex] = new Entry<K,V>(hash, key, value, e);

if (size++ >= threshold)

resize(2 * table.length);

}

/**

* Like addEntry except that this version is used when creating entries

* as part of Map construction or "pseudo-construction" (cloning,

* deserialization). This version needn't worry about resizing the table.

*

* Subclass overrides this to alter the behavior of HashMap(Map),

* clone, and readObject.

*/

void createEntry(int hash, K key, V value, int bucketIndex) {

Entry<K,V> e = table[bucketIndex];

table[bucketIndex] = new Entry<K,V>(hash, key, value, e);

size++;

}

private abstract class HashIterator<E> implements Iterator<E> {

Entry<K,V> next; // next entry to return

int expectedModCount; // For fast-fail

int index; // current slot

Entry<K,V> current; // current entry

HashIterator() {

expectedModCount = modCount;

if (size > 0) { // advance to first entry

Entry[] t = table;

while (index < t.length && (next = t[index++]) == null)

;

}

}

public final boolean hasNext() {

return next != null;

}

final Entry<K,V> nextEntry() {

if (modCount != expectedModCount)

throw new ConcurrentModificationException();

Entry<K,V> e = next;

if (e == null)

throw new NoSuchElementException();

if ((next = e.next) == null) {

Entry[] t = table;

while (index < t.length && (next = t[index++]) == null)

;

}

current = e;

return e;

}

public void remove() {

if (current == null)

throw new IllegalStateException();

if (modCount != expectedModCount)

throw new ConcurrentModificationException();

Object k = current.key;

current = null;

HashMap.this.removeEntryForKey(k);

expectedModCount = modCount;

}

}

private final class ValueIterator extends HashIterator<V> {

public V next() {

return nextEntry().value;

}

}

private final class KeyIterator extends HashIterator<K> {

public K next() {

return nextEntry().getKey();

}

}

private final class EntryIterator extends HashIterator<Map.Entry<K,V>> {

public Map.Entry<K,V> next() {

return nextEntry();

}

}

// Subclass overrides these to alter behavior of views' iterator() method

Iterator<K> newKeyIterator() {

return new KeyIterator();

}

Iterator<V> newValueIterator() {

return new ValueIterator();

}

Iterator<Map.Entry<K,V>> newEntryIterator() {

return new EntryIterator();

}

// Views

private transient Set<Map.Entry<K,V>> entrySet = null;

/**

* Returns a {@link Set} view of the keys contained in this map.

* The set is backed by the map, so changes to the map are

* reflected in the set, and vice-versa. If the map is modified

* while an iteration over the set is in progress (except through

* the iterator's own <tt>remove</tt> operation), the results of

* the iteration are undefined. The set supports element removal,

* which removes the corresponding mapping from the map, via the

* <tt>Iterator.remove</tt>, <tt>Set.remove</tt>,

* <tt>removeAll</tt>, <tt>retainAll</tt>, and <tt>clear</tt>

* operations. It does not support the <tt>add</tt> or <tt>addAll</tt>

* operations.

*/

public Set<K> keySet() {

Set<K> ks = keySet;

return (ks != null ? ks : (keySet = new KeySet()));

}

private final class KeySet extends AbstractSet<K> {

public Iterator<K> iterator() {

return newKeyIterator();

}

public int size() {

return size;

}

public boolean contains(Object o) {

return containsKey(o);

}

public boolean remove(Object o) {

return HashMap.this.removeEntryForKey(o) != null;

}

public void clear() {

HashMap.this.clear();

}

}

/**

* Returns a {@link Collection} view of the values contained in this map.

* The collection is backed by the map, so changes to the map are

* reflected in the collection, and vice-versa. If the map is

* modified while an iteration over the collection is in progress

* (except through the iterator's own <tt>remove</tt> operation),

* the results of the iteration are undefined. The collection

* supports element removal, which removes the corresponding

* mapping from the map, via the <tt>Iterator.remove</tt>,

* <tt>Collection.remove</tt>, <tt>removeAll</tt>,

* <tt>retainAll</tt> and <tt>clear</tt> operations. It does not

* support the <tt>add</tt> or <tt>addAll</tt> operations.

*/

public Collection<V> values() {

Collection<V> vs = values;

return (vs != null ? vs : (values = new Values()));

}

private final class Values extends AbstractCollection<V> {

public Iterator<V> iterator() {

return newValueIterator();

}

public int size() {

return size;

}

public boolean contains(Object o) {

return containsValue(o);

}

public void clear() {

HashMap.this.clear();

}

}

/**

* Returns a {@link Set} view of the mappings contained in this map.

* The set is backed by the map, so changes to the map are

* reflected in the set, and vice-versa. If the map is modified

* while an iteration over the set is in progress (except through

* the iterator's own <tt>remove</tt> operation, or through the

* <tt>setValue</tt> operation on a map entry returned by the

* iterator) the results of the iteration are undefined. The set

* supports element removal, which removes the corresponding

* mapping from the map, via the <tt>Iterator.remove</tt>,

* <tt>Set.remove</tt>, <tt>removeAll</tt>, <tt>retainAll</tt> and

* <tt>clear</tt> operations. It does not support the

* <tt>add</tt> or <tt>addAll</tt> operations.

*

* @return a set view of the mappings contained in this map

*/

public Set<Map.Entry<K,V>> entrySet() {

return entrySet0();

}

private Set<Map.Entry<K,V>> entrySet0() {

Set<Map.Entry<K,V>> es = entrySet;

return es != null ? es : (entrySet = new EntrySet());

}

private final class EntrySet extends AbstractSet<Map.Entry<K,V>> {

public Iterator<Map.Entry<K,V>> iterator() {

return newEntryIterator();

}

public boolean contains(Object o) {

if (!(o instanceof Map.Entry))

return false;

Map.Entry<K,V> e = (Map.Entry<K,V>) o;

Entry<K,V> candidate = getEntry(e.getKey());

return candidate != null && candidate.equals(e);

}

public boolean remove(Object o) {

return removeMapping(o) != null;

}

public int size() {

return size;

}

public void clear() {

HashMap.this.clear();

}

}

/**

* Save the state of the <tt>HashMap</tt> instance to a stream (i.e.,

* serialize it).

*

* @serialData The <i>capacity</i> of the HashMap (the length of the

* bucket array) is emitted (int), followed by the

* <i>size</i> (an int, the number of key-value

* mappings), followed by the key (Object) and value (Object)

* for each key-value mapping. The key-value mappings are

* emitted in no particular order.

*/

private void writeObject(java.io.ObjectOutputStream s)

throws IOException

{

Iterator<Map.Entry<K,V>> i =

(size > 0) ? entrySet0().iterator() : null;

// Write out the threshold, loadfactor, and any hidden stuff

s.defaultWriteObject();

// Write out number of buckets

s.writeInt(table.length);

// Write out size (number of Mappings)

s.writeInt(size);

// Write out keys and values (alternating)

if (i != null) {

while (i.hasNext()) {

Map.Entry<K,V> e = i.next();

s.writeObject(e.getKey());

s.writeObject(e.getValue());

}

}

}

private static final long serialVersionUID = 362498820763181265L;

/**

* Reconstitute the <tt>HashMap</tt> instance from a stream (i.e.,

* deserialize it).

*/

private void readObject(java.io.ObjectInputStream s)

throws IOException, ClassNotFoundException

{

// Read in the threshold, loadfactor, and any hidden stuff

s.defaultReadObject();

// Read in number of buckets and allocate the bucket array;

int numBuckets = s.readInt();

table = new Entry[numBuckets];

init(); // Give subclass a chance to do its thing.

// Read in size (number of Mappings)

int size = s.readInt();

// Read the keys and values, and put the mappings in the HashMap

for (int i=0; i<size; i++) {

K key = (K) s.readObject();

V value = (V) s.readObject();

putForCreate(key, value);

}

}

// These methods are used when serializing HashSets

int capacity() { return table.length; }

float loadFactor() { return loadFactor; }

}

相关推荐

在本分析中,我们将会详细探讨HashMap在不同负载因子(loadFactor)、循环次数(loop)、哈希表长度(maptablelen)和映射长度(maplen)等条件下的行为和特性。 负载因子(loadFactor)是HashMap中的一个关键参数...

Java HashMap原理分析 Java HashMap是一种基于哈希表的数据结构,它的存储原理是通过将Key-Value对存储在一个数组中,每个数组元素是一个链表,链表中的每个元素是一个Entry对象,Entry对象包含了Key、Value和指向...

hashMap存储分析hashMap存储分析

### HashMap部分源码分析 #### 一、HashMap数据结构 HashMap是一种非常常用的数据结构,在Java中,它提供了基于键值对存储数据的功能。其内部采用了数组加链表(或红黑树)的形式来存储数据。 - **数组**:提供...

HashMap 是 Java 中最常用的集合类之一,它是基于哈希表实现的,提供了高效的数据存取功能。HashMap 的核心原理是将键(key)通过哈希函数转化为数组索引,然后将键值对(key-value pair)存储在数组的对应位置。...

HashMap死循环原因分析 HashMap是Java中常用的数据结构,但是它在多线程环境下可能会出现死循环的问题,使CPU占用率达到100%。这种情况是如何产生的呢?下面我们将从源码中一步一步地分析这种情况是如何产生的。 ...

哈希映射(HashMap)是Java编程语言中广泛使用的数据结构之一,主要提供键值对的存储和查找功能。HashMap的实现基于哈希表的概念,它通过计算对象的哈希码来快速定位数据,从而实现了O(1)的平均时间复杂度。在深入...

面试中,可能会被问及HashMap的性能优化、内存占用分析、以及在特定场景下的选择,如并发环境、内存敏感的应用等。 总结,HashMap是Java编程中的基础工具,掌握其工作原理和常见面试题,不仅能帮助我们应对面试,更...

面试中,HashMap的源码分析与实现是一个常见的考察点,因为它涉及到数据结构、并发处理和性能优化等多个核心领域。本篇文章将深入探讨HashMap的内部工作原理、关键特性以及其在实际应用中的考量因素。 HashMap基于...

用过Java的都知道,里面有个功能强大的数据结构——HashMap,它能提供键与值的对应访问。不过熟悉JS的朋友也会说,JS里面到处都是hashmap,因为每个对象都提供了map[key]的访问形式。

HashMap导致CPU100% 的分析

hashmap的原理啊思想。

在Java编程语言中,`HashMap`和`HashTable`都是实现键值对存储的数据结构,但它们之间存在一些显著的区别,这些区别主要体现在线程安全性、性能、null值处理以及一些方法特性上。以下是对这两个类的详细分析: 1. ...

通过分析源码,开发者可以深入理解哈希表的工作原理,学习如何在易语言中实现高效的数据结构,这对于提升程序性能和优化内存管理至关重要。同时,这也为自定义数据结构或实现其他哈希表相关的功能提供了基础。

比较分析Vector、ArrayList和hashtable hashmap数据结构

hashMap基本工作原理,图解分析,基础Map集合

# 基于Python的HashMap与红黑树性能分析 ## 项目简介 本项目旨在深入分析和优化基于Python实现的HashMap和红黑树的性能。通过模拟实验和数据分析,我们探讨了不同参数设置对HashMap性能的影响,并提供了优化建议。...