- 浏览: 22700107 次

- 性别:

- 来自: 杭州

-

最新评论

-

ZY199266:

配置文件还需要额外的配置ma

Android 客户端通过内置API(HttpClient) 访问 服务器(用Spring MVC 架构) 返回的json数据全过程 -

ZY199266:

我的一访问为什么是 /mavenwebdemo/WEB-I ...

Android 客户端通过内置API(HttpClient) 访问 服务器(用Spring MVC 架构) 返回的json数据全过程 -

lvgaga:

我又一个问题就是 如果像你的这种形式写。配置文件还需要额外的 ...

Android 客户端通过内置API(HttpClient) 访问 服务器(用Spring MVC 架构) 返回的json数据全过程 -

lvgaga:

我的一访问为什么是 /mavenwebdemo/WEB-I ...

Android 客户端通过内置API(HttpClient) 访问 服务器(用Spring MVC 架构) 返回的json数据全过程 -

y1210251848:

你的那个错误应该是项目所使用的目标框架不支持吧

log4net配置(web中使用log4net,把web.config放在单独的文件中)

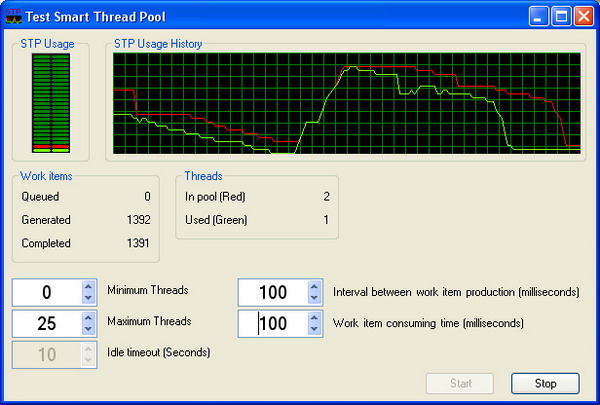

Smart Thread Pool

See the History section at the bottom for changes.

Basic usage

This is a Thread Pool; if you got here, you probably know what you need. If you want to understand the features and know how it works, keep reading the sections below. If you just want to use it, here is a quick usage snippet. See examples for advanced usage.

    // Create an instance of the Smart Thread Pool
    SmartThreadPool smartThreadPool = new SmartThreadPool();

    // Queue an action (fire and forget)
    smartThreadPool.QueueWorkItem(System.IO.File.Copy,
        @"C:\Temp\myfile.bin", @"C:\Temp\myfile.bak");

    // The action (file copy) will be done in the background by the Thread Pool

Introduction

Smart Thread Pool is a thread pool written in C#. The implementation was first based on Stephen Toub's thread pool with some extra features, but it has since gone far beyond the original. Here is a list of the thread pool features:

- The number of threads dynamically changes according to the workload on the threads in the pool.

- Work items can return a value.

- A work item can be cancelled if it hasn't been executed yet.

- The caller thread's context is used when the work item is executed (limited).

- Usage of minimum number of Win32 event handles, so the handle count of the application won't explode.

- The caller can wait for multiple or all the work items to complete.

- A work item can have a PostExecute callback, which is called as soon as the work item is completed.

- The state object that accompanies the work item can be disposed automatically.

- Work item exceptions are sent back to the caller.

- Work items have priority.

- Work items group.

- The caller can suspend the start of a thread pool and work items group.

- Threads have priority.

- Threads have initialization and termination events.

- WinCE platform is supported (limited).

- Action<T> and Func<T> generic methods are supported.

- Silverlight is supported.

- Mono is supported.

- Performance counters (Windows and internal).

- Work item timeout (passive).

Why do you need a thread pool?

"Many applications create threads that spend a great deal of time in the sleeping state, waiting for an event to occur. Other threads might enter a sleeping state only to be awakened periodically to poll for a change or update status information. Thread pooling enables you to use threads more efficiently by providing your application with a pool of worker threads that are managed by the system. One thread monitors the status of several wait operations queued to the thread pool. When a wait operation completes, a worker thread from the thread pool executes the corresponding callback function".

MSDN, April 2004, ThreadPool Class [C#].

Smart Thread Pool features

When I wrote my application, I discovered that I needed a thread pool with the following features:

- The thread pool should implement the QueueUserWorkItem() method to comply with the .NET ThreadPool.

- The thread pool should be instantiated. (No static methods.) So, the threads in the pool are used only for one purpose.

- The number of threads in the pool should be dynamic with lower and upper limits.

After I published the Smart Thread Pool here, I found out that more features were required and some features had to change. So, the following is an updated list of the implemented features:

1. The thread pool is instantiable.

2. The number of threads dynamically changes.

3. Work items return a value.

4. The caller can wait for multiple work items to complete.

5. A work item can be cancelled.

6. The caller thread's context is used when the work item is executed (limited).

7. Usage of minimum number of Win32 event handles, so the handle count of the application won't explode.

Because of features 3 and 5 (work items return a value, and a work item can be cancelled), the thread pool no longer complies with the .NET ThreadPool, and so I could add more features.

See the additional features section below for the new features added in this version.

Additional features added in December 2004

- Every work item can have a PostExecute callback. This is a method that will be called right after the work item execution has been completed.

- The user can choose to automatically dispose of the state object that accompanies the work item.

- The user can wait for the Smart Thread Pool to become idle.

- Exception handling has been changed, so if a work item throws an exception, it is re-thrown at GetResult(), rather than firing an UnhandledException event. Note that PostExecute exceptions are always ignored.

New features added in January 2006

- Work items have priority.

- The caller thread's HTTP context can be used when the work item is executed (improves 6).

- Work items group.

- The caller can create thread pools and work item groups in suspended state.

- Threads have priority.

New features added in May 2008

- Enabled the change of MaxThreads/MinThreads/Concurrency at run time.

- Improved the Cancel behavior (see section 5).

- Added callbacks for initialization and termination of threads.

- Added support for WinCE (limited).

- Added the IsIdle flag to SmartThreadPool and to IWorkItemsGroup.

- Added support for Action<T> and Func<T> (strong typed work items) (see section 3).

New features added in April 2009

- Added support for Silverlight.

- Added support for Mono.

- Added internal performance counters (for Windows CE, Silverlight, and Mono).

- Added new methods: Join, Choice, and Pipe.

New features added in December 2009

What about the .NET ThreadPool?

The Windows system provides one .NET ThreadPool for each process. The .NET ThreadPool can contain up to 25 (by default) threads per processor. It is also stated that the operations in the .NET ThreadPool should be quick to avoid suspension of the work of others who use the .NET ThreadPool. Note that several AppDomains in the same process share the same .NET ThreadPool. If you want a thread to work for a long period of time, then the .NET ThreadPool is not a good choice for you (unless you know what you are doing). Note that each asynchronous method call from the .NET Framework that begins with "Begin…" (e.g., BeginInvoke, BeginSend, BeginReceive, etc.) uses the .NET ThreadPool to run its callback. Also note that the .NET ThreadPool doesn't support calls to COM with single threaded apartment (STA), since the ThreadPool threads are MTA by design.

This thread pool doesn't comply with the requirements 1, 5, 6, 8, 9, 10, 12-25.

Note that the requirements 3 and 4 are implemented in .NET ThreadPool with delegates.

What about Stephen Toub's thread pool?

Toub's thread pool is a better choice than the .NET ThreadPool, since a thread from his pool can be used for a longer period of time without affecting asynchronous method calls. Toub's thread pool uses static methods; hence, you cannot instantiate more than one thread pool. However, this limitation applies per AppDomain rather than the whole process. The main disadvantage of Toub's thread pool compared with the .NET ThreadPool is that Toub creates all the threads in the pool at the initialization point, while the .NET ThreadPool creates threads on the fly.

This thread pool doesn't comply with the requirements 1, 2, 3, 4, 5, 6, 8-25.

The Smart Thread Pool design and features

As I mentioned before, the Smart Thread Pool is based on Toub's thread pool implementation. However, since I have expanded its features, the code is no longer similar to the original one.

Features implementation:

1. The thread pool is instantiable.

2. The number of threads dynamically changes.

3. Work items return a value (this feature is enhanced).

    The following function uses the GetResult() method, which blocks the caller until the result is available:

        private void WaitForResult1(IWorkItemResult wir)
        {
            wir.GetResult();
        }

    The following function is not recommended, because it uses a busy wait loop. You can use it if you know what you are doing:

        private void WaitForResult2(IWorkItemResult wir)
        {
            while (!wir.IsCompleted)
            {
                Thread.Sleep(100);
            }
        }

4. The caller can wait for multiple work items to complete.

5. A work item can be cancelled (this feature is enhanced).

    Work item states:

    - Queued - The work item is waiting in a queue to be executed.
    - InProgress - A thread from the pool is executing the work item.
    - Completed - The work item execution has been completed.
    - Cancelled - The work item has been cancelled.

6. The caller thread's context is used when the work item is executed (limited).

    Execution order:

    - The current thread context is captured.
    - The caller thread context is applied.
    - The work item is executed.
    - The old thread context is restored.

    Context that is passed:

    - CurrentCulture - The culture of the thread.
    - CurrentUICulture - The culture used by the resource manager to look up culture-specific resources at run time.
    - CurrentPrincipal - The current principal (for role-based security).
    - CurrentContext - The current context in which the thread is executing (used in remoting).

7. Usage of minimum number of Win32 event handles, so the handle count of the application won't explode.

8. Work item can have a PostExecute callback. This is a method that will be called right after the work item execution has been completed.

    CallToPostExecute options:

    - Never - Don't run PostExecute.
    - WhenWorkItemCanceled - Run PostExecute only when the work item has been canceled.
    - WhenWorkItemNotCanceled - Run PostExecute only when the work item has not been canceled.
    - Always - Always run PostExecute.

9. The user can choose to automatically dispose of the state object that accompanies the work item.

10. The user can wait for the Smart Thread Pool / Work Items Group to become idle.

    This feature enables the user to wait for a Smart Thread Pool or a Work Items Group to become idle. They become idle when the work items queue is empty and all the threads have completed executing all their work items.

    This is useful in case you want to run a batch of work items and then wait for all of them to complete. It saves you from handling the IWorkItemResult objects in case you just want to wait for all of the work items to complete.

    The SmartThreadPool and WorkItemsGroup classes both implement the IWorkItemsGroup interface, which defines the WaitForIdle methods.

    To take advantage of this feature, use the IWorkItemsGroup.WaitForIdle() method. It has several overloads which provide a timeout argument. The WaitForIdle() method is not static, and should be used on a SmartThreadPool instance.

    SmartThreadPool always keeps track of how many work items it has. When a new work item is queued, the number is incremented. When a thread completes a work item, the number is decremented. The total number of work items includes the work items in the queue and the work items that the threads are currently working on.

    The WaitForIdle() mechanism works with a private ManualResetEvent. When a work item is queued, the ManualResetEvent is reset (changed to non-signaled state). When the work items count becomes zero (initial state of the Smart Thread Pool), the ManualResetEvent is set (changed to signaled state). The WaitForIdle() method simply waits on this ManualResetEvent.

    See the example below.

11. Exception handling has been changed, so if a work item throws an exception, it is rethrown at GetResult(), rather than firing an UnhandledException event. Note that PostExecute exceptions are always ignored.

    After I did some reading about delegates and their implementation, I decided to change the way SmartThreadPool treats exceptions. In the previous versions, I used an event driven mechanism. Entities were registered to the SmartThreadPool.UnhandledException event, and when a work item threw an exception, this event was fired. This is the behavior of Toub's thread pool.

    .NET delegates behave differently. Instead of using an event driven mechanism, a delegate re-throws the exception of the delegated method at EndInvoke(). Similarly, the SmartThreadPool exception mechanism is changed so that exceptions are no longer fired by the UnhandledException event, but rather re-thrown when IWorkItemResult.GetResult() is called.

    Note that exceptions slow down .NET and degrade the performance. .NET works faster when no exceptions are thrown at all. For this reason, I added an output parameter to some of the GetResult() overloads, so the exception can be retrieved rather than re-thrown. The work item throws the exception anyway, so re-throwing it will be a waste of time. As a rule of thumb, it is better to use the output parameter than catch the re-thrown exception. GetResult() can be called an unlimited number of times, and it re-throws the same exception each time.

    Note that PostExecute is called as and when needed, even if the work item has thrown an exception. Of course, the PostExecute implementation should handle exceptions if it calls GetResult().

    Also note that if PostExecute throws an exception, then its exception is ignored.

    See the example below.

12. Work items have priority.

13. The caller thread's HTTP context can be used when the work item is executed.

14. Work items group.
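The idle-tracking mechanism described for the WaitForIdle feature in the list above (a work item counter plus a ManualResetEvent) can be sketched roughly as follows. This is a minimal illustration built only on the standard .NET classes, not the Smart Thread Pool source; the `IdleTracker` class and its member names are hypothetical.

```csharp
using System;
using System.Threading;

// Hypothetical helper, not part of Smart Thread Pool.
public sealed class IdleTracker : IDisposable
{
    private readonly object _lock = new object();
    // Signaled while there are no work items; the pool starts idle.
    private readonly ManualResetEvent _idle = new ManualResetEvent(true);
    private int _workItemsCount;

    // Called when a work item is queued.
    public void ItemQueued()
    {
        lock (_lock)
        {
            _workItemsCount++;
            _idle.Reset(); // non-signaled: work is pending
        }
    }

    // Called when a thread completes a work item.
    public void ItemCompleted()
    {
        lock (_lock)
        {
            if (--_workItemsCount == 0)
            {
                _idle.Set(); // signaled: nothing queued or running
            }
        }
    }

    // Returns true if the tracker became idle within the timeout.
    public bool WaitForIdle(int millisecondsTimeout)
    {
        return _idle.WaitOne(millisecondsTimeout);
    }

    public void Dispose()
    {
        _idle.Dispose();
    }
}
```

The lock keeps the counter update and the event transition together, so a Reset from a newly queued item cannot be overtaken by a Set from an item that finished at the same moment.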

The reason I need an instantiable thread pool is because I have different needs. I have work items that take a long time to execute, and I have work items that take a very short time to execute. Executing both types of work items on the same thread pool may cause some serious performance or response problems.

To implement this feature, I just copied Toub's implementation and removed the static keyword from the methods. That's the easy part of it.

The number of threads dynamically changes according to the workload on the threads in the pool, with lower and upper constraints for the number of threads in the pool. This feature is needed so we won't have redundant threads in the application.

This feature is a real issue, and is the core of the Smart Thread Pool. How do you know when to add a new thread and when to remove it?

I decided to add a new thread every time a new work item is queued and all the threads in the pool are busy. The condition for adding a new thread can be summarized as:

(InUseThreads + WaitingCallbacks) > WorkerThreads

where WorkerThreads is the current number of threads in the pool, InUseThreads is the number of threads in the pool that are currently working on a work item, and WaitingCallbacks is the number of waiting work items. (Thanks to jrshute for the comment.)

The SmartThreadPool.Enqueue() method looks like this:

    private void Enqueue(WorkItem workItem)
    {
        // Make sure the workItem is not null
        Debug.Assert(null != workItem);

        // Enqueue the work item
        _workItemsQueue.EnqueueWorkItem(workItem);

        // If all the threads are busy then try
        // to create a new one
        if ((InUseThreads + WaitingCallbacks) > _workerThreads.Count)
        {
            StartThreads(1);
        }
    }

When the number of threads reaches the upper limit, no more threads are created.

I decided to remove a thread from the pool when it is idle (i.e., the thread doesn't work on any work item) for a specific period of time. Each time a thread waits for a work item on the work item's queue, it also waits for a timeout. If the waiting exceeds the timeout, the thread should leave the pool, meaning the thread should quit if it is idle. It sounds like a simple solution, but what about the following scenario: assume that the lower limit of threads is 0 and the upper limit is 100. The idle timeout is 60 seconds. Currently, the thread pool contains 60 threads, and each second, a new work item arrives, and it takes a thread one second to handle a work item. This means that, each minute, 60 work items arrive and are handled by 60 threads in the pool. As a result, no thread exits, since no thread is idle for a full 60 seconds, although 1 or 2 threads are enough to do all the work.

In order to solve the problem of this scenario, you have to calculate how much time each thread worked, and once in a while, exit the threads that don't work for enough time in the timeout interval. This means, the thread pool has to use a timer (use the .NET ThreadPool) or a manager thread to handle the thread pool. To me, it seems an overhead to use a special thread to handle a thread pool.

This led me to the idea that the thread pool mechanism should starve the threads in order to let them quit. So, how do you starve the threads?

All the threads in the pool are waiting on the same work items queue. The work items queue manages two queues: one for the work items and one for the waiters (the threads in the pool). In the trivial implementation, the waiters are kept in a queue (FIFO), so the waiter that arrived first gets the next work item first, and you cannot starve the threads.

Have a look at the following scenario:

The thread pool contains four threads. Let's name them A, B, C, and D. Every second, a new work item arrives, and it takes one and a half seconds to handle each work item:

| Work item arrival time (hh:mm:ss) | Work item work duration (sec) | Threads queue state | The thread that will execute the arrived work item |
|---|---|---|---|
| 00:00:00 | 1.5 | A, B, C, D | A |
| 00:00:01 | 1.5 | B, C, D | B |
| 00:00:02 | 1.5 | C, D, A | C |
| 00:00:03 | 1.5 | D, A, B | D |

In this scenario, all the four threads are used, although two threads could handle all the work items.

The solution is to implement the waiters queue as a stack. In this implementation, the last arrived waiter for a work item gets it first (stack). This way, a thread that just finished its work on a work item waits as the first waiter in the queue of waiters.

The previous scenario will look like this with the new implementation:

| Work item arrival time (hh:mm:ss) | Work item work duration (sec) | Threads queue state | The thread that will execute the arrived work item |
|---|---|---|---|
| 00:00:00 | 1.5 | A, B, C, D | A |
| 00:00:01 | 1.5 | B, C, D | B |
| 00:00:02 | 1.5 | A, C, D | A |
| 00:00:03 | 1.5 | B, C, D | B |

Threads A and B handle all the work items, since they get back to the front of the waiters queue after they have finished. Threads C and D are starved, and if the same work items are going to arrive for a long time, then threads C and D will have to quit.
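The two tables above can be reproduced with a small simulation. The sketch below is only an illustration of the queue-versus-stack idea, not the Smart Thread Pool implementation; the `WaiterOrderDemo` class, its `Simulate` method, and the half-second tick are assumptions made for the example.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical simulation of four waiting threads; time is counted in 0.5 s ticks.
public static class WaiterOrderDemo
{
    // Returns the name of the thread that executes each arriving work item.
    // lifo = false: waiters form a queue; lifo = true: waiters form a stack.
    public static List<string> Simulate(bool lifo, int items)
    {
        const int arrivalEvery = 2; // one work item per second
        const int duration = 3;     // each work item takes 1.5 seconds
        var waiters = new LinkedList<string>(new[] { "A", "B", "C", "D" });
        var busy = new List<(string Name, int Finish)>();
        var executed = new List<string>();

        for (int i = 0; i < items; i++)
        {
            int now = i * arrivalEvery;

            // Threads that have finished go back to waiting, earliest first.
            foreach (var done in busy.Where(b => b.Finish <= now)
                                     .OrderBy(b => b.Finish).ToList())
            {
                busy.Remove(done);
                if (lifo) waiters.AddFirst(done.Name); // back to the front (stack)
                else waiters.AddLast(done.Name);       // back to the end (queue)
            }

            // The waiter at the front takes the arriving work item.
            string next = waiters.First.Value;
            waiters.RemoveFirst();
            busy.Add((next, now + duration));
            executed.Add(next);
        }
        return executed;
    }
}
```

For four work items, `Simulate(false, 4)` yields A, B, C, D and `Simulate(true, 4)` yields A, B, A, B, matching the last column of the two tables.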

The thread pool doesn't implement a load balancing mechanism, since all the threads run on the same machine and share the same CPUs. Note that if you have many threads in the pool, then you will prefer the minimum number of threads to do the job, since each context switch of the threads may result in paging of the threads' stacks. Fewer working threads mean less paging of the threads' stacks.

The work items queue implementation causes threads to starve, and the starved threads quit. This solves the scenario I mentioned earlier without using any extra thread.

The second feature also states that there should be a lower limit to the number of threads in the pool. To implement this feature, every thread that gets a timeout, because it doesn't get any work items, checks whether it can quit. Smart Thread Pool allows the thread to quit only if the current number of threads is above the lower limit. If the number of threads in the pool is below or equal to the lower limit, then the thread stays alive.
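Taken together, the growth condition and the lower and upper limits amount to two small decisions. The following is a sketch of those rules as pure functions; the `PoolSizing` class and its method and parameter names are hypothetical and are not taken from the Smart Thread Pool source.

```csharp
using System;

// Hypothetical helper that restates the sizing rules from the text.
public static class PoolSizing
{
    // A new thread is started when a work item is queued, all threads are
    // busy, and the upper limit has not been reached yet.
    public static bool ShouldStartThread(
        int inUseThreads, int waitingCallbacks, int workerThreads, int maxThreads)
    {
        return workerThreads < maxThreads
            && (inUseThreads + waitingCallbacks) > workerThreads;
    }

    // A thread whose wait for a work item timed out may quit only while
    // the pool is above its lower limit.
    public static bool MayQuitAfterIdleTimeout(int workerThreads, int minThreads)
    {
        return workerThreads > minThreads;
    }
}
```

Keeping the rules free of side effects makes them easy to check in isolation; in a real pool they would be evaluated under whatever lock protects the thread list.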

Returning a value from a work item is very useful in cases where you want to know the result of a work item.

The .NET ThreadPool supports this feature via delegates. Each time you create a delegate, you get the BeginInvoke() and EndInvoke() methods for free. BeginInvoke() queues the method and its parameters on the .NET ThreadPool, and EndInvoke() returns the result of the method. The delegate class is sealed, so I couldn't override the BeginInvoke() and EndInvoke() methods. I took a different approach to implement this.

First, the work item callback delegate can return a value:

    public delegate object WorkItemCallback(object state);

Or in its enhanced form, the callback can be any of the following forms:

    public delegate void Action();
    public delegate void Action<T>(T arg);
    public delegate void Action<T1, T2>(T1 arg1, T2 arg2);
    public delegate void Action<T1, T2, T3>(T1 arg1, T2 arg2, T3 arg3);
    public delegate void Action<T1, T2, T3, T4>(T1 arg1, T2 arg2, T3 arg3, T4 arg4);

    public delegate TResult Func<TResult>();
    public delegate TResult Func<T, TResult>(T arg);
    public delegate TResult Func<T1, T2, TResult>(T1 arg1, T2 arg2);
    public delegate TResult Func<T1, T2, T3, TResult>(T1 arg1, T2 arg2, T3 arg3);
    public delegate TResult Func<T1, T2, T3, T4, TResult>(T1 arg1, T2 arg2, T3 arg3, T4 arg4);

(Note that the above delegates are defined in .NET 3.5. In .NET 2.0 & 3.0, only public delegate void Action<T>(T arg) is defined.)

Second, the SmartThreadPool.QueueWorkItem() method returns a reference to an object that implements the IWorkItemResult<TResult> interface. The caller can use this object to get the result of the work item. The interface is similar to the IAsyncResult interface:

    public interface IWorkItemResult<TResult>
    {
        /// Get the result of the work item.
        /// If the work item didn't run yet then the caller waits
        /// until timeout or until the cancelWaitHandle is signaled.
        /// If the work item threw then GetResult() will rethrow it.
        /// Returns the result of the work item.
        /// On timeout throws WorkItemTimeoutException.
        /// On cancel throws WorkItemCancelException.
        TResult GetResult(
            int millisecondsTimeout,
            bool exitContext,
            WaitHandle cancelWaitHandle);

        /// Some of the GetResult() overloads
        /// get Exception as an output parameter.
        /// In case the work item threw
        /// an exception this parameter is filled with
        /// it and the GetResult() returns null.
        /// These overloads are provided
        /// for performance reasons. It is faster to
        /// return the exceptions as an output
        /// parameter than rethrowing it.
        TResult GetResult(..., out Exception e);

        /// Other GetResult() overloads.
        ...

        /// Gets an indication whether
        /// the asynchronous operation has completed.
        bool IsCompleted { get; }

        /// Returns the user-defined object
        /// that was provided in the QueueWorkItem.
        /// If the work item callback is Action<...>
        /// or Func<...> the State value
        /// depends on the WIGStartInfo.FillStateWithArgs.
        object State { get; }

        /// Cancel the work item execution.
        /// If the work item is in the queue, it won't execute.
        /// If the work item is completed, it will remain completed.
        /// If the work item is already cancelled it will remain cancelled.
        /// If the work item is in progress,
        /// the result of the work item is cancelled.
        /// (See the work item canceling section for more information)
        /// Param: abortExecution - When true send an AbortException
        /// to the executing thread.
        /// Returns true if the work item
        /// was not completed, otherwise false.
        bool Cancel(bool abortExecution);

        /// Get the work item's priority
        WorkItemPriority WorkItemPriority { get; }

        /// Returns the result, same as GetResult().
        /// Note that this property blocks the caller like GetResult().
        TResult Result { get; }

        /// Returns the exception, if occurred, otherwise returns null.
        /// This function is not blocking like the Result property.
        object Exception { get; }
    }

If the work item callback is object WorkItemCallback(object state), then IWorkItemResult is returned, and GetResult() returns object, the same as in previous versions.

If the work item callback is one of the Func<...> methods I mentioned above, the result of QueueWorkItem is IWorkItemResult<TResult>. So, the result of the work item is strongly typed.

If the work item callback is one of the Action<...> methods I mentioned above, the result of QueueWorkItem is IWorkItemResult and GetResult() always returns null.

If the work item callback is Action<...> or Func<...> and WIGStartInfo.FillStateWithArgs is set to true, then the State of the IWorkItemResult is initialized with an object[] that contains the work item arguments. Otherwise, the State is null.

The code examples in the section below show some snippets of how to use it.

To get the result of the work item, use the Result property or the GetResult() method. This method has several overloads. In the interface above, I have written only some of them. The other overloads use fewer parameters by giving default values. GetResult() returns the result of the work item callback. If the work item hasn't completed, then the caller waits until one of the following occurs:

| GetResult() return reason | GetResult() return value |
|---|---|
| The work item has been executed and completed. | The result of the work item. |
| The work item has been canceled. | Throws WorkItemCancelException. |
| The timeout expired. | Throws WorkItemTimeoutException. |
| The cancelWaitHandle is signaled. | Throws WorkItemTimeoutException. |
| The work item threw an exception. | Throws WorkItemResultException with the work item's exception as the inner exception. |
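The last row of the table, together with the output-parameter overloads of GetResult(), can be illustrated with a small stand-alone sketch. It is not the library's code: `CapturedResult<T>` is a hypothetical type, and `InvalidOperationException` merely stands in for WorkItemResultException so that the example compiles without the library.

```csharp
using System;

// Hypothetical result holder showing the two ways of reporting a failure.
public sealed class CapturedResult<T>
{
    private readonly T _value;
    private readonly Exception _error;

    private CapturedResult(T value, Exception error)
    {
        _value = value;
        _error = error;
    }

    // Runs the work item once and captures either its value or its exception.
    public static CapturedResult<T> Run(Func<T> workItem)
    {
        try
        {
            return new CapturedResult<T>(workItem(), null);
        }
        catch (Exception ex)
        {
            return new CapturedResult<T>(default(T), ex);
        }
    }

    // Rethrow style: the captured exception is wrapped as the inner exception.
    // It can be called repeatedly and reports the same failure each time.
    public T GetResult()
    {
        if (_error != null)
        {
            throw new InvalidOperationException("The work item threw an exception.", _error);
        }
        return _value;
    }

    // Output-parameter style: nothing is thrown, the caller inspects 'e'.
    public T GetResult(out Exception e)
    {
        e = _error;
        return _value;
    }
}
```

The second overload avoids throwing a second time, which is the performance argument the article makes for the output-parameter form.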

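There are two ways to wait for a single work item to complete: call GetResult(), which blocks the caller until the result is available, or poll the IsCompleted property in a loop (not recommended, because it is a busy wait).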

Waiting for multiple work items is very useful if you want to run several work items at once and then wait for all of them to complete. The SmartThreadPool class has two static methods for this: WaitAny() and WaitAll() (they have several overloads). Their signature is similar to the WaitHandle equivalent methods, except that in the SmartThreadPool case, they get an array of IWaitableResult objects (the IWorkItemResult interface inherits from IWaitableResult) instead of WaitHandle objects.

The following snippets show how to wait for several work item results at once. Assume wir1 and wir2 are of type IWorkItemResult.

You can wait for both work items to complete:

    // Wait for both work items to complete
    SmartThreadPool.WaitAll(new IWaitableResult[] { wir1, wir2 });

Or, for any of the work items to complete:

    // Wait for at least one of the work items to complete
    SmartThreadPool.WaitAny(new IWaitableResult[] { wir1, wir2 });

The WaitAll() and WaitAny() methods are overloaded, so you can specify the timeout, the exit context, and cancelWaitHandle (just like in the GetResult() method mentioned earlier).

Note that in order to use WaitAny() and WaitAll(), you need to work in MTA, because internally, I use WaitHandle.WaitAny() and WaitHandle.WaitAll(), which require it. If you don't do that, the methods will throw an exception to remind you.

Also note that Windows supports WaitAny() of up to 64 handles. WaitAll() is more flexible, and I re-implemented it so it is not limited to 64 handles.

See the code snippets for WaitAll and WaitAny in the examples section below.
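The article does not say how WaitAll() was re-implemented to get past the 64-handle limit. One possible approach, shown here purely as an assumption, is to wait on the handles one after another while sharing a single deadline. The `WaitHelpers` class and the `WaitAllSequential` method are hypothetical names.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

// Hypothetical helper; not the Smart Thread Pool implementation.
public static class WaitHelpers
{
    // Waits until every handle is signaled or the overall timeout elapses.
    // Pass Timeout.Infinite to wait without a deadline.
    public static bool WaitAllSequential(WaitHandle[] handles, int millisecondsTimeout)
    {
        Stopwatch elapsed = Stopwatch.StartNew();
        foreach (WaitHandle handle in handles)
        {
            int remaining = Timeout.Infinite;
            if (millisecondsTimeout != Timeout.Infinite)
            {
                // Time left from the shared deadline, never negative.
                remaining = (int)Math.Max(0L, millisecondsTimeout - elapsed.ElapsedMilliseconds);
            }
            if (!handle.WaitOne(remaining))
            {
                return false; // this handle was not signaled in time
            }
        }
        return true;
    }
}
```

This suits manual-reset events, which stay signaled once set. With auto-reset events, each successful wait consumes a signal, so the result is not equivalent to the atomic behavior of WaitHandle.WaitAll().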

Work items can be cancelled.

There are several options to cancel work items. To cancel a single work item, call IWorkItemResult.Cancel(). To cancel more than one, call IWorkItemsGroup.Cancel() or SmartThreadPool.Cancel(). All cancel operations work in O(1).

There is no guarantee that a work item will be cancelled; it depends on the state of the work item when the cancel is called and the cooperation of the work item. (Note that the work item's state I mention here has nothing to do with the state object argument provided in QueueWorkItem.)

The possible states of a work item (defined in the WorkItemState enum) are Queued, InProgress, Completed, and Cancelled.

The cancel behavior depends on the state of the work item.

| Initial State | Next State | Notes |
|---|---|---|
| Queued | Cancelled | A queued work item becomes cancelled and is not executed at all. |
| InProgress | Cancelled | An executing work item becomes cancelled even if it completes its execution! |
| Completed | Completed | A completed work item stays completed. |
| Cancelled | Cancelled | A cancelled work item stays cancelled. |

A cancelled work item throws a WorkItemCancelException when its GetResult() method is called.
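The transition table above is small enough to express as a single function. The sketch below declares its own local copy of the WorkItemState enum with the four values named in the text; the `CancelRules` class and `AfterCancel` method are hypothetical and only restate the table.

```csharp
using System;

// Local copy of the states named in the article, for illustration only.
public enum WorkItemState
{
    Queued,
    InProgress,
    Completed,
    Cancelled
}

// Hypothetical helper that encodes the cancel transition table.
public static class CancelRules
{
    // Returns the state a work item is in after Cancel() has been called.
    public static WorkItemState AfterCancel(WorkItemState current)
    {
        // Only a completed work item keeps its state; every other state
        // ends up as Cancelled.
        return current == WorkItemState.Completed
            ? WorkItemState.Completed
            : WorkItemState.Cancelled;
    }
}
```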

The behavior of Cancel() when the work item is in the Completed or Cancelled state is straightforward, so I won't get into the details. A queued work item is marked as cancelled and is discarded once a thread from the pool dequeues it.

If a work item is in the InProgress state, then the behavior depends on the value of abortExecution in the Cancel call. When abortExecution is true, Thread.Abort() is called on the executing thread. When abortExecution is false, the work item method is responsible for sampling the static SmartThreadPool.IsWorkItemCanceled property and quitting. Note that, in both cases, the work item is cancelled, and throws a WorkItemCancelException on GetResult().

Here is an example of a cooperative work item:

    private void DoWork()
    {
        // Do something here.

        // Sample SmartThreadPool.IsWorkItemCanceled
        if (SmartThreadPool.IsWorkItemCanceled)
        {
            return;
        }

        // Sample SmartThreadPool.IsWorkItemCanceled in a loop
        while (!SmartThreadPool.IsWorkItemCanceled)
        {
            // Do some work here
        }
    }

Passing the caller thread's context should be elementary, but it is not so simple to implement. In order to pass the thread's context, the caller thread's CompressedStack should be passed. This is impossible, since Microsoft blocks this option with security. Other parts of the thread's context can be passed. These include CurrentCulture, CurrentUICulture, CurrentPrincipal, and CurrentContext.

The first three belong to the System.Threading.Thread class (static or instance), and are get/set properties. However, the last one is a read only property. In order to set it, I used Reflection, which slows down the application. If you need this context, just remove the comments from the code.

To simplify the operation of capturing the context and then applying it later, I wrote a special class, called CallerThreadContext, that is used internally and does all that stuff. When Microsoft unblocks the protection on the CompressedStack, I will add it there.

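The caller thread's context is stored when the work item is created, within the EnqueueWorkItem() method. Each time a thread from the pool executes a work item, the thread's context changes in the following order: the current thread context is captured, the caller thread's context is applied, the work item is executed, and the old thread context is restored.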
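The capture, apply, execute, restore sequence can be sketched for the two culture properties using only the standard Thread API. This is a simplified illustration and not the internal CallerThreadContext class; `ContextFlow` and `RunWithCallerCulture` are hypothetical names, and the principal and remoting context are left out.

```csharp
using System;
using System.Globalization;
using System.Threading;

// Hypothetical helper; covers only CurrentCulture and CurrentUICulture.
public static class ContextFlow
{
    public static T RunWithCallerCulture<T>(
        CultureInfo callerCulture, CultureInfo callerUICulture, Func<T> workItem)
    {
        Thread current = Thread.CurrentThread;

        // 1. Capture the pool thread's own context.
        CultureInfo savedCulture = current.CurrentCulture;
        CultureInfo savedUICulture = current.CurrentUICulture;
        try
        {
            // 2. Apply the caller's context.
            current.CurrentCulture = callerCulture;
            current.CurrentUICulture = callerUICulture;

            // 3. Execute the work item.
            return workItem();
        }
        finally
        {
            // 4. Restore the old context, even if the work item threw.
            current.CurrentCulture = savedCulture;
            current.CurrentUICulture = savedUICulture;
        }
    }
}
```

Restoring in a finally block keeps a failing work item from leaking the caller's culture into the next work item that runs on the same pool thread.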

The seventh feature is a result of Kevin's comment on the earlier

version of Smart Thread Pool. It seemed that the test application

consumed a lot of handles (Handle Count in the Task Manager) without

freeing them. After a few tests, I got to the conclusion that the Close()

method of the ManualResetEvent

class doesn't always

release the Win32 event handle immediately, and waits for the garbage

collector to do that. Hence, running the GC explicitly releases the

handles.

To make this problem less acute, I used a new approach. First, I

wanted to create less number of handles; second, I wanted to reuse the

handles I had already created. Therefore, I need not expose any WaitHandle

,

but use them internally and then close them.

In order to create fewer handles, I created the ManualResetEvent

objects only when the user asks for them (lazy creation). For example,

if you don't use the GetResult()

of the IWorkItemResult

interface, then a handle is not created. Using SmartThreadPool

.WaitAll()

and SmartThreadPool

.WaitAny()

creates a

handle.

The work item queue used to create a lot of handles, since each new wait for a work item created a new ManualResetEvent, that is, one handle per work item. The waiters of the queue are always the same threads, and a thread cannot wait more than once at a time. So now, every thread in the thread pool has its own ManualResetEvent and reuses it. To avoid coupling the work item queue to the thread pool implementation, the work item queue stores a context inside the TLS (Thread Local Storage) of the thread.
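A minimal sketch of the one-event-per-thread idea, assuming a [ThreadStatic] field as the thread-local storage. The PerThreadWaiter helper is hypothetical; the library's actual waiter context may look quite different.

```csharp
using System;
using System.Threading;

// Hypothetical helper; the name and shape are illustrative only.
public static class PerThreadWaiter
{
    // [ThreadStatic] gives every thread its own slot (thread-local storage).
    [ThreadStatic]
    private static ManualResetEvent t_event;

    // Returns the calling thread's event, creating it on first use.
    public static ManualResetEvent Current
    {
        get
        {
            if (t_event == null) t_event = new ManualResetEvent(false);
            return t_event;
        }
    }

    // Reuse pattern: reset before each wait instead of allocating anew.
    public static ManualResetEvent PrepareForWait()
    {
        ManualResetEvent e = Current;
        e.Reset();
        return e;
    }
}
```

Because the event lives in the thread's own storage, the queue does not need a reference to the pool's thread objects in order to find it.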

A PostExecute is a callback method that is called right after the work item execution has been completed. It runs in the same context as the thread that executes the work item. The user can choose the cases in which PostExecute is called. The options are represented in the CallToPostExecute flags enumeration:

[Flags]
public enum CallToPostExecute
{
    Never = 0x00,
    WhenWorkItemCanceled = 0x01,
    WhenWorkItemNotCanceled = 0x02,
    Always = WhenWorkItemCanceled | WhenWorkItemNotCanceled,
}

Explanation:

SmartThreadPool has a default CallToPostExecute value of CallToPostExecute.Always. This can be changed during the construction of SmartThreadPool through the STPStartInfo class argument. Another way to set the CallToPostExecute value is through one of the SmartThreadPool.QueueWorkItem overloads. Note that, as opposed to the WorkItem execution, an exception thrown during PostExecute is ignored. PostExecute is a delegate with the following signature:

public delegate void PostExecuteWorkItemCallback(IWorkItemResult wir);

As you can see, PostExecute receives an argument of type IWorkItemResult. It can be used to get the result of the work item, or any other information made available by the IWorkItemResult interface.
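How a flags value like CallToPostExecute might be tested against a work item's outcome can be sketched as below. The PostExecutePolicy helper is hypothetical and only illustrates the bitwise check; the enumeration is repeated so that the sketch compiles on its own.

```csharp
using System;

// Re-declared from the listing above so the sketch compiles on its own.
[Flags]
public enum CallToPostExecute
{
    Never = 0x00,
    WhenWorkItemCanceled = 0x01,
    WhenWorkItemNotCanceled = 0x02,
    Always = WhenWorkItemCanceled | WhenWorkItemNotCanceled,
}

public static class PostExecutePolicy
{
    // Hypothetical helper: true when the callback should run for this outcome.
    public static bool ShouldCall(CallToPostExecute setting, bool wasCanceled)
    {
        CallToPostExecute needed = wasCanceled
            ? CallToPostExecute.WhenWorkItemCanceled
            : CallToPostExecute.WhenWorkItemNotCanceled;
        return (setting & needed) == needed;
    }
}
```

With this check, Always matches both outcomes because it is the bitwise OR of the two single flags, and Never matches neither.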

When the user calls QueueWorkItem, they can provide a state object. The state object usually stores specific information, such as arguments that should be used within the WorkItemCallback delegate.

The state object's lifetime depends on its contents and the user's application. Sometimes it is useful to dispose of the state object right after the work item has been completed, especially if it contains unmanaged resources.

For this reason, I added a boolean to SmartThreadPool that indicates whether to call Dispose on the state object when the work item has been completed. The boolean is initialized when the thread pool is constructed with STPStartInfo. Dispose is called only if the state object implements the IDisposable interface. Dispose is called after the WorkItem has been completed and its PostExecute has run (if a PostExecute exists). The state object is disposed even if the work item has been canceled or the thread pool has been shut down.

Note that this feature only applies to the state argument that comes with WorkItemCallback; it doesn't apply to the arguments supplied in Action<...> and Func<...>.
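The ordering described above (work item, then PostExecute, then Dispose) can be sketched as follows. WorkItemRunner and TrackedState are hypothetical names; the sketch covers only the normal and exception paths, not cancellation or shutdown.

```csharp
using System;

public static class WorkItemRunner
{
    // Hypothetical runner: executes the callback, then PostExecute,
    // then disposes the state object when the option is enabled.
    public static object Run(
        Func<object, object> callback,
        object state,
        Action<object> postExecute,
        bool disposeOfStateObjects)
    {
        try
        {
            object result = callback(state);
            if (postExecute != null) postExecute(result);
            return result;
        }
        finally
        {
            // Only objects that implement IDisposable are disposed.
            IDisposable disposable = state as IDisposable;
            if (disposeOfStateObjects && disposable != null)
            {
                disposable.Dispose();
            }
        }
    }
}

// Sample state object that records whether Dispose was called.
public sealed class TrackedState : IDisposable
{
    public bool Disposed { get; private set; }

    public void Dispose()
    {
        Disposed = true;
    }
}
```

The `as IDisposable` cast is what makes the option harmless for state objects that hold nothing to release.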

Work item priority lets the user order work items at run time. Work items are ordered by their priority, and higher-priority items are handled first. There are five priorities:

public enum WorkItemPriority
{
    Lowest,
    BelowNormal,
    Normal,
    AboveNormal,
    Highest,
}

The default priority is Normal.

The implementation of priorities is quite simple. Instead of using one queue that keeps the work items sorted inside, I used one queue for each priority. Each queue is a FIFO. When the user enqueues a work item, the work item is added to the queue with a matching priority. When a thread dequeues a work item, it looks for the highest priority queue that is not empty.

This is the easiest solution to sort the work items.
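The one-queue-per-priority scheme can be sketched like this. PriorityWorkQueue is a hypothetical, non-thread-safe illustration rather than the library's queue, and the enumeration is repeated so that the sketch compiles on its own.

```csharp
using System;
using System.Collections.Generic;

// Re-declared from the listing above so the sketch compiles on its own.
public enum WorkItemPriority
{
    Lowest,
    BelowNormal,
    Normal,
    AboveNormal,
    Highest,
}

// Hypothetical, non-thread-safe illustration of one FIFO queue per priority.
public sealed class PriorityWorkQueue<T>
{
    private readonly Queue<T>[] _queues;

    public PriorityWorkQueue()
    {
        int levels = Enum.GetValues(typeof(WorkItemPriority)).Length;
        _queues = new Queue<T>[levels];
        for (int i = 0; i < levels; i++)
        {
            _queues[i] = new Queue<T>();
        }
    }

    // Add the item to the FIFO queue that matches its priority.
    public void Enqueue(T item, WorkItemPriority priority)
    {
        _queues[(int)priority].Enqueue(item);
    }

    // Take from the highest-priority queue that is not empty.
    public bool TryDequeue(out T item)
    {
        for (int i = _queues.Length - 1; i >= 0; i--)
        {
            if (_queues[i].Count > 0)
            {
                item = _queues[i].Dequeue();
                return true;
            }
        }
        item = default(T);
        return false;
    }
}
```

Enqueue is constant time, and a dequeue scans at most five queues, so no sorting is ever needed.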

This feature improves on feature 6 and was implemented by Steven T. I just replaced my code with that implementation.

With this feature, the Smart Thread Pool can be used with ASP.NET to pass the context of HTTP between the caller thread and the thread in the pool that will execute the work item.

This feature enables the user to execute a group of work items specifying the maximum level of concurrency.

For example, assume that your application uses several resources that are not thread safe, so only one thread can use a resource at a time. There are a few solutions to this, from creating one thread that uses all resources to creating a thread per resource. The first solution doesn't harness the power of parallelism; the latter is too expensive (many threads) if the resources are idle most of the time.

The Smart Thread Pool solution is to create a WorkItemsGroup per resource, with a concurrency of one. Each time a resource needs to do some work, a work item is queued into its WorkItemsGroup. The concurrency of the WorkItemsGroup is one, so only one work item can run at a time per resource. The number of threads dynamically changes according to the load of the work items.

Here is a code snippet to show how this works:

...

// Create a SmartThreadPool
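Independently of the library, the idea of a group with bounded concurrency can be sketched on top of the built-in .NET ThreadPool. BoundedGroup is a hypothetical class, not the WorkItemsGroup implementation: it keeps its own FIFO queue and never has more than the configured number of drain loops running on the shared pool.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Hypothetical group that limits how many of its items run at once.
public sealed class BoundedGroup
{
    private readonly object _lock = new object();
    private readonly Queue<Action> _pending = new Queue<Action>();
    private readonly int _concurrency;
    private int _running;   // number of active drain loops

    public BoundedGroup(int concurrency)
    {
        if (concurrency < 1) throw new ArgumentOutOfRangeException("concurrency");
        _concurrency = concurrency;
    }

    public void Queue(Action work)
    {
        lock (_lock)
        {
            _pending.Enqueue(work);
            if (_running >= _concurrency) return;   // an active loop will pick it up
            _running++;
        }
        ThreadPool.QueueUserWorkItem(delegate { Drain(); });
    }

    // Runs queued items one after another until the queue is empty.
    private void Drain()
    {
        while (true)
        {
            Action next;
            lock (_lock)
            {
                if (_pending.Count == 0)
                {
                    _running--;
                    return;
                }
                next = _pending.Dequeue();
            }
            try
            {
                next();
            }
            catch (Exception)
            {
                // Sketch only: a real pool would report the failure.
            }
        }
    }
}
```

Creating one such group per resource with a concurrency of one serializes access to each resource while different resources still run in parallel on the shared pool.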
